IBM is teaching AI to behave more like the human brain

Since the days of Da Vinci’s “Ornithoper“, mankind’s greatest minds have sought inspiration from the natural world for their technological creations. It’s no different in the modern world, where bleeding-edge advancements in machine learning and artificial intelligence have begun taking their design cues from the most advanced computational organ in the natural word: the human brain.

Mimicking our gray matter isn’t just a clever means of building better AIs, faster. It’s absolutely necessary for their continued development. Deep learning neural networks — the likes of which power AlphaGo as well as the current generation of image recognition and language translation systems — are the best machine learning systems we’ve developed to date. They’re capable of incredible feats but still face significant technological hurdles, like the fact that in order to be trained on a specific skill they require upfront access to massive data sets. What’s more if you want to retrain that neural network to perform a new skill, you’ve essentially got to wipe its memory and start over from scratch — a process known as “catastrophic forgetting”.

Compare that to the human brain, which learns incrementally rather than bursting forth fully-formed from a sea of data points. It’s a fundamental difference: deep learning AIs are generated from the top down, knowing everything it needs to from the get-go, while the human mind is built from the ground up with previous lessons learned being applied to subsequent experiences to create new knowledge.

What’s more, the human mind is especially adept at performing relational reasoning, which relies on logic to build connections between past experiences to help provide insight into new situations on the fly. Statistical AI (ie machine learning) is capable of mimicking the brain’s pattern recognition skills but is garbage at applying logic. Symbolic AI, on the other hand, can leverage logic (assuming it’s been trained on the rules of that reasoning system), but is generally incapable of applying that skill in real-time.

But what if we could combine the best features of the human brain’s computational flexibility with AI’s massive processing capability? That’s exactly what the team from DeepMind recently tried to do. They’ve constructed a neural network able to apply relational reasoning to its tasks. It works in much the same way as the brain’s network of neurons. While neurons use their various connections with each other to recognize patterns, “We are explicitly forcing the network to discover the relationships that exist” between pairs of objects in a given scenario, Timothy Lillicrap, a computer scientist at DeepMind told Science Magazine.

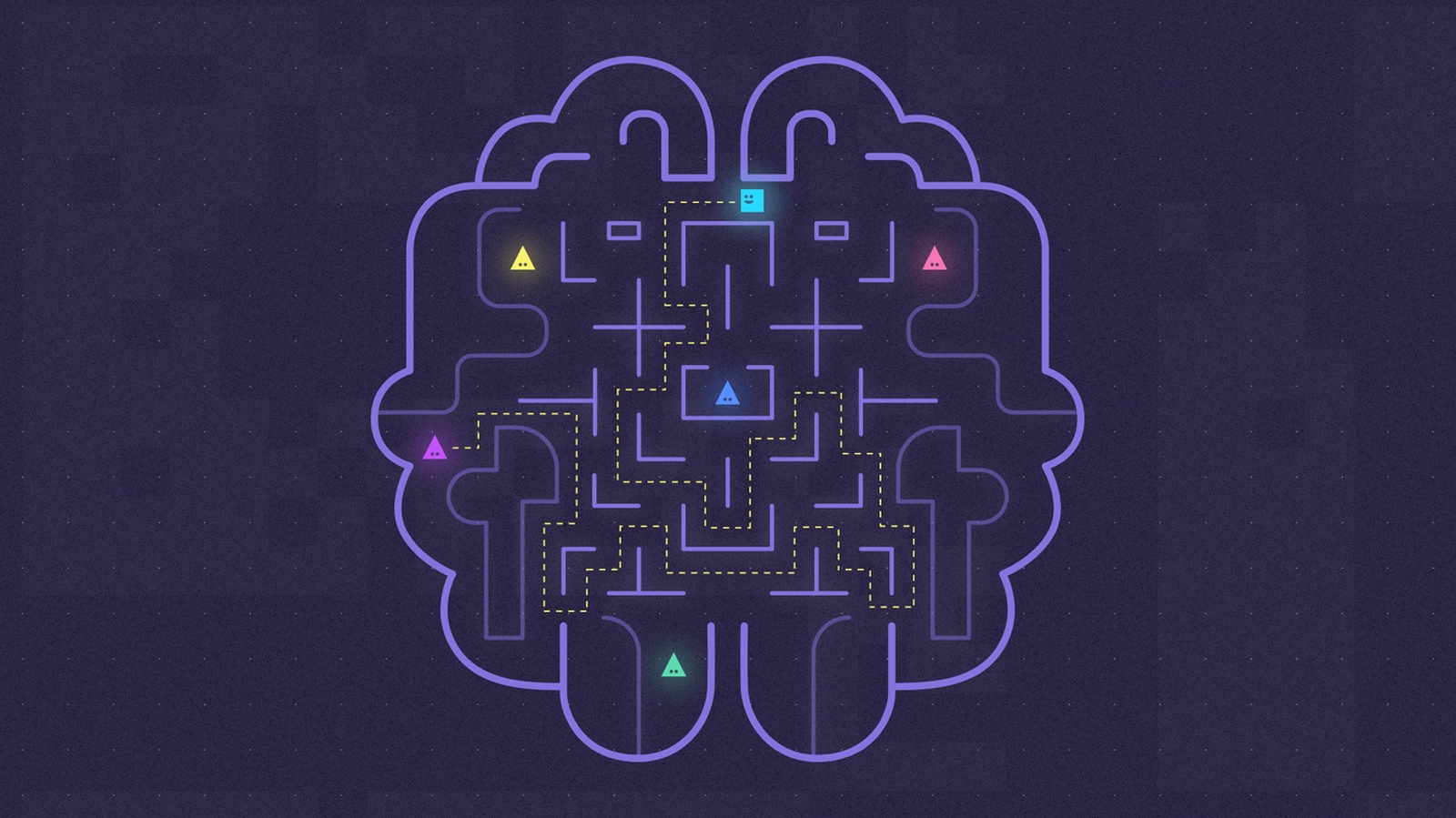

When subsequently tasked in June with answering complex questions about the relative positions of geometric objects in an image — ie “There is an object in front of the blue thing; does it have the same shape as the tiny cyan thing that is to the right of the gray metal ball?” — it correctly identified the object in question 96 percent of the time. Conventional machine learning systems got it right a paltry 42 – 77 percent of the time. Heck even humans only succeeded in the test 92 percent of the time. That’s right, this hybrid AI is better at the task than the humans that built it to do.

The results were the same when the AI was presented with word problems. Though conventional systems were able to match DeepMind on simpler queries such as “Sarah has a ball. Sarah walks into her office. Where is the ball?” the hybrid AI system destroyed the competition on more complex, inferential questions like “Lily is a Swan. Lily is white. Greg is a swan. What color is Greg?” On those, DeepMind answered correctly 98 percent of the time compared to around 45 percent for its competition.

Image: DeepMind

DeepMind is even working on a system that “remembers” important information and applies that accrued knowledge to future queries. But IBM is taking that concept and going two steps further. In a pair of research papers presented at the 2017 International Joint Conference on Artificial Intelligence held in Melbourne, Australia last week, IBM submitted two studies: one looking into how to grant AI an “attention span”, the other examining how to apply the biological process of neurogenesis — that is, the birth and death of neurons — to machine learning systems.

“Neural network learning is typically engineered and it’s a lot of work to actually come up with a specific architecture that works best. It’s pretty much a trial and error approach,” Irina Rish, an IBM research staff member, told Engadget. “It would be good if those networks could build themselves.”

IBM’s attention algorithm essentially informs the neural network as to which inputs provide the highest reward. The higher the reward, the more attention the network will pay to it moving forward. This is especially helpful in situations where the dataset is not static — ie, real life. “Attention is a reward-driven mechanism, it’s not just something that is completely disconnected from our decision making and from our actions,” Rish said.

“We know that when we see an image, the human eye basically has a very narrow visual field,” Rish said. “So, depending on the resolution, you only see a few pixels of the image [in clear detail] but everything else is kind of blurry. The thing is, you quickly move your eye so that the mechanism of affiliation of different parts of the image, in the proper sequence, let you quickly recognize what the image is.”

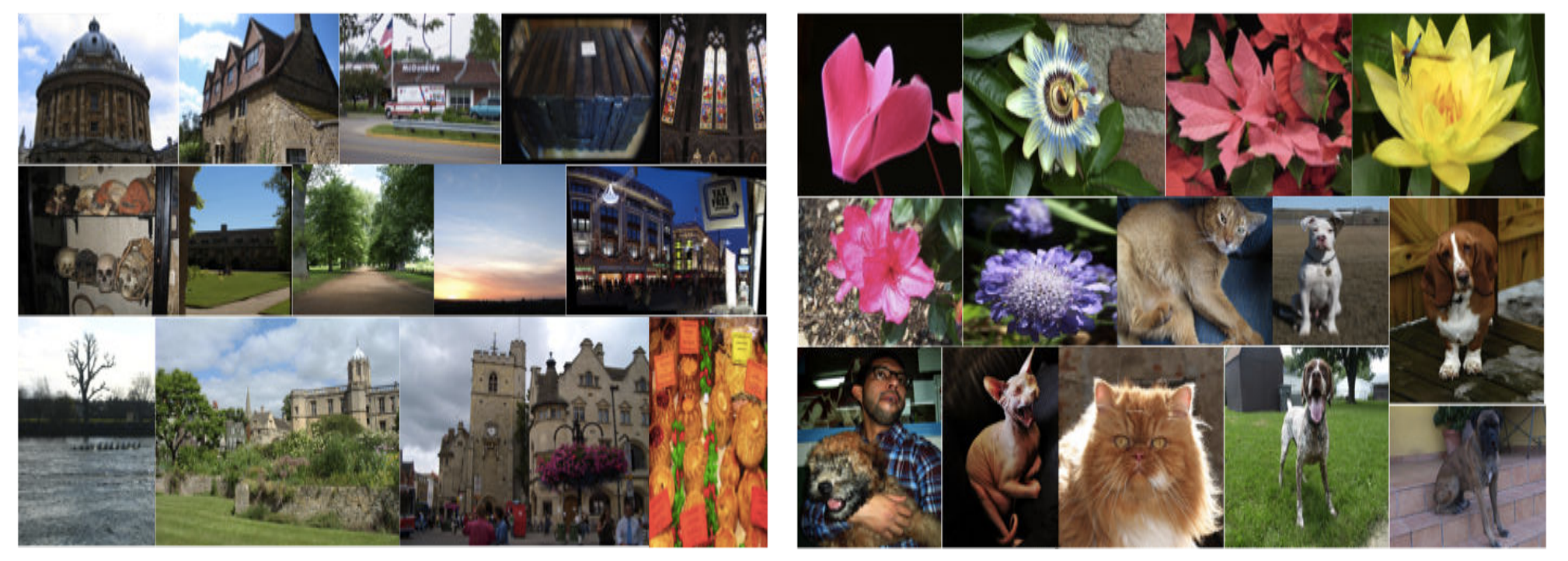

Examples of Oxford dataset training images – Image: USC/IBM

The attention function’s first use will likely be in image recognition applications, though it could be leveraged into a variety of fields. For example, if you train an AI using the Oxford dataset — which is primarily architectural images — it will be easily able to correctly identify cityscapes. But if you then show it a bunch of pictures from countryside scenes (fields and flowers and such) the AI is going to brick because it has no knowledge of what flowers are. However, you do the same test with humans and animals and you’ll trigger neurogenesis as their brains try to adapt what they already know about what cities look like to the new images of the country.

This mechanism basically tells the system what it should focus on. Take your doctor for example, she can run hundreds of potential tests on you to determine what ails you, but that’s not feasible — either time-wise or money-wise. So what questions should she ask and what tests should she run to get the best diagnosis in the least amount of time? “That’s what the algorithm learns to figure out,” Rish explained. It doesn’t just figure out what decision leads to the best outcome, it also learns where to look in the data. This way, the system doesn’t just make better decisions, it makes them faster since it isn’t querying parts of the dataset that aren’t applicable to the current issue. It’s the same way that your doctor doesn’t tap your knees with that weird little hammer thing when you come in complaining of chest pain and shortness of breath.

While the attention system is handy for ensuring that the network stays on task, IBM’s work into neural plasticity (how well memories “stick”) serves to provide the network with long term recollection. It’s actually modelled after the same mechanisms of neuron birth and death seen in the human hippocampus.

With this system, “You don’t have to necessarily have to start with and absolutely humongous model millions of parameters,” Rish explained. “You can start with a much smaller model. And then, depending on the data you see, it will adapt.”

When presented with new data, IBM’s neurogenetic system begins forming new and better connections (neurons) while some of the older, less useful ones will be “pruned” as Rish put it. That’s not to say that the system is literally deleting the old data, it simply isn’t linking to it as strongly — same way that your old day-to-day memories tend to get fuzzy over the years but those which carry a significant emotional attachment remain vivid for years afterward.

“Neurogenesis is a way to adapt deep networks,” Rish said. “The neural network is the model and you can build this model from scratch or you can change this model as you go because you have multiple layers of hidden units and you can decide how many layers of hidden units (neurons) you want to have… depending on the data.”

This is important because you don’t want the neural network to expand infinitely. If it did, the data set would become so large as to be unwieldy even for the AI — the digital equivalent of Hyperthymesia. “It also helps with normalization, so [the AI] doesn’t ‘overthink’ the data,” Rish said.

Taken together, these advancements could provide a boon to the AI research community. Rish’s team next wants to work on what they call “internal attention.” You’ll not just choose what inputs you want the network to look at but what parts of the network you want to employ in the calculations based on the dataset and inputs. Basically the attention model will cover the short term, active, thought process while the memory portion will enable the network to streamline its function depending on the current situation.

But don’t expect to see AIs rivalling the depth of human consciousness anytime soon, Rish warns. “I would say at least a few decades — but again that’s probably a wild guess. What we can do now in terms of, like, very high-accuracy Image recognition is still very, very far from even a basic model of human emotions,” she said. “We’re only scratching the surface.”

(137)