If Something Is Going To Destroy Humanity, It’s Going To Be One Of These Catastrophes

Global catastrophes, defined as events that wipe out at least 10% of the world population, obviously don’t happen very often. But they have happened in the past; the plague in the 14th century, for example, killed as much as 17% of the global population. More recently, the Spanish flu in 1918 killed between 50 and 100 million people: not quite an official catastrophe by this definition, but still as much as 5% of the people in the world.

That was before modern medicine. Today, though, we face even more potential risks. A new report, Global Catastrophic Risk 2016, outlines exactly what might go wrong—and what we might do to prevent it.

Pandemics

Despite medical advances, pandemics like the plague or the flu are still a risk. If the H5N1 bird flu became easily transmissible between humans, by some estimates, it could kill as many as 1.7 billion people. Right now, it doesn’t easily spread. But two recent scientific papers showed how to make a version that could be more transmissible. As new gene editing techniques such as CRISPR make it simpler and cheaper to change organisms, there’s more of a risk of accidental release of lethal diseases from labs, or intentional release by terrorists.

Natural pandemics are also still a risk; flu pandemics happen relatively frequently (they’ve occurred 10 times in the last 300 years, though none so far has been at the scale of “catastrophe”). It’s possible that a disease as transmissible as some types of the flu, but as lethal as Ebola, could emerge, and spread around the world as people travel.

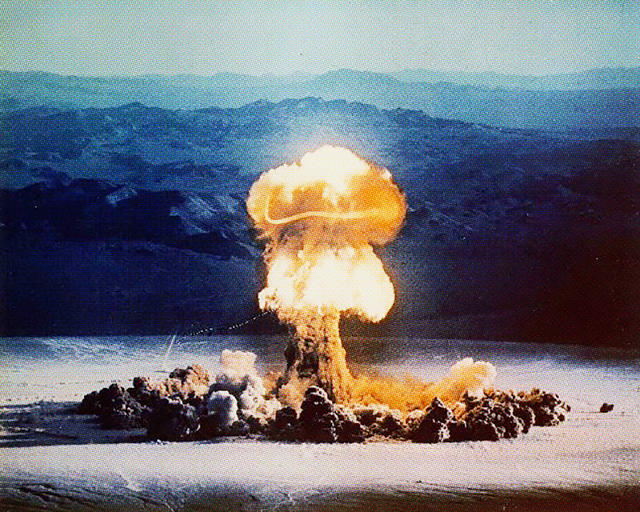

Nuclear war

Nuclear war is also still a risk, though there are far fewer warheads around now (9,920, as of 2014) than there were a few decades ago. This isn’t just a movie scenario. On multiple occasions in the past, we’ve come close to nuclear war: In 1995, Russian systems read a Norwegian weather rocket as a nuclear attack, and Yeltsin had launch codes ready.

In addition to killing millions of people, the smoke from bombs could block sunlight and end food production for years. The most likely intentional nuclear war right now might be between India and Pakistan. By some pessimistic estimates, the nuclear winter that would follow a hypothetical conflict between those two countries could starve 1 billion people.

Super volcanoes and asteroids

There are other, less likely natural risks. A super volcano that erupted in Indonesia 70,000 years ago seems to have caused mass extinction as clouds of dust and sulphates blocked out light and killed plants. This type of eruption may happen every 30,000 to 50,000 years. Asteroids and comets could also potentially kill 10% of the global population or, if the asteroid were larger than 1.5 kilometers, everyone in the world. The risk is fairly small, though: a 1 in 1,250 chance of impact in a 100-year period.
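To put those two figures on the same footing, here is a minimal Python sketch that converts them to comparable probabilities. It is an illustration, not the report’s method: the function names are hypothetical, and it assumes the risk is constant from year to year and that events are independent, which the article does not state.

```python
import math


def annual_probability(p_period: float, years: int) -> float:
    """Per-year probability equivalent to p_period over `years` years.

    Assumption (not from the report): the yearly risk is constant and
    each year is independent of the others.
    """
    return 1.0 - (1.0 - p_period) ** (1.0 / years)


def probability_within(mean_interval_years: float, horizon_years: float) -> float:
    """Chance of at least one event within the horizon.

    Assumption (not from the report): events follow a Poisson process
    with the given average recurrence interval.
    """
    return 1.0 - math.exp(-horizon_years / mean_interval_years)


# Figures quoted in the article.
asteroid_per_century = 1 / 1250
asteroid_per_year = annual_probability(asteroid_per_century, 100)

# Super-volcano recurrence of 30,000 to 50,000 years, over one century.
eruption_low = probability_within(50_000, 100)
eruption_high = probability_within(30_000, 100)

print(f"Asteroid impact, per year: {asteroid_per_year:.2e}")
print(f"Super-volcano eruption, per century: {eruption_low:.2%} to {eruption_high:.2%}")
```

Under these assumptions, the asteroid figure works out to roughly 1 in 125,000 per year, and the chance of a super-volcano eruption in any given century falls between about 0.2% and 0.33%.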

Catastrophic climate change

While ordinary climate change is obviously pretty bad, climate change beyond our projections that totally destroys the environment is also possible. If researchers have underestimated the sensitivity of climate systems, or if feedback loops start happening—like arctic permafrost melting, and releasing methane that speeds up warming, melting more permafrost—it’s possible that temperatures could rise six degrees or more, making huge chunks of the planet mostly uninhabitable. Some scientists have estimated that there’s a slight chance of six degrees of warming even if emissions are dramatically cut now.

Emerging risks

As with a bioengineered flu designed by terrorists, other real-life viruses could be engineered using information available on the Internet. The genetic data for smallpox, for example, is easy to find online, and new tools make it possible to synthesize a real bug from this information.

And then there are potential unintended consequences: The robots we’re developing today are expected to be able to do what humans can do at some point in the next few decades, and soon after that, to be “superintelligent.” They may decide they don’t need us. To prevent destruction from climate change, we might try to geoengineer a solution, but this also comes with risks, especially if some countries decide to deploy geoengineering without worrying about how those actions affect weather in other regions.

The report looks at how likely each of these risks is to materialize within the next few years, and how they should be prioritized. Many are interrelated: climate change, for example, may cause mass migration into slums with poor sanitation, increasing the risk of a global pandemic. But many of the solutions, such as building up resilience, are also connected.

“Because all of the risks have the shared property of seeming a little bit unreal—a little bit far off—many of the political effects that cause them to be under-addressed are shared, and can be tackled collectively,” says Sebastian Farquhar of the Global Priorities Project at Oxford University, one of the authors of the report.

The report suggests specific solutions, such as potentially requiring researchers using new gene editing techniques to get a license, building up a better stock of vaccines and drugs around the world, or implementing carbon taxes. The authors hope that laying out the problems clearly can help push for prioritization of issues that often don’t get as much attention.

Farquhar believes all of the challenges are addressable. “I’m still saving up a pension, so I guess that tells you something,” he says. “I’m an optimistic person. I think we can overcome these challenges, but it’s not going to happen automatically.”

He points to the fact that nuclear war has been avoided in the past because individual officers have decided, at the last minute, not to push the button. “I think we can put a lot of faith in conscience, in individuals doing what’s right, but we can’t rely on that forever,” he says. “We do need to set the structures up to support that, and to try to build a more collaborative and trust-based global environment, with more coordination and collaboration between states. Otherwise we’re going to just keep rolling the dice, and one day, we’ll lose that dice roll.”

Source: Fast Company
