Independent auditors are struggling to hold AI companies accountable

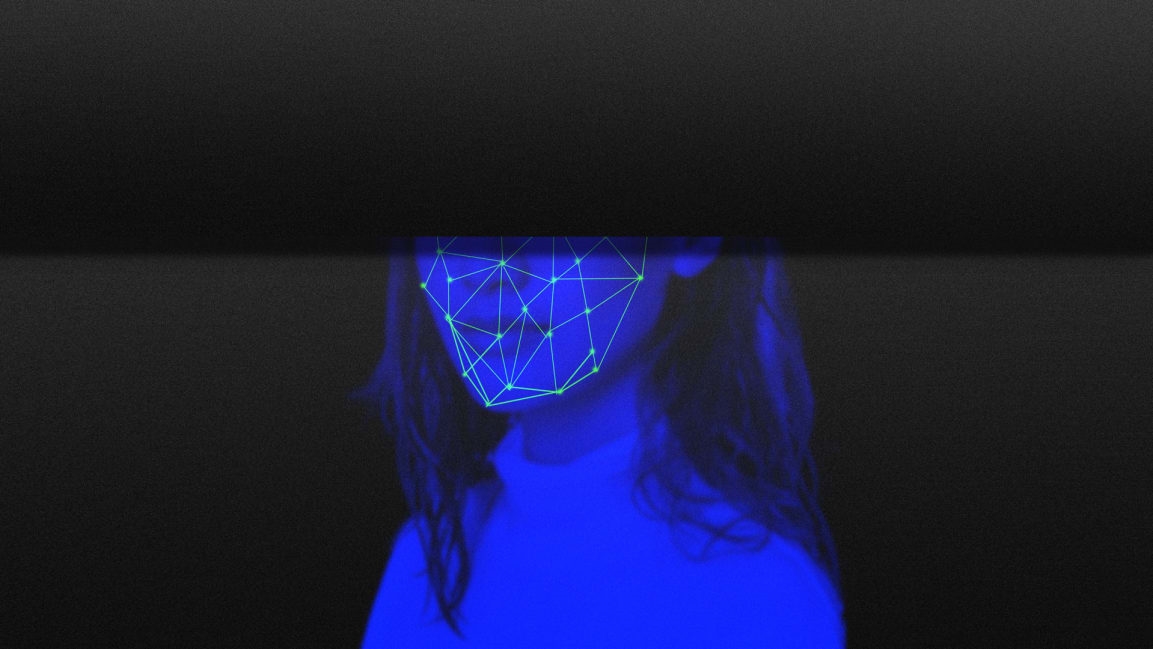

For years, HireVue’s fully autonomous software, which uses AI to analyze facial features and movements during job interviews, has drawn criticism both for its grandiose claims and for the high likelihood of biased outcomes. The company had previously shrugged off those concerns, since facial and audio analysis was a central part of its sales pitch, even though its methods rest on the dubious idea that a person’s facial expressions or tone of voice can reveal whether they’d be good at a particular job. So it was especially surprising that, when announcing it would stop using facial analysis, HireVue also cited an independent audit that supposedly cleared its algorithms of bias.

Yet the right response is not surprise but incredulity. On closer inspection, it’s not clear that either claim (that HireVue has stopped using facial analysis, or that an independent audit cleared its algorithms of bias) is completely true. And in mischaracterizing the audit, HireVue reveals the shaky foundations of the new algorithmic auditing industry.

FACIAL ANALYSIS IN OLDER ASSESSMENTS

HireVue’s press release says that the company has stopped using facial analysis in new assessments, the evaluation tools that HireVue sells to companies. However, the company will continue to use facial characteristics to determine employability in preexisting models at least into this year.

HireVue’s assessments range from a simple game of math proficiency to custom evaluations that predict specific job outcomes, such as sales figures or performance reviews, based on resumes, psychometric tests, and video interviews. These custom assessments are expensive and time-consuming to build, so they could plausibly remain in use for years after their development. Last year, HireVue algorithms analyzed millions of job interviews, an unknown share of which relied on facial analysis. HireVue now says it is removing the facial analysis component of these assessments when they come up for review.

HireVue calls its policy change “proactive,” but it came many months after a chorus of criticism and a formal complaint filed with the Federal Trade Commission. HireVue’s statement also takes credit for having an expert advisory board, but neglects to mention that the board’s AI fairness expert, Suresh Venkatasubramanian, “quit over [HireVue’s] resistance to dropping video analysis” in 2019.

A BURGEONING BUT TROUBLED INDUSTRY

Yet most concerning is how HireVue misrepresents the analysis by O’Neil Risk Consulting & Algorithmic Auditing (ORCAA), the consultancy founded and run by Cathy O’Neil, a PhD mathematician and author of the skeptical AI book Weapons of Math Destruction.

The idea of algorithmic auditing is that an independent party scrutinizes the inner workings of an algorithmic system. It might evaluate concerns about bias and fairness, check for unintended consequences, and create more transparency to build consumer trust.

HireVue’s statement uses a partial quote and misleading phrasing to suggest that the ORCAA algorithmic audit broadly verifies the absence of bias in HireVue’s many assessments. However, having viewed a copy of the ORCAA audit, I don’t believe it supports the conclusion that all of HireVue’s assessments are unbiased. The audit was narrowly focused on a specific use case, and it didn’t examine the assessments for which HireVue has been criticized, which include facial analysis and employee performance predictions.

I would quote relevant sections of the ORCAA report, except that in order to download it, I was forced to agree not to share the document in any way. You can download the entire report for yourself. HireVue did not respond by press time to a request for comment about how it characterized the report.

HireVue’s shady behavior encapsulates the challenges facing the emerging market for algorithmic audits. While the concept sounds similar to well-established auditing practices such as financial accounting and tax compliance, algorithmic auditing lacks the necessary incentives to function as a check on AI applications.

Consider the market incentives. If clients of a financial auditing company keep releasing clean balance sheets until the moment they go bankrupt, you might not be able to trust that auditor. Look at how Ernst & Young has suffered for missing a client’s $2 billion of alleged fraud. That Ernst & Young is taking such reputational damage and possibly faces legal liability is a good thing: it means there are clear costs to making big mistakes.

Yet there is no parallel in algorithmic auditing, as algorithmic harms tend to be individualized and hard to diagnose. Moreover, companies don’t face consequences when their algorithms are discriminatory. If anything, self-evaluation and disclosure can pose a bigger risk. For instance, people remember that Amazon built a sexist hiring algorithm, but may forget that Amazon discovered the problem and scrapped the project on its own. Amazon’s lack of transparency about the project, which came to light through an investigative report, also contributed to the blowback. Still, the company might have been just as well off, at least in the public eye, had it done no introspection at all.

Government oversight can also be a mechanism for keeping audits honest. If clients of a certain tax accountant keep getting fined by the Internal Revenue Service, you might pick another firm. The Trump administration largely eschewed this kind of oversight, choosing to take a light-touch approach to algorithmic regulation. The new Biden White House could swiftly change course, using available tools to enforce existing anti-discrimination laws on algorithms.

The auditors themselves also don’t have a clear reason to be as critical as necessary—especially if the company they’re auditing is the one writing the check. It’s a mistake to assume that analyzing algorithms is a purely mathematical process with an objectively correct answer—there are countless subjective choices in each specific audit. These choices can easily tip an audit towards a favorable view of the client. If there isn’t a market incentive or governmental backstop to prevent that, this will happen far more often than it should, and algorithmic auditing won’t be as effective.

A LACK OF TRUST

ORCAA’s audit makes it clear that ORCAA proposed more holistic examinations of HireVue’s algorithms, and one can speculate that O’Neil doesn’t appreciate how her report is being mischaracterized (she declined to comment for this story). Unfortunately, O’Neil has very little leverage to get HireVue to take bolder steps, or even to represent the report honestly rather than using it for PR spin.

HireVue has no obligation to do anything, and it can simply decline to provide more information, enable access to its data and models, or expand the scope of the audit to make it more meaningful. It is worth stating that the audit may have helped HireVue improve, for example by working through potential issues with the audio analysis that remains in its product. That said, the company appears to be more interested in favorable press than legitimate introspection.

Stories like this one explain why many people do not trust AI or the companies that use it, and why they are often right not to. The millions of job applicants who get sorted by algorithmic funnels like HireVue are justified in their suspicion that they are being taken for a ride. Other companies, like Yobs Technologies and Talview, still openly market pseudoscientific AI facial analysis for job interviews. While the worst outcomes are likely for people with disabilities or unusual accents, anyone with an atypical speaking style or even quirky mannerisms should be concerned. Without a change in market incentives or real government oversight, algorithmic audits alone won’t provide the accountability that we need when it comes to AI systems.

Alex C. Engler is a David M. Rubenstein Fellow at the Brookings Institution, where he studies the governance of artificial intelligence and emerging technology. He is also an adjunct professor and affiliated scholar at Georgetown University’s McCourt School of Public Policy, where he teaches courses on data science for policy analysis.
