Inside Intel’s billion-dollar transformation in the age of AI

As I walked up to the Intel visitor center in Santa Clara, California, a big group of South Korean teenagers ran from their bus and excitedly gathered round the big Intel sign for selfies and group shots. This is the kind of fandom you might expect to see at Apple or Google. But Intel?

Then I remembered that Intel is the company that put the “silicon” in Silicon Valley. Its processors and other technologies provided much of the under-the-hood power for the personal computer revolution. At 51 years old, Intel still has some star power.

But it’s also going through a period of profound change that’s reshaping the culture of the company and the way its products get made. As ever, Intel’s main products are the microprocessors that serve as the brains of desktop PCs, laptops and tablets, and servers. They’re wafers of silicon coated with millions or billions of transistors, each of which has an “on” and “off” state corresponding to the binary “ones and zeros” language of computers.

Since its founding in 1968, Intel has achieved a steady increase in processor power by jamming ever more transistors onto that piece of silicon. That steady pace bore out Intel cofounder Gordon Moore’s famous prediction, first made in 1965 and revised in 1975, that the number of transistors on a chip would double every two years. “Moore’s Law” held true for many years, but Intel’s transistor-cramming approach has reached a point of diminishing returns, analysts say.

Meanwhile, the demand for more processing power has never been greater. The rise of artificial intelligence, which analysts say is now being widely used in core business processes in almost every industry, is pushing the demand for computing power into overdrive. Neural networks require massive amounts of computing power, and they perform best when teams of computers share the work. And their applications go far beyond the PCs and servers that made Intel a behemoth in the first place.

“Whether it’s smart cities, whether it’s a retail store, whether it’s a factory, whether it’s a car, whether it’s a home, all of these things kind of look like computers today,” says Bob Swan, Intel’s CEO since January 2019. The tectonic shift of AI and Intel’s ambitions to expand have forced the company to change the designs and features of some of its chips. The company is building software, designing chips that can work together, and even looking outside its walls to acquire companies that can bring it up to speed in a changed world of computing. More transformation is sure to come as the industry relies on Intel to power the AI that will increasingly find its way into our business and personal lives.

The death of Moore’s Law

Today, it’s mainly big tech companies with data centers that are using AI for major parts of their business. Some of them, such as Amazon, Microsoft, and Google, also offer AI as a cloud service to enterprise customers. But AI is starting to spread to other large enterprises, which will train models to analyze and act upon huge bodies of input data.

This shift will require an incredible amount of computation. And AI models’ hunger for computing power is where the AI renaissance runs head-on into Moore’s Law.

For decades, Moore’s 1965 prediction has held a lot of meaning for the whole tech industry. Both hardware makers and software developers have traditionally linked their product road maps to the amount of power they can expect to get from next year’s CPUs. Moore’s Law kept everyone “dancing to the same music,” as one analyst puts it.

Moore’s Law also implied a promise that Intel would continue figuring out, year after year, how to deliver the expected gain in computing power in its chips. For most of its history, Intel fulfilled that promise by finding ways to wedge more transistors onto pieces of silicon, but it’s gotten harder.

“We’re running out of gas in the chip factories,” says Moor Insights & Strategy principal analyst Patrick Moorhead. “It’s getting harder and harder to make these massive chips, and make them economically.”

It’s still possible to squeeze larger numbers of transistors into silicon wafers, but it’s becoming more expensive and taking longer to do so, and the gains are certainly not enough to keep up with the requirements of the neural networks that computer scientists are building. For instance, the biggest known neural network in 2016 had 100 million parameters, while the largest so far in 2019 has 1.5 billion parameters, a 15-fold increase in just three years.

That’s a very different growth curve than in the previous computing paradigm, and it’s putting pressure on Intel to find ways to increase the processing power of its chips.
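To put rough numbers on that gap, here is a minimal back-of-the-envelope sketch in Python. It uses only the figures quoted above (100 million parameters in 2016, 1.5 billion in 2019) and the two-year doubling period of Moore’s Law. The function names are illustrative, and treating parameter count as a stand-in for computing demand is a simplifying assumption, not a claim from Intel or the analysts.

```python
import math


def moores_law_growth(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor if a quantity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def doubling_time_years(start: float, end: float, years: float) -> float:
    """Doubling time implied by growing from `start` to `end` over `years`,
    assuming smooth exponential growth (an assumption for illustration)."""
    return years * math.log(2) / math.log(end / start)


# Figures quoted in the article.
params_2016 = 100e6
params_2019 = 1.5e9
span_years = 3

model_growth = params_2019 / params_2016             # 15x
chip_growth = moores_law_growth(span_years)          # about 2.8x
model_doubling = doubling_time_years(params_2016, params_2019, span_years)

print(f"Largest model grew {model_growth:.0f}x in {span_years} years")
print(f"Moore's Law pace over the same span: {chip_growth:.1f}x")
print(f"Implied model doubling time: {model_doubling:.2f} years")
```

Under these assumptions, the largest models doubled in size roughly every nine months, while a chip on a perfect Moore’s Law schedule would have gained less than 3x over the same three years.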

However, Swan sees AI as more of an opportunity than a challenge. He acknowledges that data centers may be the primary Intel market to benefit, since they will need powerful chips for AI training and inference, but he believes that Intel has a growing opportunity to also sell AI-compatible chips for smaller devices, such as smart cameras and sensors. For these devices, it’s the small size and power efficiency, not the raw power of the chip, that makes all the difference.

“There’s three kinds of technologies that we think will continue to accelerate: One is AI, one is 5G, and then one is autonomous systems—things that move around that look like computers,” says Swan, Intel’s former CFO who took over as CEO when Brian Krzanich left after allegations of an extramarital affair with a staffer in 2018.

We’re sitting in a large, nondescript conference room at Intel’s headquarters. On the whiteboard at the front of the room, Swan draws out the two sides of Intel’s businesses. On the left side is the personal computer chip business—from which Intel gets about half of its revenue now. On the right is its data center business, which includes the emerging Internet of Things, autonomous car, and network equipment markets.

“We expand [into] this world where more and more data [is] required, which needs more processing, more storage, more retrieval, faster movement of data, analytics, and intelligence to make the data more relevant,” Swan says.

Rather than taking a 90-something-percent share of the $50 billion data center market, Swan is hoping to take a 25% market share of the larger $300 billion market that includes connected devices such as smart cameras, futuristic self-driving cars, and network gear. It’s a strategy that he says “starts with our core competencies, and requires us to invent in some ways, but also extends what we already do.” It might also be a way for Intel to bounce back from its failure to become a major provider of technology to the smartphone business, where Qualcomm has long played an Intel-like role. (Most recently, Intel gave up on its major investment in the market for smartphone modems and sold off the remains to Apple.)
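The arithmetic behind that trade-off is simple enough to sketch. The snippet below is only an illustration: it takes “90-something percent” as exactly 90% (an assumption, since the article gives no precise figure) and treats market size times share as the dollars in play, which ignores pricing, margins, and timing.

```python
def addressable_billions(market_size_billions: float, share: float) -> float:
    """Dollars in play (in billions) for a given market size and share."""
    return market_size_billions * share


# Assumption: "90-something percent" is rounded down to 90% for illustration.
data_center_only = addressable_billions(50, 0.90)    # about $45 billion
expanded_market = addressable_billions(300, 0.25)    # $75 billion

print(f"Dominant share of the $50B market: ${data_center_only:.0f}B")
print(f"Quarter share of the $300B market: ${expanded_market:.0f}B")
print(f"Ratio: {expanded_market / data_center_only:.2f}x")
```

On these assumed numbers, a quarter of the bigger market is worth roughly two-thirds more than near-total control of the smaller one.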

The Internet of Things market, which includes chips for robots, drones, cars, smart cameras, and other devices that move around, is expected to reach $2.1 trillion by 2023. And while Intel’s share of that market has been growing by double digits year-over-year, IoT still contributes only about 7% of Intel’s overall revenue today.

The data center business contributes 32%, the second-largest chunk behind the PC chip business, which contributes about half of total revenue. And it’s the data center that AI is impacting first and most. That’s why Intel has been altering the design of its most powerful CPU, the Xeon, to accommodate machine learning tasks. In April, it added a feature called “DL Boost” to its second-generation Xeon CPUs, which offers greater performance for neural nets with a negligible loss of accuracy. It’s also the reason that the company will next year begin selling two new chips that specialize in running large machine learning models.

The AI renaissance

By 2016, it had become clear that neural networks were going to be used for all kinds of applications, from product recommendation algorithms to natural language bots for customer service.

Like other chipmakers, Intel knew it would have to offer its large customers a chip whose hardware and software were purpose-built for AI, which could be used to train AI models and then draw inferences from huge pools of data.

At the time, Intel was lacking a chip that could do the former. The narrative in the industry was that Intel’s Xeon CPUs were very good at analyzing data, but that the GPUs made by Intel’s rival in AI, Nvidia, were better for training—an important perception that was impacting Intel’s business.

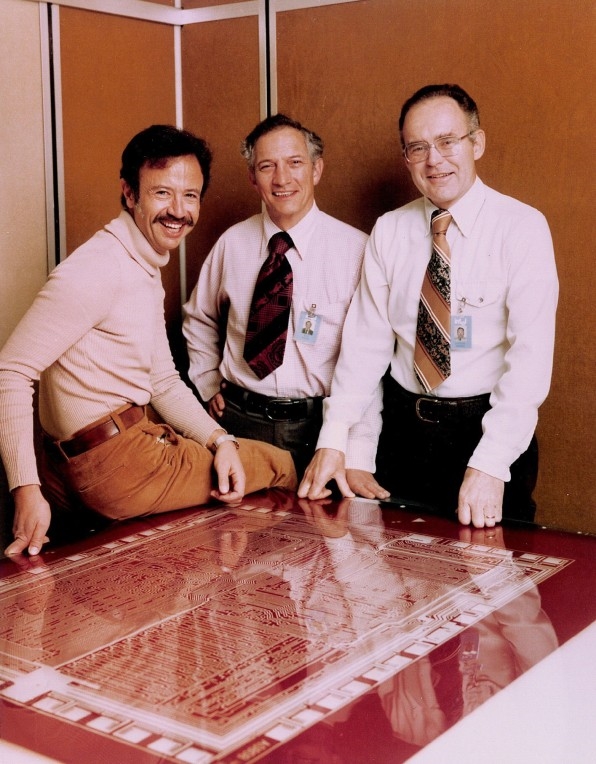

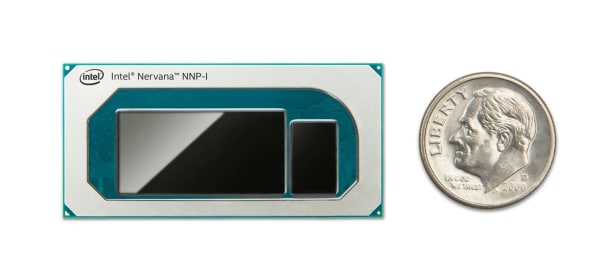

So in 2016, Intel went shopping and spent $400 million on a buzzy young company called Nervana that had already been working on a ripping fast chip architecture that was designed for training AI.

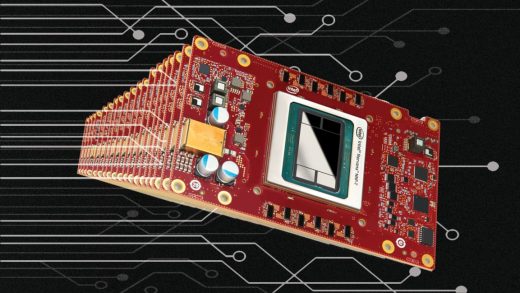

It’s been three years since the Nervana acquisition, and it’s looking like it was a smart move by Intel. At a November event in San Francisco, Intel announced two new Nervana Neural Network Processors—one designed for running neural network models that infer meaning from large bodies of data, the other for training the networks. Intel worked with Facebook and Baidu, two of its larger customers, to help validate the chip design.

Nervana wasn’t the only acquisition Intel made that year. In 2016, Intel also bought another company, called Movidius, that had been building tiny chips that could run computer vision models inside things such as drones or smart cameras. Intel’s sales of the Movidius chips aren’t huge, but they’ve been growing quickly, and they address the larger IoT market Swan’s excited about. At its San Francisco event, Intel also announced a new Movidius chip, which will be ready in the first half of 2020.

[Photo: courtesy of Intel Corporation]

Many of Intel’s customers do at least some of their AI computation on regular Intel CPUs inside servers in data centers. But it’s not so easy to link those CPUs together so they can tag-team the work that a neural network model needs. The Nervana chips, on the other hand, each contain multiple connections so that they easily work in tandem with other processors in the data center, Nervana CEO and founder Naveen Rao tells me.

“Now I can start taking my neural network and I can break it apart across multiple systems that are working together,” Rao says. “So we can have a whole rack [of servers], or four racks, working on one problem together.”
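What Rao describes is, in general terms, model parallelism: one network’s work is divided among several processors. The toy Python sketch below illustrates the idea on a single layer by splitting its weight matrix into shards, computing each shard’s outputs separately, and stitching the results together. It is not Nervana’s design or software; the function names are hypothetical, and the “workers” here run one after another in a single process rather than on separate chips.

```python
def matvec(weights, x):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def split_rows(weights, n_workers):
    """Divide the layer's rows into contiguous shards, at most one per worker."""
    size = -(-len(weights) // n_workers)  # ceiling division
    return [weights[i:i + size] for i in range(0, len(weights), size)]


def sharded_matvec(weights, x, n_workers):
    """Compute the same product shard by shard, then join the pieces.

    In a real system each shard would live on a different chip and the
    partial results would travel over the links between them.
    """
    partials = [matvec(shard, x) for shard in split_rows(weights, n_workers)]
    return [y for part in partials for y in part]


# A tiny layer with four outputs and two inputs.
weights = [[1, 2], [3, 4], [5, 6], [7, 8]]
x = [1, 1]

single = matvec(weights, x)
sharded = sharded_matvec(weights, x, n_workers=2)
print(single, sharded, single == sharded)
```

The sharded result matches the single-machine result, which is the point: the split changes where the work happens, not the answer. In practice the hard part is moving the partial results between chips quickly, which is why the number of connections on each chip matters.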

[Photo: Walden Kirsch/Intel Corporation]

In 2019, Intel expects to see $3.5 billion in revenue from its AI-related products. Right now, only a handful of Intel customers are using the new Nervana chips, but they’re likely to reach a far wider user base next year.

Transforming Intel, from the chip on up

The Nervana chips represent the evolution of a long-held Intel belief that a single piece of silicon, a CPU, could handle whatever computing tasks a PC or server needed to do. This widespread belief began to change with the gaming revolution, which demanded the extreme computational muscle needed for displaying complex graphics on a screen. It made sense to offload that work to a graphics processing unit, a GPU, so that the CPU wouldn’t get bogged down with it. Intel began integrating its own GPUs with its CPUs years ago, and next year it will release a free-standing GPU for the first time, Swan tells me.

That same thinking also applies to AI models. A certain number of AI processes can be handled by the CPU within a data center server, but as the work scales up, it’s more efficient to offload it to another specialized chip. Intel has been investing in designing new chips that bundle together a CPU and a number of specialized accelerator chips in a way that matches the power and workload needs of the customer.

“When you’re building a chip, you want to put a system together that solves a problem, and that system [often] requires more than a CPU,” Swan says.

In addition, Intel now relies far more on software to drive its processors to higher performance and better power efficiency. This has shifted the balance of power within the organization. According to one analyst, software development at Intel is now “an equal citizen” with hardware development.

In some cases, Intel no longer manufactures all its chips on its own, an epoch-shifting departure from the company’s historical practice. Today, if chip designers call for a chip that some other company might fabricate better or more efficiently than Intel, it’s acceptable for the job to be outsourced. The new Nervana chip for training, for example, is manufactured by the semiconductor fabricator TSMC.

Intel has outsourced some chip manufacturing for logistical and economic reasons. Because of capacity limitations in its most advanced chip fabrication processes, many of its customers have been left waiting for their orders of new Intel Xeon CPUs. So Intel outsourced the production of some of its other chips to other manufacturers. Intel sent a letter to its customers earlier this year to apologize for the delay and lay out its plans for catching up.

All these changes are challenging long-held beliefs within Intel, shifting the company’s priorities, and rebalancing old power structures.

In the midst of this transformation, Intel’s business is looking pretty good. Its traditional business of selling chips for personal computers is down 25% from five years ago, but sales of Xeon processors to data centers are “rocking and rolling,” as analyst Mike Feibus says.

Some of Intel’s customers are already using the Xeon processors to run AI models. If those workloads grow, they may consider adding on the new Nervana specialized chips. Intel expects the first customers for these chips to be “hyperscalers,” or large companies that operate massive data centers—the Googles, Microsofts, and Facebooks of the world.

It’s an old story that Intel missed out on the mobile revolution by ceding the smartphone processor market to Qualcomm. But the fact is that mobile devices have become vending machines for services delivered to your phone via the cloud’s data centers. So when you stream that video to your tablet, it’s likely an Intel chip is helping serve it to you. The coming of 5G might make it possible to run real-time services such as gaming from the cloud. A future pair of smart glasses might be able to instantly identify objects using a lightning-fast connection to an algorithm that’s running in a data center.

All of that adds up to a very different era than when the technological world revolved around PCs with Intel inside. But as AI models grow ever more complex and versatile, Intel has a shot at being the company best equipped to power them—just as it has powered our computers for almost a half-century.
