Integrating Container Deployment Strategies with Flagger

The fast pace of modern society means we face a multitude of questions that must be answered either instantly or within a sensible time frame. With the right answer, the average person can make the right decision and get on with their day.

Should my coffee be iced or hot? Shall I listen to music on my way to work or an audiobook? Chicken or fish for lunch? Do I need to invest in the digital tools that will help my company move forward in the cloud and deliver the required goals for success? OK, the first few were just what most people think about, but the final question is arguably the one that keeps them up at night.

The simple answer is yes, but there are many reasons why. Digital tools are ubiquitous across all business sectors, but it is vital that companies not only integrate the right tools into their business optimization strategies but also understand why these integrations are important.

With that in mind, this blog post looks at why containers and microservices should be deployed in your architecture and then introduces you to Flagger.

What are Containers and Microservices?

The term “Container” has been in common software parlance for at least a decade and is often seen as a solution to the problem of moving code from one compute environment to another. On a very simple level, containers are packages of your software that include everything they need to run in a dedicated environment – this includes code, dependencies, libraries, binaries and more.

Microservices, on the other hand, are an architecture that splits an application into multiple services, each of which performs fine-grained functions that are part of said application. In most cases, each of these microservices will have a different logical function for the application.

The question that many companies end up asking is how best to package these microservices and then run them in an isolated environment, such as the cloud.

This is where the container comes in. Docker, for example, is a container runtime that provides resource and network isolation to the application running inside a Docker container. Frameworks like Kubernetes and Swarm then orchestrate multiple containers in enterprise environments.

It is worth noting that Kubernetes is an open-source container orchestration platform that lets you deploy, scale, and manage all containers. Originally designed by Google and released in 2014, it allows you to automate the deployment of your containerized microservices.
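To make this concrete, here is a minimal sketch of a Kubernetes Deployment that runs two replicas of a single containerized microservice. The name `my-app`, the `test` namespace, the image reference and the port are placeholders chosen for illustration, not values from any real project.

```yaml
# Minimal Deployment sketch; every name and value below is a placeholder.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app            # hypothetical microservice name
  namespace: test         # hypothetical namespace
spec:
  replicas: 2             # Kubernetes keeps two pods of this service running
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0.0   # placeholder image
          ports:
            - containerPort: 9898                    # placeholder port
```

Changing the image tag and re-applying the manifest triggers a standard rolling update, which is the baseline behavior that progressive delivery tools build on.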

With that in mind, let’s take a few minutes to consider application deployment, delivery patterns and progressive delivery.

Progressive Delivery

Progressive delivery is an umbrella term for advanced deployment patterns that include canaries, feature flags and A/B testing. These established techniques are used to reduce the risk of introducing a new software version in production by giving app developers and SRE teams fine-grained control over what can be considered the blast radius.

For those new to these techniques, the term “canary” is a throwback to old mining techniques where a bird would be released into a physical mine environment to test for poisonous gases. Basically, if the canary died, the mine was unsafe, and a different location or tunnel would be utilized.

With progressive delivery, new versions are deployed to a subset of users and evaluated in terms of correctness and performance. They are then either rolled out to all users or rolled back if they do not meet key metrics. Unlike continuous delivery, this gives the developer some level of control over what gets deployed as an initial test and is an invaluable part of understanding what works and, importantly, what doesn’t.

The inherent cruelty of sending an unsuspecting bird into a dangerous work zone aside, a tool such as Flagger can help the DevOps team quickly implement progressive delivery standards and application-specific deployment strategies.

What is Flagger?

Flagger is an Apache 2.0 licensed open-source tool. Initially developed in 2018 at Weaveworks by a developer called Stefan Prodan, the progressive delivery operator became a Cloud Native Computing Foundation project in 2020.

The underlying intent for Flagger was to give developers confidence in automating application releases with progressive delivery techniques. As a result, Flagger can run automated application analysis, testing, promotion and rollback for the deployment strategies.

From a configuration standpoint, the tool can send notifications to Slack, Microsoft Teams, Discord, or Rocket.Chat. Flagger posts a message when a deployment has been initialized, when a new revision has been detected, and when a canary analysis has failed or succeeded.

Flagger runs as a Kubernetes operator and can be configured to automate the release process for Kubernetes-based workloads using the canary technique described above.

As part of its automated application analysis, the tool can validate service level objectives (SLOs) like availability, error rate percentage, average response time and any other objective based on app-specific metrics. For instance, if a drop in performance is noticed during the SLO analysis, the release will be automatically rolled back with minimum impact to end-users.
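As a rough illustration, SLO checks of this kind are declared in the `analysis` section of a Flagger Canary resource. The fragment below is a sketch that uses the two built-in metric names from the Flagger documentation (`request-success-rate` and `request-duration`); the threshold values are assumptions picked for the example and should be tuned to your own objectives.

```yaml
# Fragment of a Canary resource (spec.analysis); threshold values are illustrative.
analysis:
  interval: 1m          # how often the checks are evaluated
  threshold: 5          # number of failed checks tolerated before rollback
  metrics:
    - name: request-success-rate   # built-in check: share of successful HTTP requests
      thresholdRange:
        min: 99                    # fail the check if the success rate drops below 99%
      interval: 1m
    - name: request-duration       # built-in check: request latency in milliseconds
      thresholdRange:
        max: 500                   # fail the check if latency exceeds 500 ms
      interval: 30s
```

Once the number of failed checks reaches `threshold`, the release is rolled back, which is what keeps the impact on end-users small.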

In addition to its built-in HTTP request metrics, Flagger can query metrics analysis providers such as Prometheus, Datadog, AWS CloudWatch, and New Relic.
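For app-specific objectives, Flagger lets you define a custom query against one of these providers through a `MetricTemplate` resource. The sketch below assumes a Prometheus server at a placeholder address and a hypothetical `http_requests_total` counter with `namespace`, `pod` and `status` labels; your own metric and label names will differ.

```yaml
# Sketch of a custom metric; address, metric name and labels are assumptions.
apiVersion: flagger.app/v1beta1
kind: MetricTemplate
metadata:
  name: error-rate
  namespace: test
spec:
  provider:
    type: prometheus
    address: http://prometheus.monitoring:9090   # placeholder address
  # Flagger fills in {{ namespace }}, {{ target }} and {{ interval }} at query time.
  query: |
    100 * sum(rate(http_requests_total{namespace="{{ namespace }}", pod=~"{{ target }}-.*", status=~"5.."}[{{ interval }}]))
    /
    sum(rate(http_requests_total{namespace="{{ namespace }}", pod=~"{{ target }}-.*"}[{{ interval }}]))
---
# Fragment of a Canary resource that references the template above.
analysis:
  metrics:
    - name: "error rate"
      templateRef:
        name: error-rate
        namespace: test
      thresholdRange:
        max: 1            # fail the check if more than 1% of requests error
      interval: 1m
```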

Taking the above into account, we can now turn our attention to the recognized deployment strategies that Flagger supports.

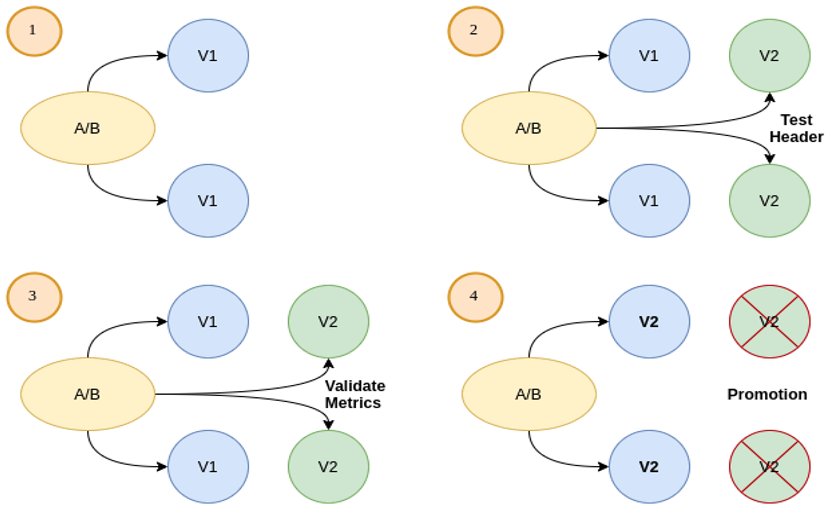

Blue/Green

In this deployment strategy, two versions run alongside one another: blue (the current version) and green (the new canary version). Flagger can orchestrate blue/green style deployments with Kubernetes L4 networking, and by incorporating something like Istio (a leading service mesh) you have the option to mirror traffic between the blue and green versions.

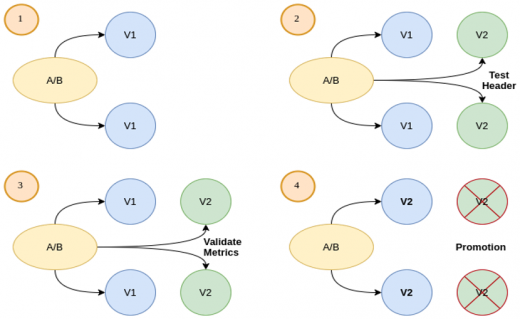

The visual below shows the workflow:

Traffic Switching and Mirroring

Flagger’s canary resource allows us to configure the analysis with a specified number of iterations and an interval between them. For example, if the interval is one minute and there are 10 iterations, then the analysis runs for 10 minutes.

With this configuration, Flagger will run conformance and load tests on the canary pods for ten minutes. If the metrics analysis succeeds, live traffic will be switched from the old version to the new one when the canary is promoted.

After the analysis finishes, the traffic is routed to the canary (green) before triggering the primary (blue) rolling update. This action then ensures a smooth transition to the new version, avoiding dropping in-flight requests during the Kubernetes deployment rollout.
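The blue/green flow described above might be configured along the following lines. This is a sketch rather than a drop-in manifest: the workload name `my-app`, the `test` namespace, the port, and the load-test command are placeholders, and the webhook assumes Flagger's load tester is installed in the same namespace.

```yaml
# Blue/green sketch; names, namespace, port and URLs are placeholders.
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: my-app
  namespace: test
spec:
  provider: kubernetes        # plain Kubernetes L4 networking, no service mesh
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  service:
    port: 9898
  analysis:
    interval: 1m              # one check per minute...
    iterations: 10            # ...for 10 iterations, so about 10 minutes in total
    threshold: 2              # failed checks tolerated before rollback
    # mirror: true            # optional, Istio only: mirror live traffic to green
    webhooks:
      - name: load-test       # generates traffic against the canary pods
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          type: cmd
          cmd: "hey -z 1m -q 10 -c 2 http://my-app-canary.test:9898/"
```

With these values the analysis takes roughly `interval * iterations` (10 minutes) on success, while a failing release is rolled back after about `interval * threshold` (2 minutes).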

Supported mesh providers: Kubernetes CNI, Istio, Linkerd, App Mesh, Contour, Gloo, NGINX, Skipper, Traefik

Canary

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators like HTTP request success rate, average request duration, and pod health. Based on analysis of the KPIs, a canary is promoted or aborted.
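A canary release of this kind could be declared roughly as follows. Treat it as a sketch under stated assumptions: the workload name, namespace and port are placeholders, Istio is assumed as the mesh provider, and the weights and thresholds are example values.

```yaml
# Canary sketch; names, namespace, port and numeric values are placeholders.
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: my-app
  namespace: test
spec:
  provider: istio             # assumed mesh provider
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  service:
    port: 9898
  analysis:
    interval: 1m              # time between traffic shifts and checks
    threshold: 10             # failed checks tolerated before the canary is aborted
    maxWeight: 50             # stop shifting once the canary receives 50% of traffic
    stepWeight: 5             # move 5% more traffic to the canary at each step
    metrics:
      - name: request-success-rate
        thresholdRange:
          min: 99
        interval: 1m
```

According to the Flagger documentation, the minimum time to validate and promote a canary is `interval * (maxWeight / stepWeight)`, which works out to 1m * (50 / 5) = 10 minutes for the values above.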

The canary analysis runs at a fixed interval. If every check passes, it continues for the configured number of minutes, validating the HTTP metrics and webhooks at each interval (every minute, in a typical setup).

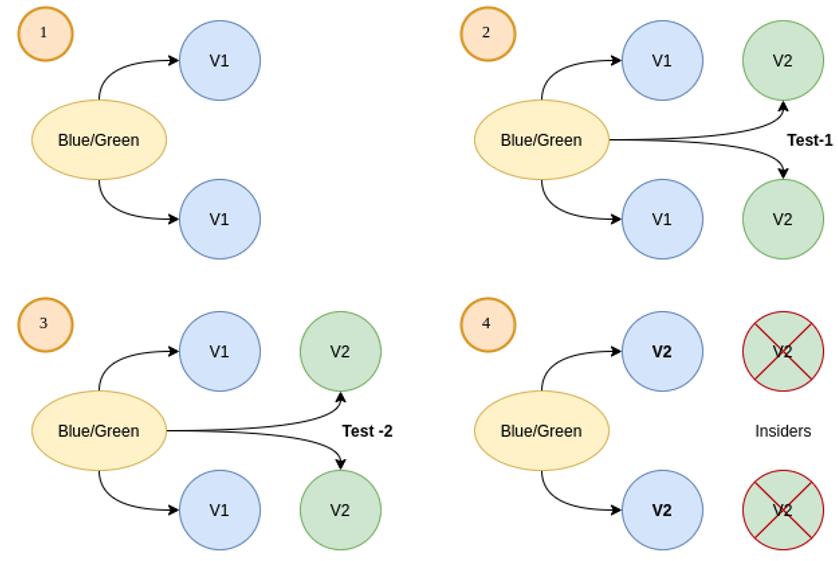

This can be seen in the visual below:

In this configuration, stepWeights (each a percentage from 0 to 100) defines the ordered array of weights to be used during canary promotion.

When stepWeightPromotion (0-100%) is specified, the promotion phase happens in stages. Traffic is routed back to the primary pods in a progressive manner and the primary weight is increased until it reaches 100%.
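As a small sketch, the two settings sit side by side in the `analysis` section of a Canary resource. The weights below are example values, and the comment on `stepWeightPromotion` reflects an assumption about how the increment is applied, so check it against the Flagger documentation for your version.

```yaml
# Fragment of a Canary resource (spec.analysis); all values are illustrative.
analysis:
  interval: 1m
  threshold: 10
  # Canary traffic share at each analysis step, in order: 1%, 2%, 10%, then 80%.
  stepWeights: [1, 2, 10, 80]
  # Assumed behavior: after a successful analysis, the primary weight grows
  # by this percentage per interval until it reaches 100%.
  stepWeightPromotion: 25
```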

Supported mesh providers: Istio, Linkerd, App Mesh, Contour, Gloo, NGINX, Skipper, Traefik

A/B Testing

In this strategy, two versions of the application code are deployed simultaneously. Frontend applications that require session affinity should use HTTP headers or cookies with match conditions. This ensures that a given set of users stays on the same version for the whole duration of the canary analysis.

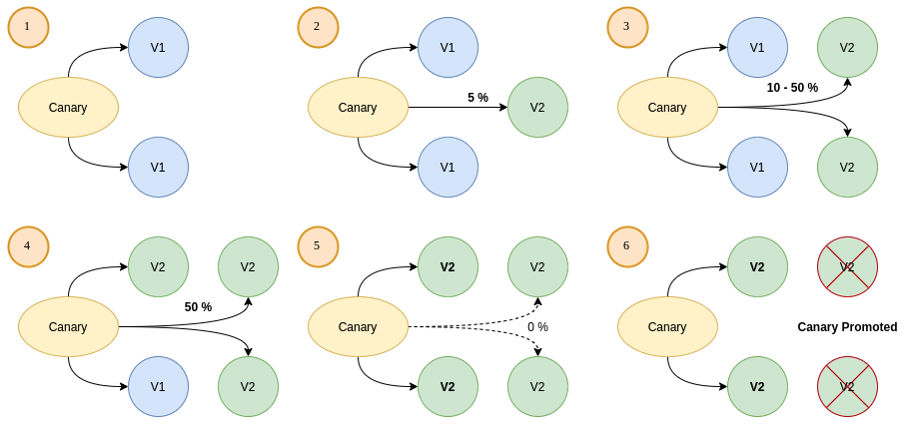

You can see this workflow below:

HTTP Headers and Cookies Traffic Routing

As you can see, traffic will be routed to version A or version B based on HTTP headers or cookies (OSI Layer 7). In this way, the routing provides full control over traffic distribution.

By using Flagger, we can enable A/B testing by specifying the HTTP match conditions and the number of iterations in the analysis. The specified configuration runs the analysis for the defined number of minutes, targeting only requests that match those headers or cookies.

Sample headers include:

    headers:
      x-canary:
        regex: ".*insider.*"

    headers:
      cookie:
        regex: "^(.*?;)?(canary=always)(;.*)?$"
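To show where such match conditions live, here is a sketch of the `analysis` section of a Canary resource set up for A/B testing. The interval, iteration count and threshold are example values; the two match entries reuse the sample headers above.

```yaml
# Fragment of a Canary resource (spec.analysis) for A/B testing; values are illustrative.
analysis:
  interval: 1m          # one check per minute
  iterations: 10        # 10 checks, so the analysis runs for about 10 minutes
  threshold: 2          # failed checks tolerated before rollback
  match:
    # Route requests carrying an x-canary header that contains "insider"...
    - headers:
        x-canary:
          regex: ".*insider.*"
    # ...or requests carrying a "canary=always" cookie.
    - headers:
        cookie:
          regex: "^(.*?;)?(canary=always)(;.*)?$"
```

Requests that match either condition are sent to the new version, while all other traffic stays on the current one.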

Supported mesh providers: Istio, App Mesh, Contour, NGINX

Alerting and Monitoring

As we noted above, Flagger can be configured to send alerts to various chat platforms. You can define a global alert provider at install time or configure alerts on a per-canary basis. Integrations include Slack, Microsoft Teams, and Prometheus Alertmanager.
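A per-canary alert might look like the sketch below, which defines an `AlertProvider` for Slack and references it from a Canary's analysis. The provider name, namespace, channel and webhook address are placeholders; in practice the webhook URL would normally come from a Kubernetes secret rather than being written inline.

```yaml
# Alerting sketch; provider name, channel and webhook address are placeholders.
apiVersion: flagger.app/v1beta1
kind: AlertProvider
metadata:
  name: on-call
  namespace: flagger
spec:
  type: slack
  channel: on-call-alerts
  username: flagger
  address: https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK   # placeholder
---
# Fragment of a Canary resource that sends its alerts to the provider above.
analysis:
  alerts:
    - name: "on-call Slack"
      severity: error          # only alert on failures
      providerRef:
        name: on-call
        namespace: flagger
```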

Additionally, the tool comes with a Grafana dashboard made for canary analysis. Canary errors and latency spikes are recorded as Kubernetes events and logged by Flagger in JSON format.

Finally, Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and the destination weight values.
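One way to use these metrics is a Prometheus alerting rule that fires when a canary fails. The sketch below assumes the `flagger_canary_status` gauge and its `name` and `namespace` labels as described in the Flagger documentation, where a value above 1 indicates a failed canary; verify the metric names against the version you run.

```yaml
# Prometheus rule sketch; metric and label names are assumed from the Flagger docs.
groups:
  - name: flagger
    rules:
      - alert: CanaryRollback
        expr: flagger_canary_status > 1     # assumed: 0 running, 1 successful, 2 failed
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Canary failed"
          description: "Workload {{ $labels.name }} in namespace {{ $labels.namespace }}"
```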

Conclusion

As more companies ramp up their investment in containers and microservices, there is a clear need to use the best tools for the job.

Flagger would certainly fall into that category and the open-source nature of this progressive delivery operator can help developers understand different container deployment patterns and the scenarios in which they are best utilized. In that way, decision makers can then implement the specific actions which are suited best to the application and business strategy.

Business & Finance Articles on Business 2 Community
