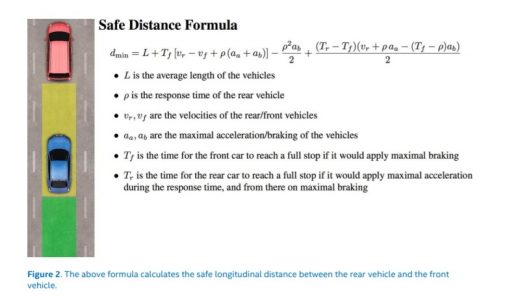

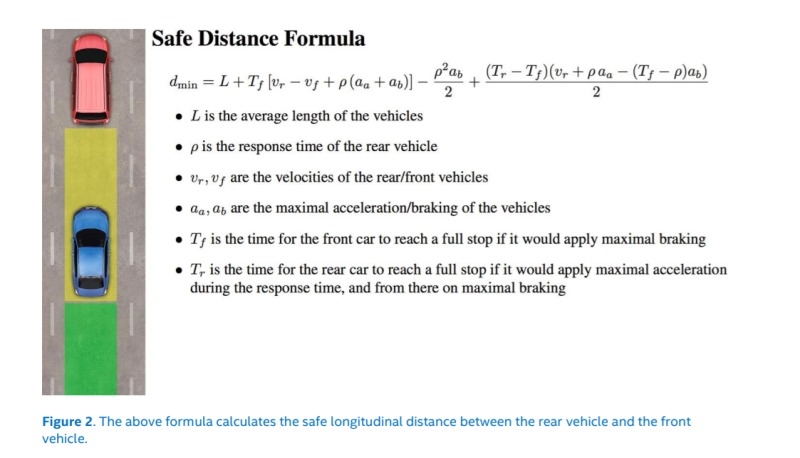

Intel proposes a mathematical formula for self-driving car safety

As autonomous vehicles (AVs) become part of the landscape, there are more questions than ever about their safety and about how to determine responsibility when they get into an accident. With so many companies (Alphabet, Uber, GM, Tesla and Ford, to name a few) working on different technology, there’s also a question of how to establish standards at any level. Now Amnon Shashua, CEO of Mobileye (recently acquired by Intel), is proposing a model called Responsibility Sensitive Safety (RSS) to “prove” the safety of autonomous vehicles.

In the proposal’s own words:

> In practice, the AV needs to know two things:
>
> - Safe State: This is a state where there is no risk that the AV will cause an accident, even if other vehicles take unpredictable or reckless actions.
>
> - Default Emergency Policy: This is a concept that defines the most aggressive evasive action that an AV can take to maintain or return to a Safe State.
>
> We coin the term Cautious Command to represent the complete set of commands that maintains a Safe State. RSS sets a hard rule that the AV will never make a command outside of the set of Cautious Commands. This ensures that the planning module itself will never cause an accident.
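To make the Safe State and Cautious Command ideas concrete, here is a minimal Python sketch for one case: an AV following another car in a single lane. It is a toy model built on our own assumptions, not Mobileye’s implementation. A command is just an acceleration held for a fixed step `DT`, the lead car is assumed to be able to brake at up to `A_LEAD_BRAKE`, and the Default Emergency Policy is assumed to be braking at `A_AV_BRAKE`. A command counts as cautious if, after holding it for `DT` and then braking, the AV would still stop behind the point where the lead car stops in its worst case. All names and numbers (`State`, `is_cautious`, `choose_command`, the constants) are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical toy parameters (illustrative only, not values from the RSS paper).
A_AV_BRAKE = 4.0    # m/s^2, braking the AV's emergency policy is assumed to guarantee
A_LEAD_BRAKE = 8.0  # m/s^2, hardest braking assumed for the lead car (>= A_AV_BRAKE)
DT = 0.5            # s, how long a command is held before the AV can react again


@dataclass
class State:
    gap: float     # m, distance from the AV's front bumper to the lead car's rear bumper
    v_av: float    # m/s, AV speed
    v_lead: float  # m/s, lead car speed


def travel(v: float, a: float, dt: float) -> tuple[float, float]:
    """Distance covered and final speed after holding acceleration a for dt,
    never reversing (speed is clamped at zero)."""
    if a < 0 and v + a * dt < 0:
        return v * v / (2.0 * -a), 0.0  # the car comes to a stop before dt elapses
    return v * dt + 0.5 * a * dt * dt, v + a * dt


def stopping_distance(v: float, a_brake: float) -> float:
    """Distance needed to stop from speed v at constant deceleration a_brake."""
    return v * v / (2.0 * a_brake)


def is_cautious(s: State, a_cmd: float) -> bool:
    """Toy check: hold a_cmd for DT, then brake at A_AV_BRAKE. The command is
    cautious if the AV still stops no farther ahead than the lead car would
    if it started braking as hard as possible right now."""
    moved, v_after = travel(s.v_av, a_cmd, DT)
    av_stop = moved + stopping_distance(v_after, A_AV_BRAKE)
    lead_stop = s.gap + stopping_distance(s.v_lead, A_LEAD_BRAKE)
    return av_stop <= lead_stop


def choose_command(s: State, desired: float, candidates: list[float]) -> float:
    """Pick the cautious candidate closest to the planner's desired acceleration;
    if none is cautious, fall back to the assumed emergency policy (full braking)."""
    cautious = [a for a in candidates if is_cautious(s, a)]
    if not cautious:
        return -A_AV_BRAKE
    return min(cautious, key=lambda a: abs(a - desired))


state = State(gap=30.0, v_av=20.0, v_lead=20.0)
print(choose_command(state, desired=2.0, candidates=[-4.0, -2.0, 0.0, 2.0]))  # -2.0
```

With a 30 m gap at 20 m/s, the sketch rejects accelerating or holding speed and picks the gentle brake closest to what the planner wanted; if no candidate passed, it would return the emergency-braking command instead. The planner stays free to choose, but only from the cautious set, which mirrors the “hard rule” described above.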

The paper backing this model tries to establish baseline equations (the setup pictured above is just one of the situations it covers) that self-driving cars can follow to make sure they behave safely. These cover situations such as a pedestrian appearing from behind another car, or making sure the car isn’t following another vehicle too closely. The point is to make sure that autonomous vehicles don’t cause collisions, even if they can’t prevent every collision, and to make it possible to establish blame when a crash happens without a human driver.
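The following-distance rule is the easiest part of the model to write down. Below is a minimal Python sketch of the paper’s safe longitudinal distance between a rear car and the car ahead, as we understand the formula. The function and parameter names are our own, and the numbers in the example (0.5 s response time, 2 m/s² acceleration, 4 m/s² and 8 m/s² braking) are assumptions chosen for illustration, not values the paper prescribes.

```python
def safe_longitudinal_distance(
    v_rear: float,
    v_front: float,
    rho: float,
    a_accel_max: float,
    a_brake_min: float,
    a_brake_max: float,
) -> float:
    """Minimum safe gap (m) for a rear car following a front car in the same lane.

    Worst case assumed by the formula: during its response time rho the rear car
    accelerates at up to a_accel_max, then brakes at only a_brake_min, while the
    front car brakes at up to a_brake_max. Speeds in m/s, accelerations in m/s^2.
    """
    # Distance the rear car covers during the response time while accelerating.
    response = v_rear * rho + 0.5 * a_accel_max * rho ** 2
    # Distance the rear car then needs to stop from its post-response speed.
    v_after = v_rear + rho * a_accel_max
    rear_braking = v_after ** 2 / (2 * a_brake_min)
    # Distance the front car covers while braking as hard as it can.
    front_braking = v_front ** 2 / (2 * a_brake_max)
    # A negative value means any non-negative gap is already safe, so clamp at 0.
    return max(0.0, response + rear_braking - front_braking)


# Illustrative numbers only: both cars at 20 m/s (72 km/h).
print(safe_longitudinal_distance(20.0, 20.0, 0.5, 2.0, 4.0, 8.0))  # 40.375
```

If the actual gap is smaller than this value, the rear car is not in a Safe State with respect to the car ahead. When the front car is much faster (say 30 m/s ahead of a car doing 10 m/s), the same call returns 0.0, since the front car will always stop well beyond where the rear car can.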
