Is Mixed Reality The Future Of Computing?

Alex Kipman knows about hardware. Since joining Microsoft 16 years ago, he has been the primary inventor on more than 100 patents, including Xbox Kinect’s pioneering motion-sensing technology that paved the way for some of the features in his latest creation, the holographic 3D headset called the HoloLens.

But today, sitting in his office in Microsoft’s headquarters in Redmond, Washington, Kipman is not talking hardware. He’s discussing the relationship between humans and machines from a broader philosophical perspective. Whether we interact with machines through screens or stuff that sits on our heads, to him, it’s all “just a moment in time.”

The Brazilian-born Kipman, whose title is technical fellow in Microsoft’s Windows and Devices Group, enthusiastically explains that the key benefit of technology is its ability to displace time and space. He brings up “mixed reality” (MR), Microsoft’s term for tech that blends real-world and computer-generated imagery and that will someday, according to Kipman, seamlessly merge augmented and virtual reality. One of the most exciting features of MR, he says, is its potential to unleash “displacement superpowers” onto the real world.

We place real value on the feeling of physically sharing a space with another person, which is why I took a 10-hour flight to have a face-to-face conversation with Kipman. “But if you could have this type of interaction without actually being here,” he says, “life suddenly becomes much more interesting.

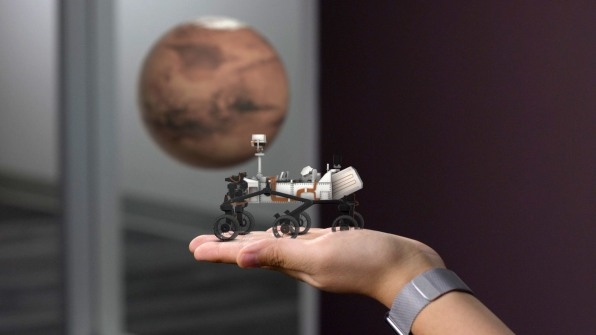

“My daughter can hang out with her cousins in Brazil every weekend, and my employees don’t need to travel around the globe to get their job done,” he continues. “With the advent of artificial intelligence, we could still be talking, but I’m not even here anymore. One day you and I are going to be having this conversation, you’ll be sitting on Mars, and I’ll have been dead for 100 years. Our job as technologists is to accelerate that future and ask how we do that.”

Microsoft is betting on mixed reality to help launch us into that future, which brings us back to hardware. The availability of the right device at the right price will be one factor in whether consumers adopt MR (though devices alone aren’t likely to jump-start an MR revolution, if the slow sales of VR systems are any indication). The HoloLens is the only self-contained holographic computer on the market (unlike the Oculus Rift or HTC Vive, it doesn’t need to be tethered by cables to an external device), but the $3,000 smart glasses have served more as a proof of concept than as a consumer product.

Now Microsoft wants to change that. This fall, the company is launching Windows Mixed Reality headsets, its first major attempt to sell the concept to the general public. Though still closer to virtual reality than to a perfect AR/VR hybrid, the new headsets repackage some of the HoloLens’s main features, such as its advanced tracking and mapping capabilities, at a more affordable $300 to $500. They will be available in different forms from a number of hardware partners, including Dell, HP, and Samsung, and will let users create 3D spaces that they can personalize with media, apps, browser windows, and more.

As Microsoft sees it, introducing a platform that lets anyone build their own digital world is the first step toward that leap into the world of tomorrow. “If you believe, as we do, that mixed reality is the inevitable next secular trend of computing, it’s going to involve productivity, creativity, education, and the entire spectrum of entertainment, from casual to hardcore gaming,” Kipman says.

Perfecting Mixed Reality

Kipman is not the only one who’s bullish about mixed reality. The California-based startup Avegant is working on a platform that presents detailed 3D images by stacking multiple focal planes, which the company calls “light field” technology. “Applications are endless,” says Avegant CEO Joerg Tewes, “from designers and engineers directly manipulating 3D models with their hands, to medical professors illustrating different heart conditions through a lifelike model of the human heart for their students. At home, consumers might find themselves surrounded by virtual shelves full of their favorite products. Mixed reality enables people to interact directly with their ideas rather than screens or keyboards.”

Yet to do all of that, mixed reality devices need to display virtual imagery that looks like a plausible part of the real world and behaves consistently with it. According to Professor Gregory Welch, a computer scientist at the University of Central Florida, most of the technology developed so far has yet to achieve that balance. “MR is particularly difficult in that respect because there is no hiding the imperfections of the virtual, nor the awesomeness of the real,” he says via email.

He and his research collaborators found that in some cases, the relatively wide view of the real world that the HoloLens leaves visible could harm that all-important sense of presence. Where a healthy human can see approximately 210 degrees, the HoloLens display augments only the central 30 degrees or so of your field of view. In the experiments that Welch and his team conducted, this disconnect between the real and augmented landscape diminished their subjects’ sense of immersion and presence.

“That means that if you are looking at a virtual human in front of you (as we did in our experiment), you will only see a portion of them floating in space in front of you,” Welch says. “You have to move your head up and down to ‘paint’ a perception of them, as you cannot see the entire person at once, unless you look at them from far away (so they appear smaller). The problem appears to be that your brain is constantly seeing the ‘normal’ world all around, and that apparently ‘overrides’ many perceptions or behaviors you might otherwise have.”

Welch further explains that in the demonstrations we see today with HoloLens or Apple ARKit, for example, virtual objects can appear to be anchored to a flat surface, but beyond their basic shape and visual appearance, the software usually doesn’t account for many important physical characteristics of the object, such as weight, center of mass, and behavior, or of the surface it sits on, let alone the real-world activities occurring around it. “If I somehow roll a pair of dice on a virtual table, it will likely not ‘fall’ when it reaches the edge, and surely won’t bounce according to its type and the material of the floor,” he explains.
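To make Welch’s point concrete, here is a minimal Python sketch of what “knowing the surface” would buy, assuming the headset has already measured a tabletop’s horizontal extent and height plus the floor beneath it. Everything here is hypothetical and for illustration only: the names `Surface`, `Die`, `step`, and `simulate`, the one-dimensional table, and the restitution values are assumptions, not any headset’s or ARKit’s API. The die rests while it is over the table, falls under gravity once it passes the edge, and bounces off the floor according to that floor’s restitution coefficient.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, negative because it points down


@dataclass
class Surface:
    """Hypothetical horizontal surface recovered from a scan of the room."""
    x_min: float        # left edge, metres
    x_max: float        # right edge, metres
    height: float       # metres above the floor
    restitution: float  # fraction of vertical speed kept after a bounce (assumed)


@dataclass
class Die:
    x: float         # horizontal position, metres
    y: float         # height of the die's bottom face, metres
    vx: float        # horizontal velocity, m/s (no friction modelled)
    vy: float = 0.0  # vertical velocity, m/s


def step(die: Die, table: Surface, floor: Surface, dt: float = 0.01) -> None:
    """Advance the die by one time step of dt seconds."""
    die.x += die.vx * dt
    over_table = table.x_min <= die.x <= table.x_max
    # The table supports the die only while the die is over it and on top of it.
    support = table if over_table and die.y >= table.height - 1e-9 else floor
    if die.y > support.height or die.vy > 0:
        # Airborne: gravity accelerates the die downward.
        die.vy += GRAVITY * dt
        die.y += die.vy * dt
        if die.y <= support.height:
            # Contact: clamp to the surface and bounce with its restitution.
            die.y = support.height
            die.vy = -die.vy * support.restitution
    else:
        # Resting on the supporting surface.
        die.y = support.height
        die.vy = 0.0


def simulate(die: Die, table: Surface, floor: Surface,
             seconds: float = 3.0, dt: float = 0.01) -> list:
    """Run the simulation and return the die's (x, y) after every step."""
    history = []
    for _ in range(round(seconds / dt)):
        step(die, table, floor, dt)
        history.append((die.x, die.y))
    return history
```

For example, a die sliding at 1 m/s across a 1 m table 0.75 m high stays at table height until it crosses the edge, then drops and bounces lower each time on a floor with restitution 0.5. A real engine would also model friction, spin, and full 3D collisions; the sketch keeps only the edge and bounce behavior Welch mentions.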

In a paper Welch coauthored with Professor Jeremy Bailenson, director of Stanford University’s Virtual Human Interaction Lab (VHIL), the two outline results of their research showing that virtual content is much more impactful when it behaves the way we would expect physical objects to behave in the real world.

“In my lab, we are starting to use the HoloLens to understand the relationship between AR [augmented reality] experiences and subsequent psychological attitudes and behaviors toward the physical space itself,” Bailenson says. For example, he explains that his experiments indicated that virtual humans who “ghosted” through real objects—i.e., passed through them rather than going around or avoiding them as you would in the real world—were perceived as less “real” than ones who visibly obeyed the laws of physics.

Advances in mixed reality are likely to bring us headsets that are increasingly affordable and lightweight, but it is also possible that at least some of our future interaction with this technology will not involve wearables at all. “Spatial Augmented Reality” (SAR), for instance, which Welch developed with colleagues years ago, allows you to use projectors to change the appearance of physical objects around you, such as a table’s material or the color of a couch—without glasses.

“Of course SAR won’t work for all situations, but when it works, it’s really compelling and liberating,” Welch says. “There is something magical about the world around you changing when you don’t have anything to do with it—no head-worn display, no phone, nothing. You just exist in a physical world that is changing virtually around you.”

A Virtual Tool For A Collaborative Real World

Nonny de la Peña, founder and CEO of the immersive media company Emblematic, helped pioneer the use of virtual reality as a reporting and storytelling medium. Known as the “godmother of VR,” she believes immersive technologies come closest to giving audiences “the view from the ground,” putting them right on the scene of a journalist’s report as it unfolds. She sees the HoloLens as having the potential to increase the quality and depth of our understanding of the world, particularly with volumetric capture, a technique that creates a 3D model of subjects using multiple cameras and a green screen.

“Microsoft started offering high levels of realism using volumetric capture, something that’s just becoming part of the journalism tool set,” de la Peña says. Emblematic’s own After Solitary, an award-winning documentary produced in partnership with PBS and the Knight Foundation, used this technique to give the audience a more visceral sense of the psychological trauma of long-term imprisonment.

The main shift that mixed reality promises to bring about is that content will not be anchored to any one particular device. MR uses building blocks (real-world objects or computer-generated ones) to create environments that people enter and use to interact with one another. In that context, devices become a window that allows you to look into and access those worlds, rather than a repository where your personal content lives (like your smartphone).

Kipman points out that in these shared real/virtual environments, our relationship with computing changes from a personal to a collaborative one—from devices storing your own individual content, to common creative spaces mediated by technology.

This has profound implications for how we will design apps in the future, according to Kipman. If, for example, you create a virtual statue and place it as a hologram on top of a table in your living room, another person with a different mixed reality device should still be able to see your statue when they enter that room and move it around if they wish. That’s because the device does not store your content, but rather scans and maps the environment to determine what objects (both real and virtual) inhabit it.
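The idea that content belongs to the room rather than to the device can be sketched as a shared store keyed by room. This is a minimal Python sketch under stated assumptions, not Microsoft’s architecture or the Windows Mixed Reality API: `SharedSpatialStore`, `Device`, `Hologram`, and the room IDs are hypothetical names. Room recognition, which Kipman describes as scanning and mapping the environment, is stubbed out by passing a room ID directly, and positions are plain coordinates relative to an assumed per-room anchor.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Metres, relative to a hypothetical spatial anchor fixed in each room.
Position = Tuple[float, float, float]


@dataclass
class Hologram:
    name: str
    position: Position


@dataclass
class SharedSpatialStore:
    """Hypothetical cloud service: holograms belong to a room, not to a device."""
    rooms: Dict[str, Dict[str, Hologram]] = field(default_factory=dict)

    def place(self, room_id: str, hologram: Hologram) -> None:
        self.rooms.setdefault(room_id, {})[hologram.name] = hologram

    def move(self, room_id: str, name: str, position: Position) -> None:
        self.rooms[room_id][name].position = position

    def contents(self, room_id: str) -> Dict[str, Position]:
        # Snapshot of every virtual object that currently inhabits the room.
        return {name: h.position for name, h in self.rooms.get(room_id, {}).items()}


class Device:
    """A headset acts as a window onto the room and keeps no content of its own."""

    def __init__(self, owner: str, store: SharedSpatialStore) -> None:
        self.owner = owner
        self.store = store
        self.room: Optional[str] = None

    def enter(self, room_id: str) -> Dict[str, Position]:
        # Assumption: a real device would identify the room by scanning it and
        # matching the result against stored maps; here the ID is simply given.
        self.room = room_id
        return self.store.contents(room_id)

    def _current_room(self) -> str:
        if self.room is None:
            raise RuntimeError(f"{self.owner}'s device has not entered a room")
        return self.room

    def place(self, name: str, position: Position) -> None:
        self.store.place(self._current_room(), Hologram(name, position))

    def move(self, name: str, position: Position) -> None:
        self.store.move(self._current_room(), name, position)

    def visible(self) -> Dict[str, Position]:
        return self.store.contents(self._current_room())
```

In use, one person places a statue in the living room, a second device entering the same room sees it and can move it, and the first device then sees the new position, because neither headset holds its own copy.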

“These concepts require you to redefine an operating system in the context of mixed reality,” Kipman says. “You have to build a foundation that goes from the silicon to the cloud architecture that enables this shift from personal computing to collaborative computing. And these things take time.” Kipman smiles. “Until it doesn’t, then it just picks up and you’re like, What happened?”

Fast Company
