Meet BERT, Google’s Latest Neural Algorithm For Natural-Language Processing

Google is applying its BERT models to search to help the engine better understand language. BERT stands for Bidirectional Encoder Representations from Transformers, a neural network-based technique for natural language processing (NLP). It was introduced and open-sourced last year.

This type of technology will become especially helpful as more consumers use voice to search for answers or information.

When it comes to ranking results, BERT will help search better understand one in 10 searches, according to Pandu Nayak, Google Fellow and VP of search.

Search has to handle not only the queries Google sees frequently but also a large share it is seeing for the first time. “We see billions of searches every day, and 15 percent of those queries are ones we haven’t seen before — so we’ve built ways to return results for queries we can’t anticipate,” Nayak wrote in a post.

By applying BERT models to ranking and featured snippets in Search, Google can do a much better job of helping those searching find useful information.

This is especially true for longer, more conversational search queries, and for those where the meaning relies heavily on prepositions like “for” and “to.” In these cases, search can understand the context of the words in queries much faster.

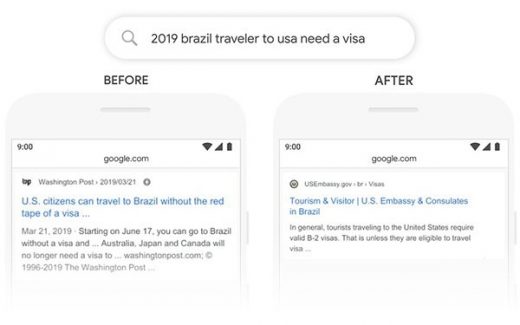

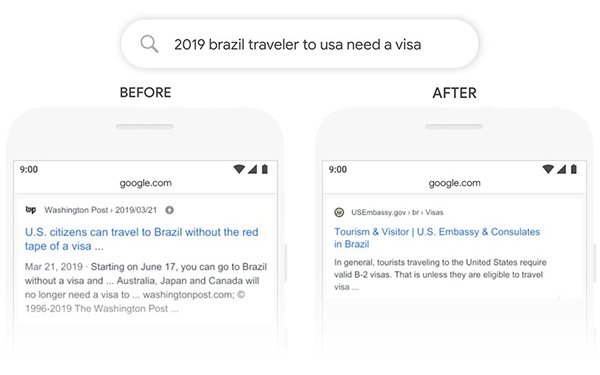

For example, the meaning of the query “2019 brazil traveler to usa need a visa” relies heavily on the word “to” and its relationship to the other words in the query.

Algorithms have long struggled to understand how much the connections between words matter. BERT will help search grasp the nuances of language, including common words like “to” that change the meaning of a query.
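To see why a small word like “to” is hard for simpler approaches, here is a minimal Python sketch. It is not BERT and not anything Google has described; it only compares an order-insensitive word count with an order-sensitive list of adjacent word pairs. The two queries and the helper names `bag_of_words` and `ordered_pairs` are made up for illustration.

```python
from collections import Counter


def bag_of_words(query):
    """Order-insensitive view: count each word, ignore position."""
    return Counter(query.lower().split())


def ordered_pairs(query):
    """Order-sensitive view: adjacent word pairs (bigrams)."""
    words = query.lower().split()
    return list(zip(words, words[1:]))


# Two hypothetical queries with opposite travel directions.
q1 = "brazil traveler to usa"
q2 = "usa traveler to brazil"

# The word counts are identical, so a pure bag-of-words model
# cannot tell which country is the destination.
same_bag = bag_of_words(q1) == bag_of_words(q2)

# The word pairs differ: ("to", "usa") versus ("to", "brazil").
same_pairs = ordered_pairs(q1) == ordered_pairs(q2)

print(same_bag, same_pairs)
```

A representation that discards word order treats both queries as the same, which is the kind of mistake the article says BERT is meant to reduce.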

Google also will use a BERT model to improve featured snippets in the two dozen countries where this feature is available. It’s seeing significant improvements in languages like Korean, Hindi and Portuguese.

BERT enables anyone to train their own question-answering system, and it processes each word in relation to all the other words in a sentence to better understand the language. The model runs on what Google calls its latest Cloud TPUs, custom-designed machine-learning ASICs, to serve search results and speed the delivery of the information.
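The idea of processing each word in relation to every other word can be sketched with a toy version of scaled dot-product self-attention, the building block of Transformer models. This is an illustration under heavy assumptions, not BERT's actual implementation: the two-dimensional embeddings are invented, and the learned projections, multiple heads and stacked layers of a real model are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Toy scaled dot-product self-attention without learned projections.

    Returns (weights, outputs): weights[i][j] is how much token i
    attends to token j; outputs[i] is the weighted mix of all vectors.
    """
    d = len(vectors[0])
    all_weights, outputs = [], []
    for q in vectors:
        # Score the current token against every token, left and right.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
                  for k in vectors]
        w = softmax(scores)
        out = [sum(w[j] * vectors[j][i] for j in range(len(vectors)))
               for i in range(d)]
        all_weights.append(w)
        outputs.append(out)
    return all_weights, outputs


tokens = ["traveler", "to", "usa"]
# Made-up embeddings, chosen only so the numbers are easy to follow.
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

weights, contextual = self_attention(embeddings)
for tok, row in zip(tokens, weights):
    print(tok, [round(x, 3) for x in row])
```

Each row of weights covers the whole sequence, so the representation of “traveler” is influenced by “to” and “usa” even though they come later. That two-way view is the “bidirectional” part of the name.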
