Microsoft and Google’s new AI sales pitches: We’re your last line of defense against your scatterbrained self


Would you trust an on-device AI to listen to your calls to stop scams in progress or to record all of your computing activity so you never forget any of it?

BY Rob Pegoraro

Google and Microsoft have spent the last few weeks unspooling enormous ambitions for artificial intelligence, but their smallest-scale showings of this technology—what they call on-device AI, but which you can also think of as offline or cloud-free AI—look the most interesting. And unsettling.

Both companies pose the same basic question: If we add an AI tool to your next device but bolt it down to run only offline, would you trust it to provide the kind of real-time assistance you might get from an experienced, trusted human looking over your shoulder?

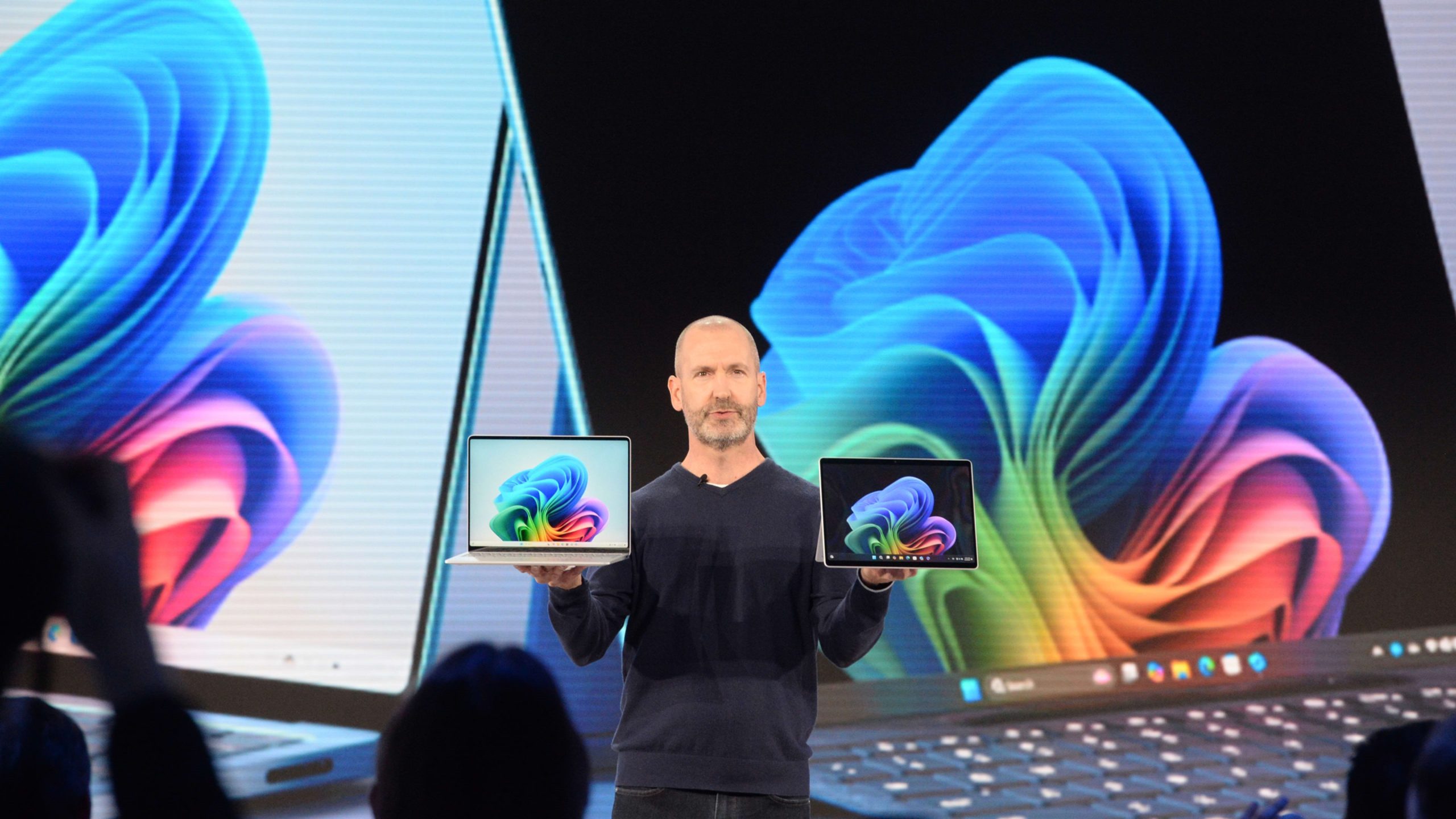

Microsoft put this offer on the table Monday with its introduction of “Copilot+” PCs that use enhanced on-device processing—the first batch feature Qualcomm’s Snapdragon X processor instead of the usual Intel and AMD suspects—to weave AI into the Windows experience.

These changes include a sweeping new feature called Recall that lets you ask Windows to track down something you read, saw, watched, listened to, or were working on months ago.

“With Recall, we’re going to leverage the power of AI and the new system performance to make it possible to access virtually anything you have ever seen on your PC,” said Yusuf Mehdi, a vice president and consumer chief marketing officer, during the event hosted at Microsoft’s Redmond, Washington, campus. “It’s going to be, honestly, as if you have photographic memory.”

That’s because Recall itself will have a photographic memory, constantly refreshed by capturing the content of your screen, which it analyzes with machine-vision software.

A support note explains that Recall takes these snapshots “every five seconds while content on the screen is different from the previous snapshot” and stores them in an encrypted on-device database that will need at least 50 gigabytes of free storage.

That note and a separate FAQ page explain that Recall will not capture private browsing sessions in supported browsers—for now, Microsoft’s Edge and Google’s Chrome as well as browsers based on Google’s Chromium open-source code.

You can also tell Recall to avert its gaze from specific apps and, in Edge, specific sites, and you can pause or stop its snapshot recording. Depending on how much storage you allot, its memory could go as far back as 18 months.

Neither document breaks down Recall’s default settings. In a statement, Katy Asher, Microsoft’s general manager of communications, said that Recall will be on by default, with a setup experience outlining the available privacy options.

“We educate the customer on what the controls are, what toggles are like, and so on,” says Pavan Davuluri, corporate VP for Windows and devices, of that OOBE (“out of box experience”).

Recall’s scope makes it far more powerful than the tools any one app already provides to search through its data.

“What Recall is collecting is much more comprehensive than any typical data collection: It’s a very granular view of everything you were actively doing,” says Blake E. Reid, a law professor at the University of Colorado Law School and director of the telecom and platforms initiative at the school’s Silicon Flatirons center. If some other app collected this much data this comprehensively without “extremely conspicuous notice,” he says, “I would assume it was malware.”

Reid says he appreciates Microsoft keeping this data offline but calls it a giant target: “The comprehensiveness of this data creates incentives for various actors to overcome barriers to local storage, including law enforcement, employers, and malware creators.”

Google made its own sales pitch for on-device AI a week earlier at its I/O conference in Mountain View, California, when an executive took a break from demonstrations of cloud-based AI to tout Android’s on-device Gemini Nano AI layer.

“The experience is . . . faster while also protecting your privacy,” said Dave Burke, VP of engineering for Android. “Android can help protect you from the bad guys, no matter how they try to reach you.”

He demoed this by answering a call to his phone onstage in which a reassuring voice claimed to be calling from Burke’s bank to report suspicious activity in his account.

As the fake caller said, “I’m going to help you transfer your money to a secure account we’ve set up for you,” the audience laughed knowingly and Burke’s phone served up its own reaction: a “Likely scam” dialog with a prominent “End call” button.

Gemini Nano, listening all along, had picked up on that fraud pattern without invoking any of Google’s cloud services. Said Burke: “Everything happens right on my phone, so the audio processing stays completely private to me and on my device.”

He did not describe what might trigger Gemini Nano to listen to a call and how long it might store the resulting data, and Google has not answered questions about those details since.

The in-person attendees applauded, but subsequent reaction was less welcoming. Meredith Whittaker, president of the encrypted messaging service Signal, tweeted a common reaction: “This is incredibly dangerous. It lays the path for centralized, device-level client-side scanning.”

Matthew Green, a computer-science professor at Johns Hopkins University, says that while Google’s call monitor appears “focused on protecting users,” its existence could encourage governments to require more local scanning for threats like child sex abuse.

See, for instance, so-far unsuccessful moves by European Union leaders to push for on-device scanning of encrypted messages for child sexual-abuse material (CSAM) and Apple’s 2021 plan to have iOS scan local images for matches with known CSAM.

Apple dropped that idea after complaints that once the company had built such a monitoring system, governments that had already compelled Apple to clamp down on iOS features would force it to repurpose that code base for their own ends.

Concludes Green: “I see this development as worrying for what it might enable in the hands of well-meaning legislators (and less-well-meaning governments) not so much because it’s ominous by itself.”

Microsoft’s proposal is far more ambitious than Google’s and gives adversarial governments far more to consider, but both companies invite skepticism about whether the AI they want to put on your shoulder is an angel or a devil.

That’s not only because of their past misadventures, but because both opted to show off an ambitious new capability without spelling out such basics as how it will work and whether it will be on by default. And neither should need to hit up an AI chatbot for marketing advice to know that this trust-us approach is likely to land with a thud before an increasingly tech-skeptical audience.


Fast Company
