New rules from Microsoft ban use of AI for facial recognition by law enforcement

Microsoft has reaffirmed its stance against law enforcement using its Azure OpenAI Service, its generative artificial intelligence (AI) offering, to perform facial recognition, joining other tech giants such as Amazon and IBM that have made similar decisions.

The Washington-based tech giant amended the terms of service of its Azure OpenAI offering to explicitly prohibit its use ‘by or for’ police departments for facial recognition in the U.S.

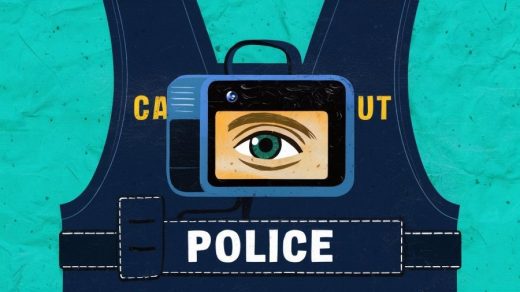

Also now explicitly prohibited is the use of “real-time facial recognition technology on mobile cameras used by any law enforcement globally to attempt to identify an individual [sic] in uncontrolled, ‘in the wild’ environments, which includes (without limitation) police officers on patrol using body-worn or dash-mounted cameras using facial recognition technology to attempt to identify individuals present in a database of suspects or prior inmates.”

The company has since claimed that its original change to the terms of service contained an error. It told TechCrunch that the ban applies only to facial recognition in the U.S. and is not an outright ban on police departments using the service.

Why has Microsoft banned facial recognition with its generative AI service?

This update to Microsoft’s Azure OpenAI terms of service came just a week after Axon, a military tech and weapons company, announced a tool built using OpenAI’s GPT-4 to summarize body camera audio.

Functions that use generative AI in this way have many pitfalls. These tools are prone to ‘hallucinate’ and make false claims (OpenAI is currently subject to a privacy complaint over its failure to correct inaccurate data from ChatGPT), and facial recognition suffers from rampant racial bias caused by racist training data (late last year, for example, incorrect facial recognition led to the false imprisonment of an innocent Black man).

These recent changes reinforce a stance that Microsoft has maintained for several years. In 2020, speaking to The Washington Post during the Black Lives Matter protests, Microsoft President Brad Smith said, “We will not sell facial-recognition technology to police departments in the United States until we have a national law in place, grounded in human rights, that will govern this technology.”

The current spate of protests across the world over the genocide of Palestinians in Gaza has prompted a renewed commitment to the protection of human rights by tech companies, as issues of police brutality towards protestors arise in the press.

Featured image credit: generated with Ideogram

The post New rules from Microsoft ban use of AI for facial recognition by law enforcement appeared first on ReadWrite.