It’s been almost four years since my friends Sal Randazzo, Charlie Whitney, and I released a free-to-play mobile game called Specimen. Since then, about 300,000 people have played 1.5 million sessions. And collectively, the world has tapped almost 60 million little colored blobs.

Specimen is a game about color perception that is easy to learn and tough to master. Players simply tap the vibrant blob that matches the background color. As you advance, you earn boosters and coins to combat an ever-faster clock. (Here’s a bit more, if you’re curious.)

Though we put light ads in the game, Specimen was a loss leader, to say the least. After a year and a half of design and development, we brought in less than $1,000. But the project was always more about curiosity than about optimizing for financial return. We wanted to see if we could design a game with an intellectual seed planted inside it: if we made a game about perception, could we generate a global, anonymized dataset about how the world sees color? Could Specimen’s gameplay be akin to Luis von Ahn’s dual-purpose reCAPTCHA project, a ubiquitous online security test that helped digitize thousands of old books?

When we launched the game, we set our analytics to record every specimen tapped, including both the player-selected color and the correct target color. This meant we could log the RGB value each time the player was correct. More interestingly, we used the CIELAB (L*a*b*) color model, in addition to hue, saturation, and value (HSV), to measure the difference between incorrect color choices and the correct target. (Aside from this color comparison data, we did not request or expressly collect personally identifying data; reasonable privacy was a concern.)
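To make that “delta” concrete, here is a minimal Python sketch of how the gap between a tapped color and its target might be measured. The article doesn’t say which RGB space, white point, or difference formula Specimen logged, so this assumes 8-bit sRGB, a D65 white point, and the simple CIE76 delta E (straight-line distance in L*a*b* space), plus per-component HSV differences. The function names are hypothetical.

```python
import colorsys
import math

# ASSUMPTIONS (not from the article): colors are 8-bit sRGB triples, the white
# point is D65, and the color difference is CIE76 delta E (Euclidean distance
# in CIELAB). Specimen's actual logging formula is not described.

# D65 reference white in CIE XYZ, with Y normalized to 1.0.
WHITE_D65 = (0.95047, 1.00000, 1.08883)


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*)."""
    r, g, b = (srgb_to_linear(v / 255.0) for v in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b

    def f(t):
        # Cube root above a small threshold, linear segment below it.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), WHITE_D65))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e76(rgb1, rgb2):
    """CIE76 color difference: Euclidean distance between two CIELAB colors."""
    return math.dist(srgb_to_lab(rgb1), srgb_to_lab(rgb2))


def hsv_delta(rgb1, rgb2):
    """Absolute differences in hue (treated as circular), saturation, value."""
    h1, s1, v1 = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb1))
    h2, s2, v2 = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb2))
    dh = abs(h1 - h2)
    return (min(dh, 1.0 - dh), abs(s1 - s2), abs(v1 - v2))
```

A call like `delta_e76((200, 40, 40), (190, 50, 60))` yields one number per wrong tap; the larger it is, the farther the player’s guess was from the target. CIE76 is the simplest choice; later formulas such as CIEDE2000 track human judgments more closely at the cost of more math.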

However, we quickly realized that with so many taps, we were collecting tens of millions of data points, and processing them in any meaningful way felt out of reach. We were in over our heads.

[Image: courtesy PepRally]

But sometimes when you put something out on the internet, the internet gives back. About six months after we released Specimen, we were contacted by Phillip Stanley-Marbell, then a researcher at MIT and now an assistant professor at Cambridge and a Turing Fellow. He was a fan of the game and asked whether we were willing to share our data or collaborate. As it turns out, Stanley-Marbell was working on something he dubbed Project Crayon, which explores ways to exploit the limits of human color perception to reduce the power screens draw and so extend battery life. From there, Stanley-Marbell looped in other academics: Martin Rinard and José Pablo Cambronero at MIT, and Virginia Estellers at UCLA. After we made a few tweaks to the color data we captured, they cleaned up the dataset and built tools to query it.

Stanley-Marbell and Cambronero then ran experiments to answer our team’s original question about global variation in perception. While their working paper is not yet peer-reviewed, their preliminary analysis suggests there is variation across countries and regions of the world. For example, median user accuracy in matching colors ranged from 83.5% in Norway to 73.8% in Saudi Arabia, with standard deviations of 5.98% and 13.02%, respectively. (We infer each user’s country from their service provider.) The research also began to look at accuracy for specific colors and found, for example, that Scandinavian countries shone when it came to correctly identifying Red-Purple hues, while India and Pakistan underperformed on those same hues. Singapore struggled to identify “standard” greens but was above average when those greens leaned toward yellow. Explaining this geographic variation in perception is complex. But the vast size of the Specimen dataset provides clues to a basic curiosity: Do you see what I see?
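The per-country figures are summary statistics over players. As a rough illustration only, here is how median accuracy and its spread might be computed once each player has an accuracy score and an inferred country. The records are toy numbers, not Specimen data, and using the population standard deviation (`pstdev`) rather than the sample version is an assumption; the working paper’s exact method isn’t described here.

```python
from collections import defaultdict
from statistics import median, pstdev

# HYPOTHETICAL toy records: (country inferred from service provider,
# fraction of that player's taps that were correct). NOT Specimen data.
players = [
    ("NO", 0.84), ("NO", 0.83), ("NO", 0.90),
    ("SA", 0.60), ("SA", 0.74), ("SA", 0.88),
]


def accuracy_by_country(records):
    """Return {country: (median accuracy, population std dev)} over players."""
    by_country = defaultdict(list)
    for country, accuracy in records:
        by_country[country].append(accuracy)
    return {c: (median(accs), pstdev(accs)) for c, accs in by_country.items()}


for country, (med, sd) in sorted(accuracy_by_country(players).items()):
    print(f"{country}: median {med:.1%}, std dev {sd:.2%}")
```

A wider spread, as in the toy “SA” group here, means individual players in that country differ more from one another, which is a separate question from whether the country’s median is high or low.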

[Image: courtesy PepRally]

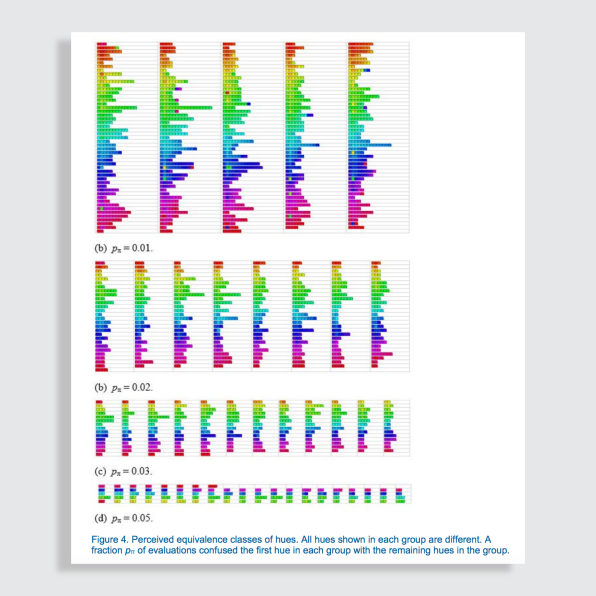

The Specimen data also advanced Stanley-Marbell’s Project Crayon. He and Rinard published an academic paper entitled “Perceived-Color Approximation Transforms for Programs that Draw.” (Examples of programs that draw include web browsers, messaging apps, and, yes, games: essentially any program that displays graphics. “Programs that draw” make up the majority of apps on the App Store.)

Stanley-Marbell and Rinard’s paper introduces Ishihara, a new language that enables computer programs to automatically decide which colors to display in order to extend battery life. For example, if one shade of green is reasonably close to a slightly different shade of green, Ishihara can tell your device to display whichever shade is less power-intensive. The paper used the Specimen dataset to calibrate what counts as “reasonably close” in terms of color. Top line, Ishihara reduced OLED display power dissipation by up to 15%. How ironic that an addictive tapping game that drained your phone could ultimately lead to longer battery life.
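Ishihara’s actual syntax and power model aren’t described in this piece, so what follows is only a hypothetical Python sketch of the underlying idea: search near a requested color for the shade that costs the least power while staying within a perceptual threshold. It assumes a made-up linear OLED power model (per-channel weights, with blue costliest) and a CIE76 threshold of 2.0 standing in for the “reasonably close” tolerance that the paper calibrated from Specimen data.

```python
import itertools
import math

# HYPOTHETICAL power model: OLED subpixel power assumed proportional to linear
# intensity, weighted per channel. The weights are illustrative, not measured.
CHANNEL_WEIGHTS = (1.0, 0.8, 1.6)  # (R, G, B), arbitrary units

# HYPOTHETICAL "reasonably close" threshold, in CIE76 delta E units.
MAX_DELTA_E = 2.0

WHITE_D65 = (0.95047, 1.00000, 1.08883)


def to_linear(c):
    """8-bit sRGB channel value -> linear light in [0, 1]."""
    v = c / 255.0
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb):
    """8-bit sRGB triple -> CIELAB (L*, a*, b*), D65 white point."""
    r, g, b = (to_linear(c) for c in rgb)
    xyz = (0.4124 * r + 0.3576 * g + 0.1805 * b,
           0.2126 * r + 0.7152 * g + 0.0722 * b,
           0.0193 * r + 0.1192 * g + 0.9505 * b)
    fx, fy, fz = (t ** (1 / 3) if t > (6 / 29) ** 3 else t / (3 * (6 / 29) ** 2) + 4 / 29
                  for t in (v / w for v, w in zip(xyz, WHITE_D65)))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def oled_power(rgb):
    """Modeled power for one pixel under the hypothetical linear model."""
    return sum(w * to_linear(c) for w, c in zip(CHANNEL_WEIGHTS, rgb))


def cheapest_similar_color(target, max_delta_e=MAX_DELTA_E, step=4, reach=3):
    """Search a small grid of sRGB colors around `target` and return the one
    with the lowest modeled power whose CIE76 distance from `target` is at
    most `max_delta_e`. Returns `target` itself if nothing cheaper qualifies."""
    target = tuple(target)
    target_lab = srgb_to_lab(target)
    best, best_power = target, oled_power(target)
    offsets = range(-reach * step, reach * step + 1, step)
    for delta in itertools.product(offsets, repeat=3):
        # Clamp each shifted channel to the valid 0..255 range.
        cand = tuple(min(255, max(0, c + d)) for c, d in zip(target, delta))
        if math.dist(srgb_to_lab(cand), target_lab) > max_delta_e:
            continue  # visibly different: not an acceptable substitute
        power = oled_power(cand)
        if power < best_power:
            best, best_power = cand, power
    return best
```

Because black draws no power under this model, `cheapest_similar_color((0, 0, 0))` simply returns black; for any other color the result is a nearby shade with equal or lower modeled power that stays under the chosen threshold.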

[Image: courtesy PepRally]

The Specimen data was also used in an academic paper about DaltonQuant, a new bespoke method of compressing images based on a viewer’s color perception deficiencies. The algorithm used Specimen’s 28 million color-matching data points to enable a more aggressive form of color quantization, a process that strategically reduces the number of distinct colors in an image. DaltonQuant’s compression reduced file sizes by 22% to 29% compared with current state-of-the-art algorithms. (Note that this paper has not been peer-reviewed.) Image compression is a hidden but ubiquitous factor in our digital lives. As a designer, I’m well acquainted with compressing that .png, .mp4, or .gif to be small enough to load when you’ve only got three out of five bars of service.
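DaltonQuant’s algorithm isn’t spelled out here, so the Python sketch below is not it. It is a generic, greedy color quantizer meant only to show what “reducing the number of distinct colors” means in practice: each pixel reuses an existing palette color if one lies within a distance threshold, and otherwise starts a new palette entry. Plain Euclidean distance in RGB and the default threshold of 24 are simplifying assumptions.

```python
import math


def quantize(pixels, threshold=24.0):
    """Greedy color quantization (generic illustration, not DaltonQuant).

    Each pixel maps to its nearest palette color if that color is within
    `threshold` (Euclidean distance in RGB); otherwise the pixel's color is
    added to the palette. Returns (palette, indices), where indices[i] is the
    palette entry used for pixels[i]."""
    palette = []
    indices = []
    for px in pixels:
        nearest = min(range(len(palette)),
                      key=lambda i: math.dist(palette[i], px),
                      default=None)
        if nearest is not None and math.dist(palette[nearest], px) <= threshold:
            indices.append(nearest)  # close enough: reuse an existing color
        else:
            palette.append(tuple(px))  # visibly new: grow the palette
            indices.append(len(palette) - 1)
    return palette, indices


pixels = [(250, 0, 0), (245, 5, 3), (0, 0, 250), (2, 1, 248), (250, 0, 0)]
palette, indices = quantize(pixels)
print(palette, indices)
```

Raising the threshold merges more colors into fewer palette entries, and a smaller palette generally means a smaller indexed image, such as a palette-based PNG. Roughly speaking, a perception-aware method chooses that tolerance to suit the viewer rather than using one fixed number.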

I am not sure where Ishihara or DaltonQuant may lead, or what Stanley-Marbell, Rinard, Cambronero, and Estellers’s subsequent research may yield, Specimen-related or not. But I love that our little game ricocheted into the universe and proved useful to academics who had questions I would never have thought to ask. Curiosity can provide quite the ride.

While you can still download Specimen if you have an iPhone older than the iPhone X, the Specimen team has decided to stop supporting its development. The economics of the App Store are tough, and we’d prefer to focus on new endeavors rather than tweak financial levers that, frankly, would make the game less fun. This labor of love has run its course.

But the fruits of the project live on in open source. A generic version of José Pablo Cambronero’s tools for querying the Specimen dataset is hosted on GitHub. My greatest hope is that other researchers find and use what was gathered, and that other designers and engineers consider leveraging play in unexpected ways.

Erica Gorochow is a motion design director and illustrator. Her practice, PepRally, is based in Brooklyn.

Fast Company
