POV: What Biden gets right—and wrong—in his calls for tech accountability

By Daniel Barber

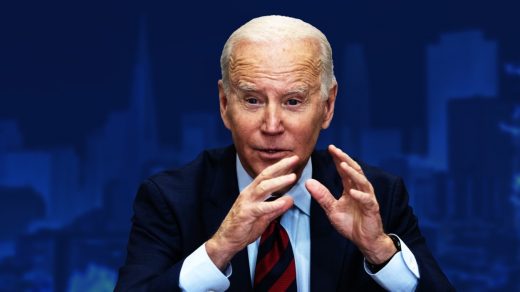

Last week, President Biden penned an op-ed in the Wall Street Journal calling on Congress to “hold Big Tech accountable.” Given the practices that have come to light in recent years, that sounds like a noble goal.

People like Frances Haugen shocked the world with the release of the Facebook Papers. European regulators have fined companies like Amazon and Meta for GDPR violations, and even individual states, in the absence of a federal data privacy law, have gone after Big Tech (e.g., the $392 million settlement 40 states reached with Google over location tracking). In this context, further targeting these companies could be seen as a huge win for Biden, Congress, and the American people.

I am a fan of actions that bring awareness to privacy issues and protection of individuals’ human rights. That said, a couple of things are keeping me up at night.

Conflation of legislation

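President Biden highlighted three specific areas of reform in his op-ed: serious federal privacy protections, especially for children; reform of Section 230 so that platforms take responsibility for the content they spread and the algorithms they use; and more competition in the tech sector.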

First off, these are three very distinct issues that often get lumped together when legislators talk tech. From a practical perspective, that makes sense: each issue spurs a myriad of opinions, which can stall the legislative process. To get around the inevitable quagmire, everything “Big Tech” gets bundled together, so that if one lawmaker gets what he or she wants on privacy, another gets stricter antitrust provisions, and a law can move forward. The problem is that legislation passed this way stays so close to the surface, in an effort to avoid offending anyone, that it is rarely an effective mechanism for meaningful change.

The American Data Privacy and Protection Act is a prime example. The U.S. is one of the few leading nations without a national data privacy law on the books. To address that gap, the House Committee on Energy and Commerce drafted the bill and advanced it to the full House of Representatives in July 2022. But now that the bill is open for debate, it has been criticized as too soft, with critics advocating for something more akin to a federal version of California’s privacy law (the California Consumer Privacy Act, as strengthened by the California Privacy Rights Act, or CPRA) or the European Union’s GDPR: something with real teeth.

Making Big Tech the enemy is an easier sell

The second, and much larger, issue I have with Biden’s call for reform concerns data privacy specifically. It is really easy to make Big Tech the villain, and doing so is perhaps the best way to sell a piece of legislation. But it’s not the full story.

Focusing on Big Tech to protect children’s rights, to protect women’s rights, to protect human rights is not enough. In fact, it’s nowhere close. With the explosion of cloud services, personal data now sprawls across nearly every company’s tech stack, not just Big Tech’s, and that poses significant risks.

Let’s take the goal of safeguarding children’s information online as an example. The Big Tech players have certainly had some massive slipups, but they are being watched very carefully, and their moves are much more transparent to regulators than they once were. Whose moves aren’t? Those of niche companies built around children’s needs and interests.

These sites and apps contain large volumes of children’s data, collected for seemingly legitimate purposes, like learning. But what else are companies doing with that information? It’s unclear.

Are they selling it? Legislation protects things like names and home addresses. The dirty little secret, though, is that companies could be selling a child’s IP address and location data as the child moves around, or behavioral data about how the child uses a particular game, which could then be used to build a profile for ad targeting. It’s horrifying when you really dive into the details.

Scenarios like this are precisely why Sephora had to pay $1.2 million to the State of California in August 2022. The official complaint concerned technical compliance obligations (failing to disclose that it sold customer data and to honor opt-out requests sent via the Global Privacy Control signal), but if you read between the lines, it was also about holding onto women’s location data. Ad providers were tracking women at a time of intense public debate over reproductive rights, which made the practice that much more egregious. If location data on female customers got into the wrong hands, it could be very, very dangerous.

While it’s easy for legislators to cry foul on Meta, Amazon, and Google, they should also pay a bit more attention to how smaller, specialty companies are operating. This is where the scary stuff lives—and often the companies engaging in such data collection practices don’t even realize it is dangerous. They’re retailers and marketers who need guidance on why certain types of data should not be collected and retained.

Another twist

One might assume that Big Tech companies oppose federal privacy legislation. After all, they’ve spent millions lobbying against it. But again, this comes back to what gets bundled together in proposed legislation. Big Tech may grumble about data privacy rules, but those rules are far easier and cheaper to contend with than antitrust actions over anticompetitive behavior that could break up their companies. Privacy is a battle Big Tech is willing to concede. In fact, these companies are now actively calling for federal data privacy legislation.

As more states pass their own privacy laws (and, in California’s case, strengthen an existing one), companies face a patchwork of different, confusing, and convoluted rules that they must decipher and comply with. Add to that the fact that other jurisdictions have been able to dictate the data privacy agenda, issuing significant fines to U.S. companies, and it gets even worse.

We’ve reached the moment when it is in the best interest of Big Tech, and everyone else, to have one clear and consistent set of rules to follow. And by cooperating and changing certain practices, some companies may be able to escape the glare of deeper scrutiny.

Summary judgment

There are downsides to conflating issues for the sake of passing a law, and there are still holes that must be filled. To do so, we need to take a comprehensive approach that addresses the ways in which data is collected, used, and shared. This includes ensuring that all companies are transparent about their data practices and giving individuals control over their own data.

I applaud those who shine a light on these very important issues and appreciate the desire to push for the passage of legislation that safeguards the privacy and civil rights of all Americans.

Daniel Barber is the CEO and co-founder of DataGrail, the first integrated privacy solution for modern brands to build trust with transparency.
