Read real stories of how YouTube pushed people down shocking rabbit holes

There’s a gamer out there who doesn’t smoke weed, and a horse enthusiast who doesn’t enjoy equine porn. But they say they got lots of YouTube recommendations for videos on those topics.

These are two of a curated group of 28 “YouTube regrets” stories that the Mozilla Foundation, best known for the Firefox browser, is publishing today. In the collection of stories, anonymous people describe the way that they began watching something innocuous on YouTube before the platform’s recommendation algorithm started showing them extreme videos.

Included are stories of recommended videos featuring gratuitous violence, UFO conspiracy theories, anti-LGBT+ sentiment, white supremacist propaganda, and anti-women “incel” rants. One parent recounts how their 10-year-old daughter began by searching for tap dance videos and was directed to “horrible unsafe body-harming and body-image-damaging advice.” The girl has restricted how much she eats and even drinks to maintain a super-slender appearance, the parent says.

The foundation received over 2,000 such tales within a few days of putting out a call for submissions to its email list of followers, says Ashley Boyd, the foundation’s vice president of advocacy. The goal of sharing these stories with the world? To pressure YouTube into collaborating with outside experts on reforming a recommendation system that sometimes goes off the rails.

None of the stories have been independently verified. Even a couple thousand stories of bad recommendations are minuscule for a service where people watch over a billion hours of video every day. “It’s not meant to be a scientific or rigorous accounting, but it does give a flavor for the way in which people are experiencing [YouTube],” says Boyd.

YouTube says its algorithms send people “on average” toward popular videos on subjects like music and gaming, not “borderline” content, like conspiracy theories, flat-earth theories, and misinformation. Borderline content makes up “only a fraction of 1%” of watch time, says YouTube.

In some of Mozilla’s cases, the recommendations may have worked as they should, even if that made users uncomfortable. One viewer who watched videos by conservative commentator Ben Shapiro, for instance, was upset that videos like “Person X DESTROYS liberal” showed up in their recommended video feed.

Many of the genuinely disturbing videos that the stories describe would seem to violate YouTube community guidelines—for example, prohibitions against “hateful content,” “harassment and cyberbullying,” and “violent or graphic content.” And many are supposed to be addressed by measures YouTube has been working on. In June, for instance, YouTube announced new efforts for “removing more hateful and supremacist content” that proclaims one group superior to others.

YouTube claims a lot of success already, after making more than 30 changes to the recommendation system in the United States this year. And the company says it’s recommending more “authoritative” videos to counteract the conspiracy and quackery clips folks are viewing (although it’s not necessarily taking those clips down). Most of Mozilla’s horror stories don’t specify how long ago the extreme recommendations happened, although one complaint about conspiracy videos dates back to 2013.

The struggle to reform recommendations

YouTube says that views from recommendations of “borderline content and harmful misinformation” have dropped by 50% in the past year.

But Mozilla wants proof. “I’m asking them to verify their own claim,” says Boyd. “If you want to rebuild trust, the way to do that is to really show your work, show how you came to that conclusion, and open yourself up to independent verification.”

YouTube has a lot of rebuilding to do. A June New York Times article labeled the site an “Open Gate for Pedophiles.” In the story, Harvard researchers say its algorithms aggregated innocent videos of kids, such as girls playing at the pool, and recommended them as suggestive content. Users watching erotic videos would be recommended clips of ever-younger women—including videos of girls ages 5 and 6 in swimsuits.

Even sincere efforts at reform could be hampered by the overall way that YouTube works, says Brittan Heller, counsel for corporate social responsibility at law firm Foley Hoag. “As long as their algorithm is premised on engagement, they’re going to have a rough time weeding out some of the most controversial but engaging kinds of content,” says Heller, who is not affiliated with the Mozilla campaign. YouTube says it has not seen a correlation between harmful misinformation and increased engagement.

Mozilla professes a conciliatory approach. “We have every reason to believe you want to take this very seriously and really align resources internally to this,” Boyd says she told YouTube. “But the era of ‘trust us’ is sort of past.” So Mozilla is asking that YouTube open its data to scrutiny by all outside researchers, such as former YouTube engineer Guillaume Chaslot, who runs the AlgoTransparency project.

The demands are specific and extensive, including the ability to query the number of times a video is recommended and the number of views that result from a recommendation. Mozilla and the researchers it represents also want to access data like whether a video was reported or considered for removal.

And they want help developing “simulation tools” to evaluate YouTube’s recommendation algorithm, so they can see how and why viewers get led down a particular rabbit hole.

Boyd and colleagues met with Google for what she describes as “a very good meeting” in September. They presented some of the recommendation horror stories to YouTube, she says, and also laid out their demands. “[YouTube was] clear they’re not ready to be more public or open about their efforts along the lines of what we asked at this time,” Boyd says.

How to shield your eyes, in the meantime

If you’re getting offensive, annoying, or just plain bonkers video recommendations on YouTube, there’s plenty you can do without waiting for the company or anyone else to fix things.

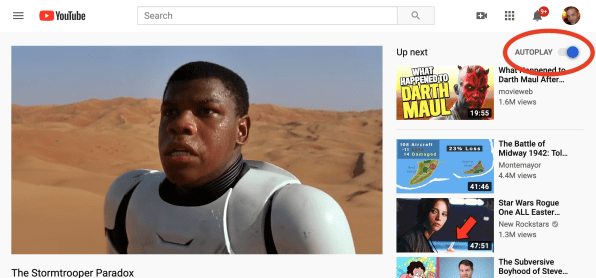

For starters, consider turning off autoplay by clicking the toggle switch in the upper right of the screen. Even if YouTube’s algorithms want to send you dreck, at least it won’t play automatically and endlessly. Heller suggests that YouTube simply disable autoplay for politically related content because the recommendation algorithm “ends up privileging fringe views.” Several researchers have advocated turning off recommendations for videos featuring children, and YouTube has reportedly considered turning off autoplay for kids’ videos.

Also, you can simply ignore whatever video thumbnail recommendations appear to the right of what you’re watching—though that may be hard for most people. YouTube told the New York Times that recommendations drive 70% of views on the site.

If you can’t resist the temptation to watch what pops up, make an effort to refine the process. One of the Mozilla horror stories is about an astronomy enthusiast who was inundated with “all kinds of ‘Area 51’ weird pseudoscience.” But, they confessed, “All I had to do is click ‘not interested’ a few times and they gradually disappeared.”

“We urge people to take advantage of settings and tools that currently exist,” says Boyd, but she adds, “If you were to wrangle all the settings and lock down every service and device you have, it could be a part-time job.”

Ultimately, there are limits to what anyone can do on the receiving end when big tech is omnipresent. But critics say that YouTube can do a lot more to limit the ways its recommendation algorithms show harmful content, and they’re asking for a chance to help.
