The good news: They’re not evolving very fast, and new user IDs like biometrics may eventually challenge their growth.

You know that any report on bad bots is not going to be optimistic when it starts with a comparison to Pearl Harbor.

In fact, the fourth annual “Bad Bot Report” from anti-bot service Distil Networks is downright depressing. It continues to document the onslaught of hostile bots that are swarming over websites, login pages and anything else that pokes up its head online.

Pearl Harbor is mentioned because, early on the morning of December 7, 1941, a young radar operator saw a huge blip coming toward the naval base. But, because the tech was new and it was difficult to tell friendly planes from hostile ones, the warning was ignored until it was too late.

Today, the report said, there is a similar challenge in distinguishing bad bots from friendly ones, even as a major attack is underway.

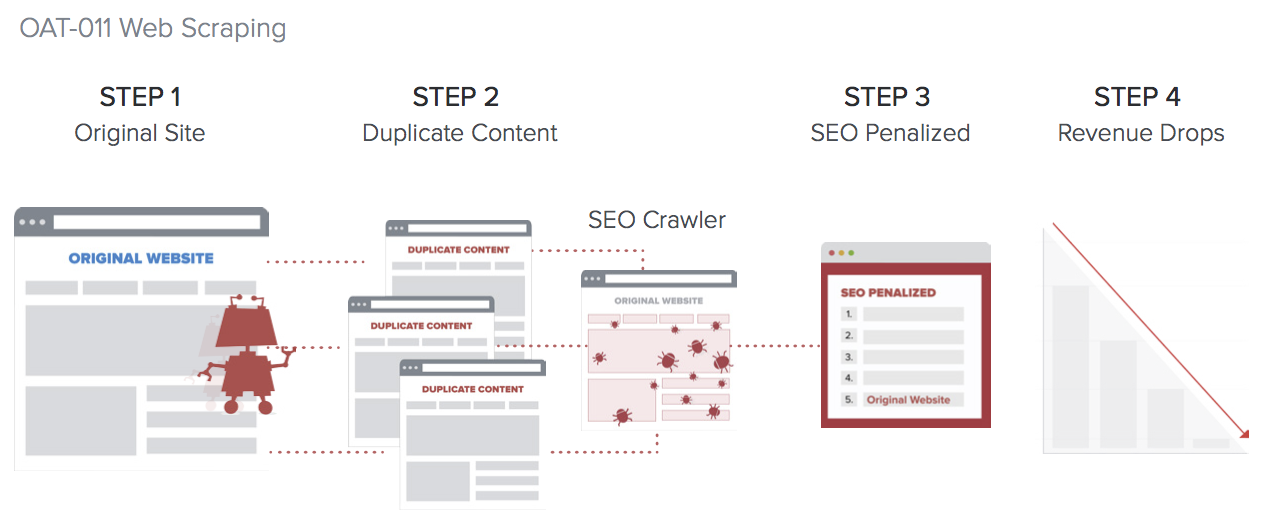

Some bots wear white hats, such as search engine spiders, and they’re relatively easy to spot. Some wear gray hats, like those scraping findings on opinion-gathering sites or gathering competitive info like pricing and using it against you, without your permission. They are more difficult to recognize. Ninety-seven percent of sites with proprietary content or pricing were scraped involuntarily in 2016 by bots, according to Distil. From the report:

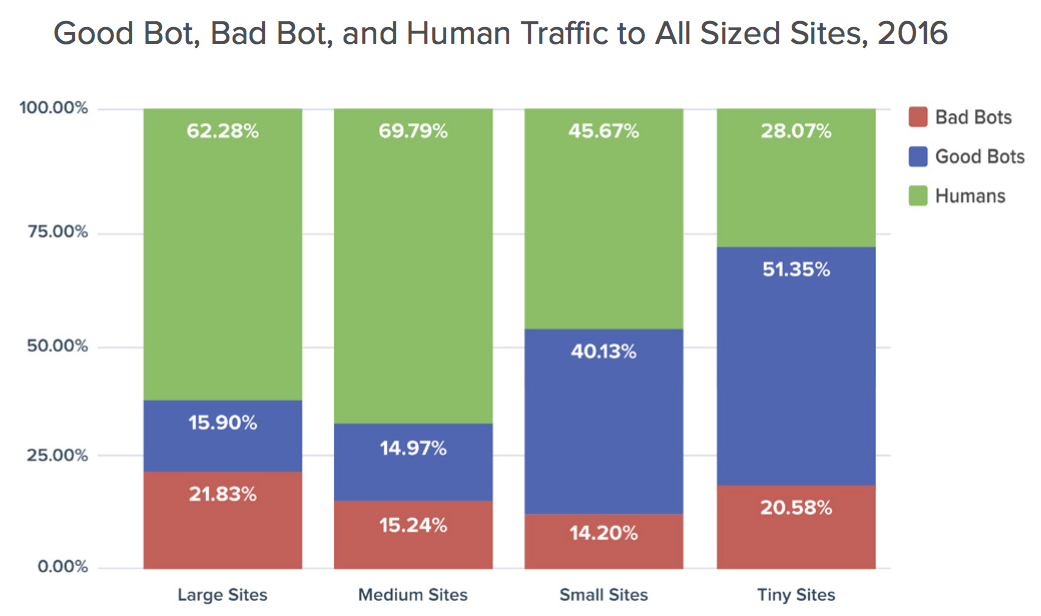

And black-hatted bots are the ones that want to steal from your site, take it over, crash it or impersonate users (16.1 percent of bad bots self-reported as mobile users). The bad ones accounted for an astounding one-fifth of all web traffic last year — almost seven percent more than in 2015.

Specifically, the report says, 96 percent of sites with login pages experienced attacks last year from bad bots, defined as automated programs that carry out malicious activities.

Ninety-three percent of marketing analytics trackers and performance measurement tools — marketers’ daily guidance instruments — were hit by bad bots. The price of those attacks could be misleading SEO stats or distorted advertising priorities.

Nearly a third of sites with forms sustained attacks with spam bots, and 82 percent of sites with signup pages were hit by bots intent on creating fake accounts.

One difference between this year’s report and last year’s, Distil Networks CEO and co-founder Rami Essaid told me, is the increasing use of data centers to launch attacks: about 60 percent of bad bots now come from data centers.

For instance, Amazon Web Services accounts for 16.37 percent of all bad bot traffic, four times more than the next ISP. But, Essaid said, when Amazon is alerted about bad bot traffic and shuts that server down, the perpetrators quickly spin up another server on Amazon or another ISP under false credentials.

The report offers a few signs of bad bots on your site:

You can tell bad bots are on your site when unexpected spikes in traffic cause slowdowns and downtime. In 2016, a third (32.36%) of sites had bad bot traffic spikes of 3x the mean, and averaged 16 such spikes per year.

You’ll know bad bots are a problem when your site’s SEO rankings plummet due to price scraping, or when skewed analytics lead to misguided ad spend. 93.9% of sites were visited by bad bots that trigger marketing analytics trackers and performance measuring tools.

Because of bad bots, your company will have a plethora of chargebacks to resolve with your bank due to fraudulent transactions. You’ll see high numbers of failed login attempts and increased customer complaints regarding account lockouts.

Bad bots will leave fake posts, malicious backlinks, and competitor ads in your forums and customer review sections. 31.1% of sites were hit with bots spamming their web forms.
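
For readers who want to check their own logs against the first of those signs, here is a minimal sketch of the "3x the mean" test. The report does not spell out how it measures a spike, so the hourly buckets, the function name `find_traffic_spikes` and the sample numbers are all assumptions made for illustration.

```python
from statistics import mean


def find_traffic_spikes(request_counts, multiplier=3.0):
    """Return the indexes of intervals whose request count is at least
    `multiplier` times the mean of all intervals.

    Assumption: `request_counts` holds one request total per interval
    (for example, per hour). The report does not define the interval.
    """
    if not request_counts:
        return []
    baseline = mean(request_counts)
    if baseline == 0:
        # No traffic at all, so there is nothing to call a spike.
        return []
    threshold = multiplier * baseline
    return [i for i, count in enumerate(request_counts) if count >= threshold]


# Made-up hourly request counts; the mean is 200, so the threshold is 600.
counts = [100, 110, 90, 105, 95, 900, 100, 100]
print(find_traffic_spikes(counts))  # [5]
```

Note that the mean here includes the spike itself, which raises the threshold slightly. A rolling baseline taken from earlier intervals would be a reasonable alternative.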

And, lest you collapse into a total funk over these findings, the report offers some tips for combat:

One way to choke off bad bots is to geo-fence your website by blocking users from foreign nations where your company doesn’t do business. China and Russia are a good start.

Ask yourself if there is a good reason for your users to be on browsers that are several years past their release date. Having a whitelist policy that imposes browser version age limits stops up to 10 percent of bad bots.

Also ask yourself if all automated programs, even ones that aren’t search engine crawlers or pre-approved tools, belong on your site. Consider creating a whitelist policy for good bots and setting up filters to block all other bots — doing so blocks up to 25% of bad bots.
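
The three tips above can be sketched as a single filtering rule. This is an illustration under stated assumptions, not Distil's method: the `Visit` record, the `decide` function, the three-year browser cutoff and the two bot names are all hypothetical, and the sketch does not cover how a site would work out a visitor's country or confirm a bot's identity.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Illustrative assumptions, not values taken from the report.
BLOCKED_COUNTRIES = {"CN", "RU"}         # countries where this example company does no business
MAX_BROWSER_AGE_DAYS = 3 * 365           # hypothetical "several years" cutoff
ALLOWED_BOTS = {"Googlebot", "Bingbot"}  # hypothetical whitelist of good bots


@dataclass
class Visit:
    country: str                    # two-letter country code, assumed already resolved
    browser_released: date          # release date of the visitor's browser version
    bot_name: Optional[str] = None  # None for an ordinary (non-automated) visitor


def decide(visit: Visit, today: date) -> str:
    """Apply the geo-fence, the good-bot whitelist and the browser age limit, in that order."""
    if visit.country in BLOCKED_COUNTRIES:
        return "block: geo-fence"
    if visit.bot_name is not None:
        if visit.bot_name in ALLOWED_BOTS:
            return "allow: approved bot"
        return "block: unapproved bot"
    if (today - visit.browser_released).days > MAX_BROWSER_AGE_DAYS:
        return "block: outdated browser"
    return "allow"


today = date(2017, 3, 1)
print(decide(Visit("US", date(2016, 6, 1)), today))
print(decide(Visit("US", date(2012, 1, 1)), today))
print(decide(Visit("US", date(2017, 1, 1), "PriceScraper"), today))
```

Because bad bots can impersonate both users and good bots, a self-declared bot name is weak evidence on its own. A production filter would need some way of verifying the claim.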

Essaid does point to a few bright spots on the horizon. A wider use of two-factor logins, or of biometric logins like voiceprints or fingerprint readers, could dramatically improve login security, he said.

He also noted that good bots are “getting more efficient,” so they are less of a load on your site when they come a-spidering.

Plus, he said, publishers are getting more serious about proactively fighting bot traffic, and it appears that bots are not evolving very fast.

If you don’t have some system in place, Distil warns, you’re in the same position as that young radar operator in December of 1941 — not understanding what is happening and totally vulnerable to a massive attack.

But, even if this report makes you as depressed as a Pearl Harbor attack metaphor might, just keep in mind that the good guys eventually won that war.

Marketing Land – Internet Marketing News, Strategies & Tips
