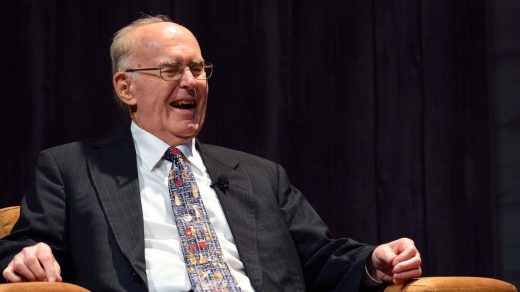

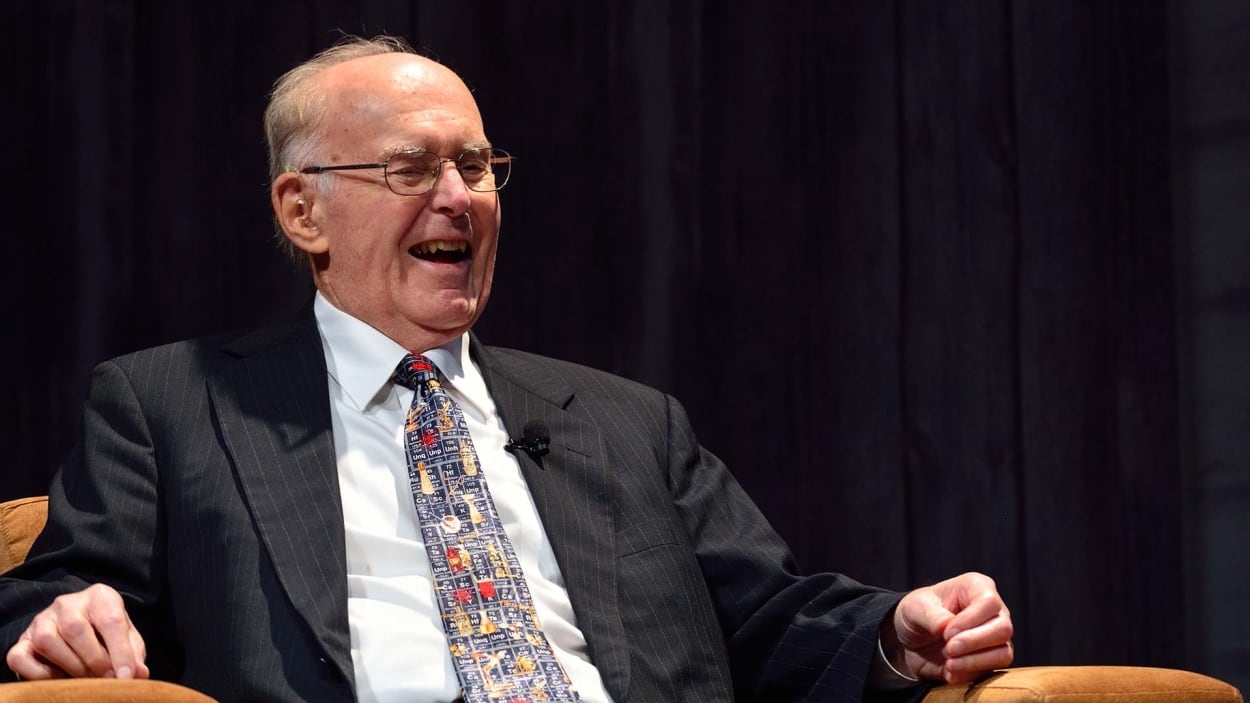

RIP, Gordon Moore, who gave us Moore’s Law—and the world it created

If Gordon Moore had only cofounded Intel, he’d be remembered as one of the tech industry’s giants. But Moore, who died at his home in Hawaii on Friday at the age of 94, also devised Moore’s Law—the 1965 prediction that the number of components that could fit on an integrated circuit would double every year, which he revised to every two years in 1975. Originally made in an article for Electronics magazine, the observation became a talisman for the entire tech industry. And though skeptics have declared Moore’s Law to be dying or dead for years, its power as a bold, optimistic explanation of the extraordinary advances in computing over the past six decades is undiminished.

A beloved elder statesman and noted philanthropist, Moore helped create Silicon Valley long before anyone called it that. In 1957, he was one of the legendary “traitorous eight” employees of pioneering firm Shockley Semiconductor who, at odds with its tyrannical founder William Shockley, left to start Fairchild Semiconductor. But it was Intel, which Moore founded in 1968 with Robert Noyce, that transformed the world. In 1971, the startup shipped the first commercially available microprocessor, the 4004. It also created the 8080, which was used in the first successful PC, MITS’ Altair 8800, in 1975.

When IBM put together its first PC in 1981, it was only logical that it picked an Intel chip—the 8088—to power it. That choice, and IBM’s use of a Microsoft operating system called MS-DOS, launched a platform that lives on—after 42 years of Moore’s Law magic—in today’s PCs.

For decades, Intel’s processor advances, from the 386 to the 486 to the Pentium and beyond, made it the tech industry’s single most indispensable foundational player. Current CEO Pat Gelsinger, who is trying to restore the beleaguered chip maker to those glory days, originally joined the company as a technician in 1979, during Moore’s 12-year tenure as CEO.

George Anders interviewed Moore for the October 2001 issue of Fast Company. In explaining how computers kept getting faster, smaller, and cheaper, Moore brought up a famous law, but not his own. “I call it a violation of Murphy’s Law, which says that if something can go wrong, it will. If you make things smaller, everything gets better simultaneously,” he told Anders.

Moore may have left us, but that observation, like Moore’s Law, remains resonant. It’s a sure bet that people will call on his wisdom to help explain transformative progress that hasn’t even happened yet.
