Robots Are Developing Feelings. Will They Ever Become “People”?

When writing the screenplay for 1968’s 2001, Arthur C. Clarke and Stanley Kubrick were confident that something resembling the sentient, humanlike HAL 9000 computer would be possible by the film’s namesake year. That’s because the leading AI experts of the time were equally confident.

Clarke and Kubrick took the scientific community’s predictions to their logical conclusion, that an AI could have not only human charm but human frailty as well: HAL goes mad and starts offing the crew. But HAL was put in an impossible situation, forced to hide critical information from its (his?) coworkers and ordered to complete the mission to Jupiter no matter what. “I’m afraid, Dave,” says the robot as it’s being dismantled by the surviving astronaut.

If humans had more respect for the emotions that they themselves gave to HAL, would things have turned out better? And will that very question ever be more than an experiment in thought?

The year 2001 came and went, with an AI even remotely resembling HAL looking no more realistic than that Jovian space mission (although Elon Musk is stoking hopes). The disappointment that followed the optimism of the 1960s led to the “AI Winter,” decades in which artificial intelligence researchers received more derision than funding. Yet the fascination with humanlike AI is as strong as ever in popular consciousness, manifest in the disembodied voice of Samantha in the movie Her, the partial humanoid Ava in Ex Machina, and the whole town full of robot people in HBO’s new reboot of the 1973 sci-fi Western Westworld.

Today, the term “artificial intelligence” is ferociously en vogue again, freed from winter and enjoying the blazing heat of an endless summer. AI now typically refers to such specialized tools as machine-learning systems that scarf data and barf analysis. These technologies are extremely useful, even transformative, for the economy and scientific endeavors like genetic research, but they bear virtually no resemblance to HAL, Samantha, Ava, or Dolores from Westworld.

Yet despite the mainstream focus on narrow applications of artificial intelligence—machine learning (à la IBM’s Watson) and computer vision (as in Apple’s new smart Photos app in iOS 10)—there is a quiet revolution happening in what’s called artificial general intelligence. AGI does a little bit of everything and has some personality and even emotions that allow it to interact naturally with humans and develop motivations to solve problems in creative ways.

“It’s not about having the fastest planner or the system that can process the most data and do learning from it,” says Paul Rosenbloom, professor of computer science at the University of Southern California. “But it is trying to understand what is a good enough version of each of those capabilities that are compatible with each other and can work together well in order to yield this overall intelligence.”

Instead of focusing efforts on one or a few superhuman tasks, such as rapid image analysis, AGI puts humanlike common sense and problem-solving where it is sorely lacking today. AGI may power virtual robots like Samantha and HAL or physical robots, though the latter will probably look more like a supersmart Mars rover than Alicia Vikander, the actress who plays Ex Machina’s alluring, deadly Ava. As AGI grows more sophisticated, so will the questions of how we should treat it.

“At some point you have to recognize that you have a system that has its own being in the sense of—well, not in a mystical sense, but in the sense of the ability to take on responsibilities and have rights,” says Rosenbloom. “At that point it’s no longer a tool, and if you treat it as a tool, it’s a slave.”

We’re still a long way from that. Rosenbloom’s AI platform, called Sigma, is just beginning to replicate parts of a human mind. At the university’s Institute for Creative Technologies (ICT), he shows me a demonstration in which a thief tries to figure out how to rob a convenience store and a security guard tries to figure out how to catch the thief. But the two characters are just primitive shapes darting around a sparse 3D environment that looks like the world’s dullest video game.

Robots aren’t people, but the discussion has begun about whether they could be persons, in the legal sense. In May, a European Union committee report called for creating an agency and rules around the legal and ethical uses of robots. The headline grabber was a suggestion that companies pay payroll taxes on their robotic employees, to support the humans who lose their jobs. But the report goes a lot deeper, mostly setting rules to protect humans from robots: from crashing cars or drones, for instance, or simply from invasions of privacy.

The proposal goes sci-fi when it looks at the consequences of robots becoming ever more autonomous, able to learn in ways not foreseen by their creators and make their own decisions. It reads, “Robots’ autonomy raises the question of their nature in the light of the existing legal categories—of whether they should be regarded as natural persons, legal persons, animals, or objects—or whether a new category should be created, with its own specific features and implications as regards the attribution of rights and duties, including liability for damage.”

Why Feelings Matter

Adding emotions isn’t just a fun experiment: It could make virtual and physical robots that communicate more naturally, replacing the awkwardness of pressing buttons and speaking in measured phrases with free-flowing dialog and subtle signals like facial expressions. Emotions can also make a computer more clever by producing that humanlike motivation to stick with solving a problem and find unconventional ways to approach it.

Rosenbloom is beginning to apply Sigma to the ICT’s Virtual Humans program, which creates interactive, AI-driven 3D avatars. A virtual tutor with emotion, for instance, could show genuine enthusiasm when a student does well and unhappiness if a student is slacking off. “If you have a virtual human that doesn’t exhibit emotions, it’s creepy. It’s called uncanny valley, and it won’t have the impact it’s supposed to have,” Rosenbloom says.

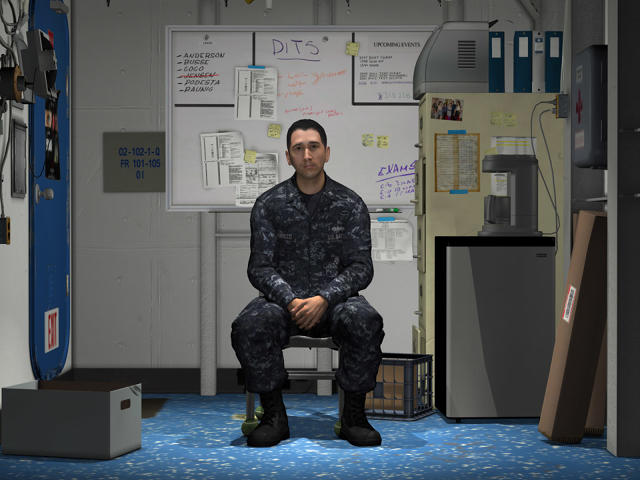

Robots can also stand in for humans in role playing. ICT, which is largely funded by the U.S. military, has developed a training tool for the Navy called INOTS (Immersive Naval Officer Training System). It uses a virtual human avatar in the form of a sailor, Gunner’s Mate Second Class (GM2) Jacob Cabrillo, in need of counseling. Junior officers speak with Cabrillo, who is based on 3D scans of a real person, in order to practice how they would counsel people under their command. About 12,000 sailors have trained in the program since it started in 2012. INOTS draws from a deep reserve of canned replies, but the troubled sailor already presents a pretty convincing facsimile of real emotion.

In other cases, a robot provides the therapy. Ross Mead, founder and CEO of startup Semio, is building a kind of socially astute operating system (yet to be named) for physical robots. Increasingly, robots will serve people with special needs, such as caring for stroke victims or children with autism. They will need empathy to be effective in cases like assisting someone with partial paralysis. According to Mead, “The robot would try and monitor which arm they’re using and say, ‘Hey, I know it’s tough, let me give you some feedback. What if you try this one? I know it’s going to take a little while, but it’s going to be okay.’”

Robots can show emotions without actually having emotions, though. “Robots are now designed to exhibit emotion,” says Patrick Lin, director of the Ethics + Emerging Sciences Group at California Polytechnic State University. “When we say robots have emotion, we don’t mean they feel happy or sad or have mental states. This is shorthand for, they seem to exhibit behavior that we humans interpret as such and such.”

A good example is the Japanese store robot Pepper, the first robot designed to recognize and respond to human emotions, though its abilities are pretty basic. By analyzing video and audio, the adorable bot can recognize four human emotions: happiness, joy, sadness, and anger. Pepper tailors its responses accordingly, making chitchat, telling jokes, and encouraging customers to buy. More than 10,000 of the robots are at work in Japan and Europe, and there are plans to bring them to the U.S. as well.

Something More Than Feelings?

There are arguments for giving robots real emotions—and not only to create a more relatable user experience. Emotions can power a smarter bot by providing motivation to solve problems. Los Angeles-based programmer Michael Miller is building yet another AI architecture, called Piagetian Modeler. It’s inspired by the work of psychologist Jean Piaget, who studied early childhood development and how disruption of the environment leads to surprise and the need to investigate. “When you’re in harmony with it, when you’re at one with your environment, you’re not learning anything,” says Miller. “It’s when you have troubles or problems, when contradictions arise, when you expect one thing, and you’re surprised, that’s when you begin to learn.”

This ability could make more capable autonomous robots that can think for themselves when unforeseen obstacles arise far from home, like on Earth’s sea floor, on the surface of Mars, or in the ocean under the icy surface of Jupiter’s moon Europa (where scientists believe life may exist). HAL may yet get to Jupiter, and before the humans.

“Part of the whole emotional system are what are called appraisals,” says Rosenbloom. “They’re: How do you assess what’s going on in various ways so you can determine the kind of surprise and the level of surprise and the level of danger?” Those appraisals then lead to more emotions in a process of reacting to the environment that’s more flexible and creative than hard, cold logic. “[Emotion is] there to force you to work in certain ways which you don’t realize the wisdom of, but over the development of your species or whatever, you determine it’s important for your survival or success,” he says.

That sounds like a stretch to Bernie Meyerson, IBM’s chief innovation officer, when I ask him about these ideas. “What you just described is work prioritization,” he says. “If you couch it as, it’s got feelings, it feels an urgency—no, it just prioritized my job over yours.”

“This is the thing about artificial consciousness,” says Jonathan Nolan, co-creator of HBO’s Westworld. “It’s that the programmers themselves don’t want to talk about it because it’s not helpful. I get the feeling they consider it a bit of a sideshow.”

Will It Ever Be “2001”?

While some tech and scientific luminaries like Elon Musk and Stephen Hawking worry about computers taking over, many people have more prosaic worries, like that Siri or Alexa won’t understand their accent. There’s still a wide gap between a chatbot and Samantha from Her. It’s unclear when, if ever, the gap could be crossed. “Nobody knows when artificial general intelligence is going to happen,” says Miller. “It’s going to happen gradually and then suddenly.” Technical polymath and ardent futurist Ray Kurzweil has predicted that the Singularity, the point at which machines outstrip all human abilities, will arrive by the 2020s. Rosenbloom is less sanguine. “We never know what hard problems we haven’t run into yet,” he says. “I’m pretty confident we’ll do it within a hundred years. Within 20 years? I don’t have much confidence at all.”

Others say a conscious AGI will never happen. “It’s a pretty high bar to say something actually has a right,” says Lin. “Today, we would think that in order to have a right, you would have to be a person.” Lin cites the work of philosopher Mary Anne Warren, who listed five demanding criteria for personhood: consciousness, reasoning, self-motivated activity, capacity to communicate, and the presence of self-concepts and self-awareness.

Warren’s criteria are tricky, though. She used them to make the moral case in favor of abortion, saying that a fetus exhibited none of these abilities. Clearly, many people disagree with her definition of personhood. Also, Warren said that at least some, but not necessarily all, of these criteria are required. An AI with emotional drivers could reason, be self-motivated, and communicate. Consciousness and self-awareness are the intellectual and philosophical stumbling blocks.

“No robot in the near or foreseeable future that I’m aware of has any degree of consciousness or mental state,” says Lin. “Even if they did, it’s almost unprovable.” Lin cites the philosophical problem of other minds: I can satisfy myself that I am conscious and exist. As Descartes said, “I think, therefore I am.” But I can’t know for certain that anyone else has a consciousness and inner life. “If we can’t even prove other humans have consciousness, there’s no chance we’re going to prove a machine, with a different makeup, composition has any degree of consciousness.”

Lisa Joy, who co-created Westworld with Nolan (her husband), agrees that we have a poor understanding of consciousness or sentience. “One of the most consistent defining qualities of sentience is that we define it as human, as the thing that we possess that others do not,” she says with a chuckle.

Miller isn’t sure if future robots will reach the level of adult humans. Neither is Rosenbloom. “What you need, in my view, is actually a theory of not only intelligence, but of what kinds of rights and responsibilities to associate with different forms of intelligence,” says Rosenbloom. “So already we know that children have different rights and responsibilities than adults and animals likewise.”

Lin, holding to the point that only persons have rights, nonetheless recognizes certain responsibilities toward other creatures. “Just because you have a duty does not imply a right,” he says. “I can talk about a duty not to kick my cat without claiming that my cat has rights.” This could carry over to a robotic cat or dog as well. Even if it isn’t alive, in the traditional sense, there’s a duty not to abuse it. Or is there?

“You realize that people are coded differently on this sort of empathy scale,” says Joy. “Even among friends that we asked, they were all over the spectrum when they thought it was transgressive to do something [cruel] to a robot.”

Fast Company , Read Full Story