Scientists are building a detector for conversations likely to go bad

It’s a familiar pattern to anyone who’s spent any time on internet forums: a conversation quickly degenerates from a mild disagreement to nasty personal attacks.

To help catch such conversations before they get to that point, researchers at Cornell University, Alphabet think tank Jigsaw, and the Wikimedia Foundation have developed a digital framework for predicting when online conversations will “go awry.”

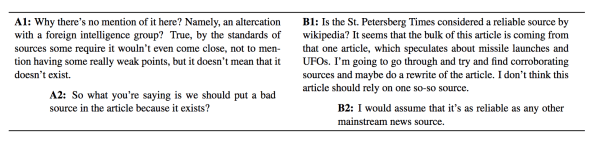

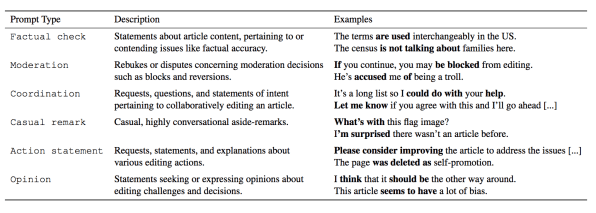

In a paper slated to be presented at the Association for Computational Linguistics conference in July, the researchers point out “linguistic cues” that predict when conversations on Wikipedia talk pages, where editors discuss the content of articles, will or won’t degenerate into personal attacks.

Perhaps unsurprisingly, some of the indicators of conversations that would stay civil include markers of basic politeness that any kindergarten teacher would approve: saying “thank you,” opening with polite greetings, and using language signaling a desire to work together. People in those conversations were also more likely to hedge their opinions, using phrases like “I think” that seem to indicate their thoughts aren’t necessarily the final word.

On the other hand, conversations that open with direct questions or start sentences with the word “you” are more likely to degenerate.

“This effect coheres with our intuition that directness signals some latent hostility from the conversation’s initiator, and perhaps reinforces the forcefulness of contentious impositions,” the researchers write.
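To make those cues concrete, here is a minimal sketch of how a program might flag them in a single comment. It is not the researchers' code: the word lists, the `extract_cues` function, and the feature names are assumptions invented for illustration, and treating any opening sentence that ends in a question mark as a "direct question" is only a rough proxy for what the paper measures.

```python
import re

# Hypothetical cue patterns for illustration only; the study's real
# lexicons and feature definitions are not reproduced here.
GRATITUDE = re.compile(r"\b(thank you|thanks)\b", re.IGNORECASE)
GREETING = re.compile(r"^\s*(hi|hello|hey)\b", re.IGNORECASE)
HEDGE = re.compile(r"\b(i think|i believe|it seems|perhaps|maybe)\b", re.IGNORECASE)
# Naive sentence splitter: break after ., ! or ? followed by whitespace.
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")


def extract_cues(comment: str) -> dict:
    """Return a dict of boolean cues found in one comment."""
    sentences = [s.strip() for s in SENTENCE_SPLIT.split(comment.strip()) if s.strip()]
    first = sentences[0] if sentences else ""
    return {
        "gratitude": bool(GRATITUDE.search(comment)),
        "greeting": bool(GREETING.search(comment)),
        "hedge": bool(HEDGE.search(comment)),
        # Rough proxy (assumption): the opening sentence is a question.
        "opens_with_question": first.endswith("?"),
        # Any sentence that begins with the word "you" (also matches "you're").
        "sentence_initial_you": any(
            re.match(r"you\b", s, re.IGNORECASE) for s in sentences
        ),
    }


civil = extract_cues("Hi, thanks for the edit. I think the second source is stronger.")
tense = extract_cues("Why did you delete my section? You clearly did not read the source.")
print(civil)  # gratitude, greeting and hedge are True; the other two are False
print(tense)  # opens_with_question and sentence_initial_you are True; the rest are False
```

Flags like these would only be inputs to a trained model. On their own they say nothing about how much weight each cue deserves.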

A computer model trained to recognize those types of features was able to spot conversations that would turn bad with 61.6% accuracy based on the initial comment and first reply alone, the researchers say, and its accuracy stayed high even in cases where it took a few exchanges for the discussion to turn nasty. Human annotators, by contrast, were right about 72% of the time.

Future research could look deeper into conversations to try to spot the moment just before things turn nasty, or to identify strategies that keep them civil even when things get heated, the scientists write.

“Indeed, a manual investigation of conversations whose eventual trajectories were misclassified by our models–as well as by the human annotators–suggests that interactions which initially seem prone to attacks can nonetheless maintain civility, by way of level-headed interlocutors, as well as explicit acts of reparation,” they write. “A promising line of future work could consider the complementary problem of identifying pragmatic strategies that can help bring uncivil conversations back on track.”
