Scientists want to define just how smart robot surgeons are

For roughly three decades, medical robots have assisted surgeons in the operating theater. They provide a steady hand and can make tiny incisions with pinpoint accuracy. But as robotics improves, a new question has emerged: How should autonomous robots be treated? The US Food and Drug Administration (FDA) approves medical devices, while medical societies monitor doctors. A robot that can operate on its own falls somewhere in between. To help, Science Robotics has produced a scale for grading autonomy in robot-assisted surgery. If adopted, it could help regulators decide when and how machines should be treated like humans.

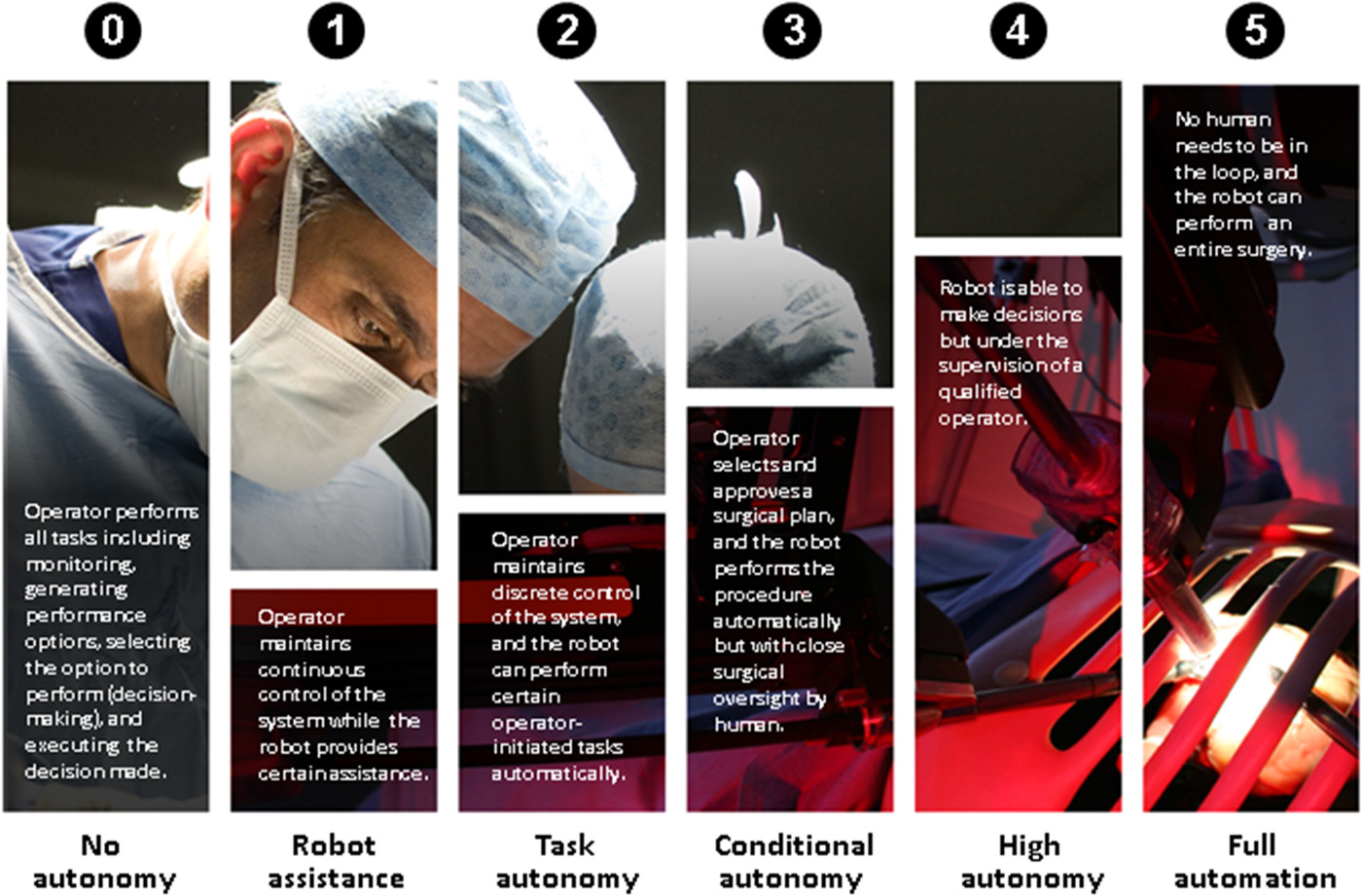

The system ranges from level zero to level five. Level zero describes tele-operated robots that have no autonomous abilities. Next comes level one, where a robot can provide some “mechanical guidance” while a human has “continuous control.” Level two describes a robot that can handle small tasks on its own, chosen and initiated by an operator. Level three allows the robot to create “strategies,” which a human has to approve first. Level four means the robot can execute decisions on its own, but with the supervision of a qualified doctor, while level five grants full autonomy.
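For readers who think in code, the scale maps naturally onto an ordered enumeration. The snippet below is only an illustrative sketch: the member names and the `HUMAN_ROLE` table are made-up labels that paraphrase the descriptions above, not terminology from Science Robotics, and level five's entry simply assumes that "full autonomy" means no human involvement is required.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical labels for the six levels described in the article."""
    NO_AUTONOMY = 0           # tele-operated, no autonomous abilities
    MECHANICAL_GUIDANCE = 1   # robot guides, human keeps continuous control
    TASK_AUTONOMY = 2         # small tasks chosen and initiated by an operator
    STRATEGY_PROPOSAL = 3     # robot creates strategies, human approves first
    SUPERVISED_DECISIONS = 4  # robot executes decisions under supervision
    FULL_AUTONOMY = 5         # full autonomy


# What the human does at each level, paraphrased from the text above.
HUMAN_ROLE = {
    AutonomyLevel.NO_AUTONOMY: "operates the robot remotely",
    AutonomyLevel.MECHANICAL_GUIDANCE: "keeps continuous control",
    AutonomyLevel.TASK_AUTONOMY: "chooses and initiates each task",
    AutonomyLevel.STRATEGY_PROPOSAL: "approves the strategy first",
    AutonomyLevel.SUPERVISED_DECISIONS: "supervises as a qualified doctor",
    AutonomyLevel.FULL_AUTONOMY: "none required (assumed)",
}


def human_role(level: int) -> str:
    """Return the human's role for a level from 0 to 5."""
    return HUMAN_ROLE[AutonomyLevel(level)]


if __name__ == "__main__":
    for level in AutonomyLevel:
        print(f"Level {level.value}: {human_role(level)}")
```

Because `IntEnum` members compare like integers, a check such as `level >= AutonomyLevel.STRATEGY_PROPOSAL` is one way to flag the levels at which the robot, rather than the operator, starts planning.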

Science Robotics doesn’t pretend to have all the answers. It says the overlap between the FDA and medical associations is “challenging” and will require an “orchestrated effort” from all parties. The research journal is also concerned about the effect autonomous robots will have on human surgical skills. If a machine becomes the dominant choice, it’s possible that more traditional techniques — those that involve a doctor’s own hands — will be lost, or at least downplayed in training courses. As with self-driving cars, there’s also an issue of trust. A machine might be more efficient, but what happens when it needs to make a tough, ethically murky call? Who is to blame if a patient or family member disagrees with the decision?
