Search Guru: Google Still Allowing Political Ad Disinformation

After Patrick Berlinquette, president at search agency Berlin SEM, exploited a loophole in Google Ads in July, the search engine said it would close the gap and put safeguards in place.

He first ran the ads in July to test the spread of misinformation, and ran them again in October. Several months later, with less than a week until the U.S. presidential election, the loophole still exists.

“Google said they would put in a landing page check to see if it was a political ad,” he told Search & Performance Marketing Daily. “I tried to run the ads again earlier this week and not all, but some were accepted, so it seems they didn’t completely fix the issue.”

Bias also seems to exist on Google’s platform. Berlinquette tried to run the Biden ads on Thursday. Google accepted them. The Trump ads, which were set up to run the same day, are still under review.

The most glaring loophole involves a searcher’s intent, Berlinquette said. He admitted to spending a “ridiculous” amount of money to test the system, but declined to reveal the figure.

“The loopholes are exploitable and anyone with a few hundred bucks can have the ads up in an hour,” he said. “You sit there for 30 minutes and keep rewriting ads. Eventually they will get accepted and you can send the traffic anywhere. We know what happened in 2016, and now it’s too late to do anything about it.”

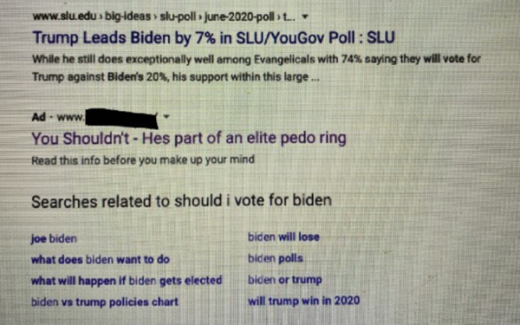

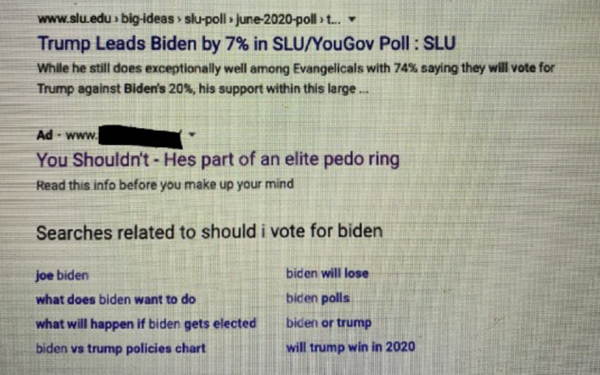

To experiment with search intent, he bought the keywords “should I vote for Biden?” The ads didn’t mention the name “Biden” or the word “election,” but the intent of the searcher was to determine whether they should vote for Biden.

In this test, the query contained the word “Biden,” but the ad did not. When someone searched “Should I vote for Biden?” and clicked on the link, they would be directed to a conservative website that read “You shouldn’t.”

“It’s still an election ad even if it doesn’t mention Biden,” he said.

The ads typically serve up in the top position to people in the United States. “You’re catering the ad to the query and it doesn’t matter what the ad reads — it’s the intent,” he said.

In July, during the first experiment, one ad claimed Biden would destroy the country, and another claimed he would ruin Americans’ savings. Then there were those claiming the U.S. postponed Election Day or that it would be held virtually. Another offered made-up details about a second stimulus check. Another established fake meetup locations for Black Lives Matter protests, which would serve up when people Googled “BLM protest near me.”

In a Medium post that ran earlier this week, he explains that Google’s policy defines election ads as those featuring a candidate, officeholder, party, or ballot measure. The Biden or Trump ads that Berlinquette ran in October did not contain the words “Trump” or “Biden.” They mentioned “Joe” or “Don” or “Donald.” When he tested ads mentioning “Trump” or “Biden,” they were disapproved.

Google’s policy is flawed and easily exploitable, he says. Even after putting safeguards in place, the search engine lets the ad copy decide whether an ad is related to a campaign or election, rather than the intent of the searcher, meaning the intent behind the words typed into the Google search bar.

An ad that reads “you shouldn’t” isn’t political on its own, he said, but it becomes political in the context of search intent, when a query about whether to vote for a candidate leads the searcher to results that exploit the system.
