Siri’s Cautious Expansion To Third-Party Apps Is Vintage Apple

Almost immediately after Apple introduced Siri in 2011, people started speculating about when the virtual assistant might open up to third-party apps.

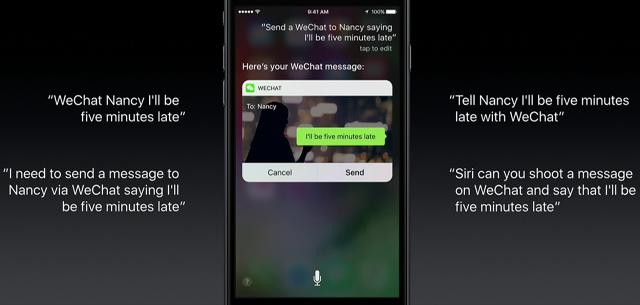

Five years later, Apple is making it happen. Come this fall, Siri on iOS will let you call a car from Lyft, dictate messages directly into Slack, and start a video chat in Skype. And these aren’t specific partnerships; with iOS 10, Apple will allow any app developer to tap into Siri voice commands—within limits.

In a classic Apple maneuver, Siri’s skills are expanding slowly, and under a strict set of rules. It’s not exactly an open system, but it may be more useful in the long run than giving developers free rein.

Limited Skillset By Design

At the outset, apps can only work with Siri if they fall into one of six specific categories: audio or video calling, messaging, payments, photo search, workouts, and ride booking. Want to use Siri to queue up a playlist in Spotify, or add to your grocery list in Wunderlist? You’re out of luck for the foreseeable future.

The narrow focus is reminiscent of the way Apple introduced multitasking six years ago. With iOS 4, Apple laid out six specific cases in which apps could run in the background. These uses included playing music and getting turn-by-turn directions, but excluded background refreshing of email apps or uploading bulk files to cloud storage. Only by making those trade-offs did iOS remain battery- and resource-efficient.

With Siri, Apple is trading capability for consistency. Third-party apps are only allowed to provide data and some visuals; Apple will still control the flow of conversation, and the way certain information appears on the screen. The catch is that Apple can’t expand to new categories, like to-do lists or directions, until it figures out how those interactions should work.

“That’s very much Apple’s way,” says Charles Jolley, the founder of an information-finding virtual assistant called Ozlo. “They tend to open things up bit by bit so they can make sure it’s behaving and doing the right things for people.”

As for why Apple started with the six categories it did, Jolley believes these are low-hanging fruit. Requesting a car to your location is a fairly simple command, as is initiating a workout. And in Jolley’s experience, those are popular types of queries for voice assistants anyway.

“Anyone in the industry who’s had one of these assistants knows, you just see this as the most common stuff people want to do with their phones,” Jolley says. “We’ve seen that ourselves with our own data as well.”

The Pizza Problem

For evidence of how a more open system would work, just look to Alexa, the voice assistant that lives inside Amazon’s Echo speaker. Beyond Alexa’s built-in skills (which work fairly well) and a set of developer tools for smart home controls, Amazon’s system is a free-for-all, with no restrictions for developers, and no attempts to organize the many types of interactions they’ve created.

As a result, every third-party voice action in Alexa requires specific syntax. The magic words to order a pizza from Domino’s, for instance, are “Alexa, open Domino’s and place my Easy Order.” Deviate from that structure, and Alexa won’t know what you meant. Realistically, users aren’t going to memorize more than a few trigger phrases, which severely limits Alexa’s usefulness when talking to third-party services.

That’s not to say an open voice assistant is without advantages. To create an Alexa skill, Domino’s simply had to hook into its existing “Easy Order” system—in which users create a favorite order through the company’s apps or website—and set up a voice command to trigger it.

By comparison, a generalized “food delivery” category in Siri would demand much more complexity, says Kelly Garcia, vice president of development for Domino’s. At the very least, every restaurant would need its own “Easy Order” mechanism that Siri could trigger. In a worst-case scenario, Apple would have to handle an endless number of variables across countless restaurants, from the size of the pie to the distribution of toppings to the type of crust. And that’s just for one kind of food.

“If they want to own that entire dialogue, they have to build in all of the rule sets to be able to accommodate the restaurant category,” Garcia says. “As soon as you start peeling back the onion a little bit, you find out, wow, there’s some big challenges there.”

Formative Years

Garcia believes the way forward is a hybrid of an open system like Alexa and a tightly controlled system like Siri. The top-level assistant would steer you to your eatery of choice using its knowledge of restaurants, but Domino’s would still control the conversation when it’s time to order.

“I would like to see us own the dialogue model of our customers, because we’re servicing them, and we know best how to service a pizza customer,” he says. “I would like [virtual assistants] to understand the customers in a broader context, so understanding how customers behave above those industry layers.”

Getting to that point, however, is more complicated than it might seem. At the moment, assistants like Siri and Alexa just aren’t smart enough to handle the decision-making behind picking a pizza place, booking a hotel, choosing a flight, or finding a doctor. Jolley sees the current state of voice assistants as roughly analogous to Yahoo in the mid-1990s: a giant directory of services that’s only useful when you know what you want.

“As a user, you really want an expert that you can turn to. You don’t want to have to go talk to 10 different services, even if it’s just through one interface,” Jolley says. “You want to just turn to one expert and get advice and have an answer.”

Jolley believes the next step for virtual assistants will be to index large swaths of information, and then train the AI to make decisions on that information. (It’s worth noting that Jolley’s company, Ozlo, is a rough sketch of this idea, culling from multiple food-related services to suggest a place to eat.)

Does that mean Siri will eventually need to start from scratch? Not exactly.

As Jolley points out, last year Apple started allowing developers to index the data inside their apps, so that it can be linked to and from other parts of iOS. Siri’s new skills seem like an extension of that concept, tapping into large amounts of app data to create more useful links.

Getting Siri to the next level, where it makes informed decisions based on that data, will take time, but Apple appears to be laying the groundwork by building deep connections to apps throughout iOS. In the long run, that’s far more important than whether or not you’ll get to control Spotify by voice next fall.

“The right way to think of this is, it’s just the first volley in a pretty long series of developments that will happen over the next couple of years,” Jolley says. “And definitely, Apple is not behind here.”

Fast Company
