Smartphones Are Leading The Global Charge Against Blindness

“Seven hundred years after glasses were invented there are still 2.5 billion people in the world with poor vision and no access to vision correction,” says Hong Kong philanthropist James Chen. Chairman of his family’s Nigeria-based manufacturing company, Wahum Group, Chen is funding a contest called the Clearly Vision Prize that will award a total of $250,000 to projects that improve eyesight, especially in poor countries. Thirty-six semifinalists were announced this week (the five winners will be awarded September 15). Among the contenders: 3D-printed eyeglass frames, drones that deliver medical supplies, and several smartphone-based technologies. Some of the smartphone tools help nonexperts test vision, and one uses artificial intelligence to “see” for blind people.

The Clearly Vision semifinalists represent just a sampling of the smartphone projects fighting vision loss, a growing field that is bringing critical care to remote regions far from hospitals and doctors’ offices. The more people I spoke to for this story, the more apps and mobile gadgets I discovered. Vision-care projects can use smartphone screens to display eye-test images, cameras to spot damage, computing power to process images, and wireless connections to link fieldworkers and remote specialists.

A Seeing-Eye Phone

The most ambitious technology is from the Melbourne, Australia-based Aipoly, whose app recognizes objects and verbalizes descriptions in seven languages. Cofounders Alberto Rizzoli and Marita Cheng were inspired by their own experiences with blind friends who lost sight midlife. “They still remember the physical world very well. They still remember the colors of the plants and trees around them, and they’ve been deprived of this,” Rizzoli says. He particularly remembers an older friend. “When I was a kid, I would walk with this person, and he would ask me to tell him what was around in Tuscany, where we spent our summers.”

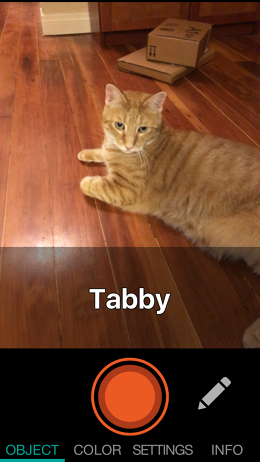

Other vision apps, such as TapTapSee, use cloud-based servers to process images, which requires a wireless connection and results in a several-second delay. Aipoly runs on the phone, using AI called a “convolutional neural network.” It doesn’t need a Wi-Fi connection and works much faster than cloud-based apps; it’s able to identify up to seven images per second. Aipoly utilizes both the phone’s CPU and graphics processing unit (GPU) to perform many tasks simultaneously. “Technology will just get better and better, so eventually these AI-type applications will be ubiquitous,” says Nardo Manaloto, an AI engineer and consultant focused on health care (who is not affiliated with Aipoly).

When I tried it, Aipoly correctly recognized things like a microwave oven and a champagne glass—well, actually it just said “glass.” It identified an apple as “fruit.” I was impressed when several times it correctly identified my friend’s cat as “tabby,” although it did once call it “elephant.”

The next version of the app, expected by the end of the year, will boost recognition abilities from 1,000 objects to 5,000. It will also cut power use and expand support beyond the iPhone 6 and 6s, to older iPhones and a broad range of Android phones. Already, users can teach the app new objects, with the data uploaded and distributed in updates. Aipoly is also testing smartphone-connected glasses fitted with a camera so people don’t have to hold up their phones.

An Rx Over Text

Aipoly helps people with severe and incurable vision loss. But most blindness is curable with a very simple device: glasses. Ninety percent of blind and visually impaired people live in poor countries, and 43% of them have a refractive error: They are nearsighted or farsighted. Prices are dropping to around $2.50 or less for a basic pair of glasses in developing countries. A bigger problem is getting professionals and their equipment out to evaluate people in those places.

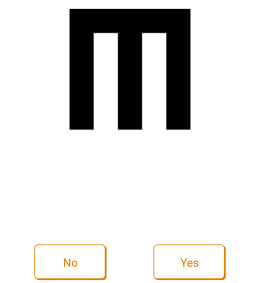

Another of Chen’s semifinalists is Vula Mobile, a diagnostic app that links nurses and aid workers in the field to doctors. Holding the phone about 6 feet away from the patient, the user activates the app, which displays a letter “E” facing different directions. It moves to ever-smaller versions of the letter until the patient can no longer determine the direction. I got results of 20/20 in my left eye and 20/40 in my right. I also answered questions, such as whether I had any pain. I filled in a comments section and took a photo of each eye.
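To make the shrinking “E” concrete, here is a minimal sketch of how large the letter would need to be on screen for each line of such a test. It rests on the standard eye-chart convention that a 20/20 letter spans 5 arcminutes of visual angle; the function name and the list of test lines are illustrative assumptions, not details of how Vula Mobile actually works.

```python
import math

# Standard eye-chart convention: a 20/20 letter subtends 5 arcminutes.
ARCMIN_AT_20_20 = 5.0
FEET_TO_METERS = 0.3048


def optotype_height_mm(distance_m: float, snellen_denominator: int) -> float:
    """Height of the letter for a 20/<denominator> line viewed at distance_m.

    Hypothetical helper for illustration; not taken from any app.
    """
    # A 20/40 letter is twice the angular size of a 20/20 letter, and so on.
    angle_arcmin = ARCMIN_AT_20_20 * snellen_denominator / 20
    angle_rad = math.radians(angle_arcmin / 60)
    return 2 * distance_m * math.tan(angle_rad / 2) * 1000


if __name__ == "__main__":
    six_feet_m = 6 * FEET_TO_METERS
    # Step down through progressively smaller lines, as the test does.
    for denominator in (200, 100, 40, 20):
        height = optotype_height_mm(six_feet_m, denominator)
        print(f"20/{denominator}: letter about {height:.2f} mm tall")
```

At 6 feet the 20/20 letter comes out under 3 mm tall, which suggests why a phone screen is big enough to serve as an eye chart.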

Pressing a button sends results to a doctor—in my case, to Will Mapham, the app’s creator, in Cape Town, South Africa. He conceived of the app while volunteering at a clinic in Swaziland called Vula Emehlo—meaning “open your eyes” in the Siswati, Zulu, and Xhosa languages. Mapham texted me through the app in a few minutes, and we discussed some dark flecks and blurry spots in my vision. Such dialog allows doctors to guide remote nurses or volunteers through tests and treatments. “This is a way of having local health workers providing specialist care wherever their patient is,” Mapham says during a follow-up Skype call. He estimates that up to 30% of patients can get treatment without making the long journey to the nearest hospital.

Mapham and his cofounder Debré Barrett (who funded the project) hired programmers and launched Vula Mobile in 2014. Since then, they have further developed the app so that it covers eight other medical areas (and counting), including cardiology, HIV, dermatology, and oncology. They also plan to expand beyond South Africa, to Namibia, Rwanda, and elsewhere.

Doctor In An App

A third semifinalist, the Israeli startup 6over6 (i.e., the metric version of 20/20), uses a trick of physics to let users measure their prescription. Its app, GlassesOn, hasn’t launched yet, but CEO Dr. Ofer Limon walked me through how it works. (He also showed a simulation, on the condition we not publish it.) GlassesOn displays a pattern of red and green lines that blur together to form yellow for people who are nearsighted. But held at a specific distance from each eye, which varies by patient, the lines become sharp, and true colors emerge. Users move the phone back and forth to find the right distance for each eye, which the app uses to calculate the lens prescription. The user holds up a credit card, student ID, or any card with a magnetic strip (since they are the same size everywhere) so the app can determine distance by how big the card appears.
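The two measurements described above can be sketched with textbook optics. 6over6 has not published its method, so everything here is an assumption made for illustration: a pinhole-camera model for estimating distance from the card's apparent width, the standard 85.60 mm width of an ID-1 card, and the simple-myopia rule that the correcting lens power is the negative reciprocal of the farthest distance (in meters) at which the eye sees sharply. The function names and the focal-length figure are hypothetical.

```python
# Width of a standard ID-1 card (credit cards, many ID cards), ISO/IEC 7810.
CARD_WIDTH_MM = 85.60


def distance_from_card_mm(focal_length_px: float, card_width_px: float) -> float:
    """Pinhole-camera estimate of how far the card is from the camera.

    focal_length_px is the camera's focal length expressed in pixels,
    a calibration value assumed to be known for the phone model.
    """
    return focal_length_px * CARD_WIDTH_MM / card_width_px


def myopic_sphere_diopters(far_point_mm: float) -> float:
    """Lens power for simple nearsightedness: -1 / far point in meters."""
    return -1000.0 / far_point_mm


def round_to_quarter_diopter(power: float) -> float:
    """Lenses are commonly specified in 0.25-diopter steps."""
    return round(power * 4) / 4


if __name__ == "__main__":
    # Hypothetical reading: the card appears 321 px wide to a camera
    # whose focal length is 1500 px.
    distance = distance_from_card_mm(focal_length_px=1500, card_width_px=321)
    power = round_to_quarter_diopter(myopic_sphere_diopters(distance))
    print(f"Sharpest at about {distance:.0f} mm, so roughly {power:+.2f} D")
```

A real refraction also has to handle farsightedness and astigmatism, which this sketch ignores.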

A prescription, even from an app, is all opticians in some countries require to make a pair of glasses. In the U.S., 6over6 is applying to the Food and Drug Administration to certify the accuracy of the program. If it gets FDA approval, GlassesOn could actually perform some work that currently requires a doctor. “GlassesOn does not aim to replace the ophthalmologist or optometrist, as we limit our services only for refraction purposes and for healthy users only,” Limon tells me in an email.

Tackling Serious Eye Diseases

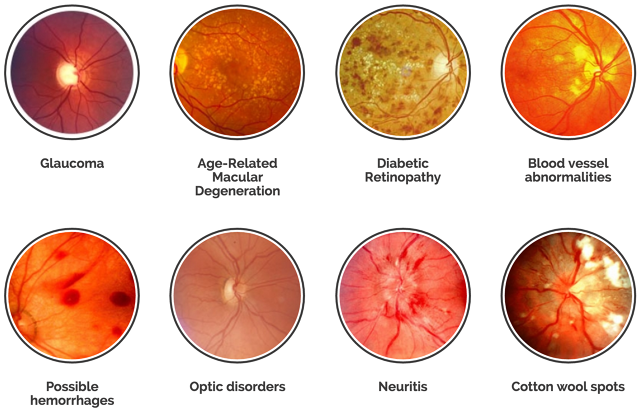

More complex vision problems like cataracts—the clouding of the eye’s lens—can’t be diagnosed by an eye chart. This is where the cell phone’s camera comes in, to photograph damage to the cornea at the front of the eye or to the lens. Getting to the retina—the nerve tissue at the back of the eye that captures images—is more difficult and requires special lenses. Retina ailments such as diabetic retinopathy and age-related macular degeneration are a growing concern as the population ages and diabetes cases soar. Retinas can also break down due to rare genetic conditions like Stargardt Disease (which causes the flecks and blurry spots in my vision).

British ophthalmologist Andrew Bastawrous leads an organization named Peek Vision Foundation that’s developed a smartphone app and lens attachment, Peek Retina, to capture sharp images of the back of the eye. Peek isn’t among the Clearly Vision semifinalists, but James Chen has donated 50,000 pounds (about $65,000) in seed funding. (Peek raised over twice as much on Indiegogo to manufacture the device.) Deliveries have been delayed, but prototypes have been extensively tested with tens of thousands of people in Africa; tests are beginning in India in a few months.

“We had to do a complete redesign to make it something that can work on almost every smartphone,” says Bastawrous. Prototypes he showed at his 2014 TED talk were 3D printed for specific phone models. The final version will be traditionally manufactured. Bastawrous expects it to sell for “well under” $500 when it arrives in early 2017.

“Peek Retina is only a small part of what we do,” says Bastawrous. The main task is coordinating care. While we were talking on Skype, Bastawrous sent me screenshots from his computer that showed real-time progress in screening students in Botswana and referring them to specialists. And while Peek is the best-known project of its kind, devices by for-profit companies (also not part of the Clearly Vision awards) are already available. They include the $435 D-Eye and $399 Paxos Scope. Another company, oDocs Eye Care, is launching a $299 product, called visoScope, in the fall. “We’re not going to say that people have to use Peek Retina to use this,” Bastawrous says, noting that the other devices work in Peek’s system.

Future plans for Peek include image recognition, but Bastawrous thinks that will be limited to pre-screening the eye photos that human experts will still have to view. “[AI] is one of those things—because it’s doable, people are doing it,” he says. “It does have value, but in context.” There are bigger challenges, he says, like preventing conditions that cause blindness. “I would love to see the great brains that go into AI investing in preventing people from becoming diabetic in the first place,” he says. “The impact would be a thousand-fold more.”

Fast Company
