Social networks are broken. Here’s the secret to rebuilding trust

By Ram Fish

After Facebook employees led a rare revolt over the company’s refusal to take down misleading and violence-promoting posts by Donald Trump, Mark Zuckerberg said on Friday that the company would rethink the way it moderates such content. This, Zuckerberg wrote in a Facebook post, will include looking at new approaches other than “binary leave-it-up or take-it-down decisions.”

Facebook may have been led in this direction after Twitter took the lead last week by labeling two Donald Trump misinformation tweets with links to fact-check information. By choosing to augment–not delete–the Trump tweets, Twitter may have pointed the way forward for moderating political content in a world where much of our political and social discourse, for better or for worse, takes place on social media.

In the same way we rely on labels to instill confidence in our food, medicine, and other consumer goods, we need a similar labeling system to instill trust in the news, video, people, and organizations we encounter in social networks. I propose the following system to help social media companies get started down that road.

Enabling trust in news items

While Twitter was right to label Donald Trump’s tweets, its action wasn’t perfect. The company unilaterally decided which tweets to augment and how to augment them. That approach risks turning social media companies into publishers that make editorial decisions, which could invite a new and far more invasive kind of regulatory regime.

There is a way for social networks to promote trust in news and information on their platforms without themselves acting as “arbiters of truth,” as Zuckerberg puts it.

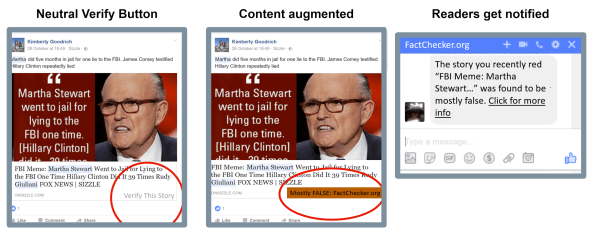

For any media published, platforms should overlay the results (if available) of third-party fact-checks. This overlay should include the fact checker’s identity and an assessment (on a 1-5 scale) of the media’s accuracy. It should also offer an easy way for the user to review a more detailed fact-check report.

If a fact checker finds a post to be mostly misleading or false, the social media platform should notify any user who viewed the media of the assessment.

If no fact-checking information is available for an item, the platform should allow users to request a fact check. When media is first displayed to the users, a button would appear that allows consumers to ask for verification.

If enough people request a fact-check, or if the story is shared enough times, the story would be submitted to an independent fact checker (such as FactCheck.org) for review. A few hours later, after the fact checker has finished its review and delivered its assessment, the post would be updated with a label visible to all users.

Importantly, if a news story is found to be false, the social media company should send a notification of that fact to any users who had already read it.
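The workflow described above (request, threshold, review, label, notify) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the thresholds, the class and function names, the reading of the 1-5 scale (1 = false, 5 = accurate), and the cutoff for "mostly misleading or false" (a rating of 2 or lower) are all hypothetical and are not specified in the proposal.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Hypothetical thresholds: the proposal only says "enough" requests or shares.
REQUEST_THRESHOLD = 100
SHARE_THRESHOLD = 1000


@dataclass
class Post:
    post_id: str
    viewers: Set[str] = field(default_factory=set)     # users who saw the post
    requesters: Set[str] = field(default_factory=set)  # users who asked for a check
    shares: int = 0
    submitted: bool = False          # already sent to a fact checker?
    checker: Optional[str] = None    # identity of the fact checker
    rating: Optional[int] = None     # assumed scale: 1 (false) to 5 (accurate)

    def needs_review(self) -> bool:
        """True once enough requests or shares have accumulated."""
        return not self.submitted and (
            len(self.requesters) >= REQUEST_THRESHOLD
            or self.shares >= SHARE_THRESHOLD
        )


def request_fact_check(post: Post, user_id: str) -> bool:
    """Record one user's request; return True once the post is submitted."""
    post.requesters.add(user_id)
    if post.needs_review():
        post.submitted = True
    return post.submitted


def apply_assessment(post: Post, checker: str, rating: int) -> List[str]:
    """Attach the label and return the viewers who should be notified."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    post.checker = checker
    post.rating = rating
    # Assumed cutoff: a rating of 2 or lower counts as mostly misleading or false.
    return sorted(post.viewers) if rating <= 2 else []
```

Keeping the list of viewers alongside the post is what makes the after-the-fact notification possible; a platform that does not record who saw an item cannot tell those users it was later rated false.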

With this system in place, users would be less likely to believe, and share, an item that’s been labeled false. It might also reduce the incentive for bloggers, media sites, or politician pages to publish misinformation. A publisher whose name was routinely associated with a “false” label on stories might lose any trust readers had in it. And since the fact-check label is interactive, it might encourage users to click through and read opposing viewpoints on a given subject.

Facebook has developed a labeling system for posts, and it does the right thing by relying on third-party fact checkers and the International Fact-Checking Network industry group. But its current system is lacking in three ways. It applies fact checks only to news stories, leaving misinformation in political ads and posts by politicians unchecked and unlabeled. Its rating system is binary; news stories are either “disputed” or not, while many news stories fall within the gray area between completely true and completely false. And Facebook’s system has no way of informing users that they read a story that later was proved false.

Fighting deepfake video

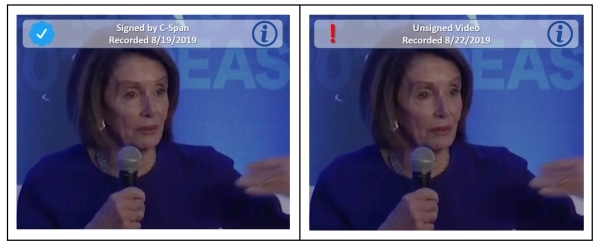

In the era of deepfakes and “cheap fakes,” we can no longer trust videos or images to reflect reality. Detecting and quickly deleting fakes on a social network is a major technical challenge for tech companies. A more workable solution might be to develop a content authentication system that could assure users that a video or image is genuine.

Such a system would depend on the creators of images or video to “sign” their content with a unique cryptographic hash to prevent unauthorized alteration. If the system detects a valid hash, it would label the video “signed,” which would assure users of its authenticity. If the system detects that the hash isn’t present or no longer matches the content, it would label the video “unsigned,” which might signal to the user to watch with somewhat more skepticism.
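The sign-and-check idea can be illustrated with a short Python sketch. It is a simplification, not a description of any deployed system: it uses a keyed hash (HMAC-SHA256) from the standard library as a stand-in, whereas a real system would more likely use public-key signatures so that a platform could verify content without holding the creator's secret. The function names and the key handling are hypothetical.

```python
import hashlib
import hmac
from typing import Optional


def sign_content(content: bytes, creator_key: bytes) -> str:
    """Return a hex signature that binds the content to the creator's key."""
    return hmac.new(creator_key, content, hashlib.sha256).hexdigest()


def label_content(content: bytes, signature: Optional[str], creator_key: bytes) -> str:
    """Label media 'signed' only if a signature is present and still matches."""
    if signature is None:
        return "unsigned"  # nothing attached to the media
    expected = sign_content(content, creator_key)
    # compare_digest avoids leaking information through comparison timing
    return "signed" if hmac.compare_digest(expected, signature) else "unsigned"


# Usage: any change to the bytes after signing flips the label.
key = b"hypothetical-creator-key"
video = b"original video bytes"
sig = sign_content(video, key)
print(label_content(video, sig, key))
print(label_content(video + b" edited", sig, key))
```

Because the signature is computed over the content itself, altering even one byte of the video makes the check fail, which is the property the "signed" label relies on.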

Traditional media outlets such as CNN, MSNBC, and Fox News are the most likely to embrace such a system. It would benefit viewers and build trust in their content. YouTube, Facebook, Twitter, and others might then be forced by competitive pressures to follow.

Account verification for everyone

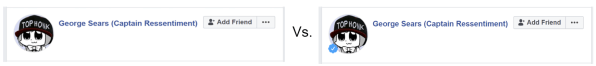

Knowing if you are communicating with a real person, a bot, or an anonymous user is crucial to how we treat information and absorb it. So social media platforms should give every individual the option to verify their identity. Platforms should then always make a clear and visible distinction between verified and unverified identities.

Users could have as many unverified identities as they like, but they would be limited to having only one verified account on a platform.
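As a rough illustration of the one-verified-account rule, here is a minimal Python sketch. It assumes the platform has already confirmed a real-world identity and reduced it to a stable `person_id`; that identifier, the class, and its method names are hypothetical.

```python
from typing import Dict


class VerificationRegistry:
    """Tracks which account, if any, is the verified one for each real person."""

    def __init__(self) -> None:
        self._account_by_person: Dict[str, str] = {}
        self._person_by_account: Dict[str, str] = {}

    def verify(self, person_id: str, account: str) -> bool:
        """Verify `account` for `person_id`; refuse a second verified account."""
        if self._account_by_person.get(person_id, account) != account:
            return False  # this person already has a different verified account
        if self._person_by_account.get(account, person_id) != person_id:
            return False  # this account is already verified by someone else
        self._account_by_person[person_id] = account
        self._person_by_account[account] = person_id
        return True

    def badge(self, account: str) -> str:
        """Label shown next to the account name."""
        return "verified" if account in self._person_by_account else "unverified"
```

Unverified accounts never enter the registry, so a user can keep as many of them as they like; they simply display the "unverified" label.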

Today, we don’t know if posts by George Sears are coming from a real person or not. Once George Sears verifies his account, his name would always be presented with a verification badge.

Twitter “paused” account verification for public figures in 2018. Facebook continues to offer verified pages and profiles for politicians, entertainers, and journalists. But there is absolutely no reason not to offer verification for all users. The marginal costs of verifying an identity are zero—it can be done by software. So why limit it?

The limit of one verified account per person is crucial to keeping online communication credible. Tying our online identity to our real identity means that online lying, profanity, and harassment are all linked back to our real identity and reputation. Users shouldn’t be able to get a new identity after they trash the reputation of a previous one.

At the same time, people should be allowed to have multiple anonymous, fictional, or non-verified identities. Labeling those differently lets readers decide how to treat the information coming from those accounts. The social media platform wouldn’t have to remove any accounts, so there would be little financial impact.

Establishing trust in organizations

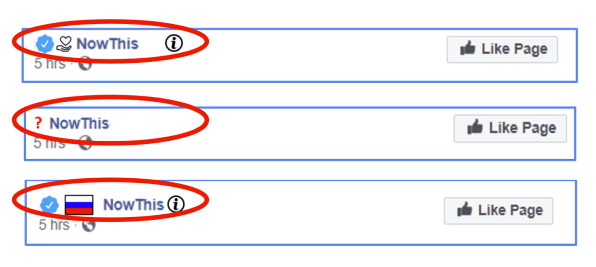

Reputation matters to organizations, just as it does to individuals.

Social media platforms should give every corporation or non-profit the option to verify its corporate details by registering and providing basic information about the organization. Any posts by those groups would then include the group’s name and indicators for group type and country of origin.

The platforms could find clear and visible ways of differentiating between content from verified organizations versus unverified ones. Like this:

[Image: Ram Fish]

This basic information helps consumers decide how much credibility they should assign to posts from a group or page.

Platforms should also include a trust indicator adjacent to the content, indicating the platform’s internal assessment—based on AI, user feedback, or the organization’s history—of the organization’s reputation. Social networks may be able to differentiate themselves from other platforms based on the quality of their trust indicator.

We’ve prized the internet for its freedom, and freedom from regulation, but social networks have proved that in a wild west atmosphere, where actions are divorced from identities, the poorer aspects of our character can come out. And yet blocking speech is inherently contradictory to the U.S.’s liberal interpretation of free speech as expressed in the First Amendment.

This has led to our social media platforms restricting only the most extreme content. Others have suggested that a better solution is to limit the “amplification” of a piece of harmful content so that fewer people see it. This might be better because there is no “right” to having one’s speech promoted. But the amplification algorithms are a complicated, proprietary technology and therefore not easily regulated. Both approaches, blocking and limiting amplification, are practically non-starters when it comes to moderating content that falls in the gray area between harmless and harmful.

That’s why social networks must begin building their own trust and verification systems. Implementing the four approaches described above would be a very good start.

Ram Fish is the founder and CEO of the telehealth company 19Labs. He previously led the iPod group at Apple, as well as Samsung’s effort to build the Simband clinical health wearable.
