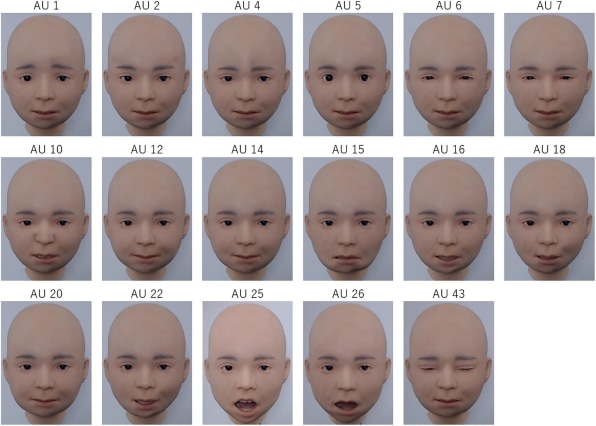

Test your emotional intelligence by trying to identify what this android face is feeling

Artificial intelligence may have achieved superhuman feats—it can drive a video game race car with surgical precision, or analyze every possible opening to a chess game in mere seconds—but one feat that still eludes it is just being a normal human.

It’s a deceptively simple task, and nowhere has AI’s failure thus far been more obvious than in the realm of human emotion. Scientists and artists alike have long pondered whether a robot could ever think or feel like a person. In A.I., Steven Spielberg’s sprawling 2001 sci-fi epic about an android child programmed to love, a stunned crowd rejects the claim that the boy is a robot when he pleads for his life—fear and desperation evident in his petrified expression. Mecha don’t cry, they insist.

But this mecha might.

Researchers from the RIKEN Guardian Robot Project in Japan say they’ve created an android child, which they’ve named Nikola, that is capable of successfully conveying six basic emotions. It’s the first time the quality of android-expressed emotion has been tested and confirmed for all six.

Nikola’s face contains 29 pneumatic actuators that control a network of artificial muscles, allowing it to approximate the twists and tugs of the 43-or-so real muscles in the human face. Another six actuators move the head and eyeballs. Movement is powered by air pressure, keeping the motions smooth and silent—more like a human, less like a clanking machine.

But most crucially, Nikola was trained to mimic expressions using past research on distinctive quirks characteristic of particular emotions—a raise of the eyebrow, a stretch of the cheek, a drop of the jaw, a curl of the mouth, for example.

Below is a result of the work, published in the journal Frontiers in Psychology. The 17 prototypes represent six emotions in differing states of intensity—from slight to extreme.

Can you identify them?

According to RIKEN’s study, everyday people could recognize these emotions, “albeit to varying accuracies.”

(For those still guessing: The answer key is here.)

The team chalks up the varying accuracies to Nikola’s silicone skin, which is less elastic than human skin and thus cannot form wrinkles well—which is pivotal when you consider that wrinkling the nose, for example, is a key indicator of emotions such as disgust (clue for the above!).

However, there might be more to it. The human face is a map of complexity, and novelists could write reams about the nuance etched into the folds of one’s countenance. There’s a certain subtlety in a human face, a flash in the eye that reveals anger, annoyance, or amusement, a je ne sais quoi that we’ve come to understand. But subtlety in an android translates into more of a blank slate, which, in this case, might be even more puzzling.

Regardless, RIKEN, which was previously responsible for a teddy-bear-shaped robot nurse, is forging ahead. The next step is to build Nikola a body (he’s currently just a head). The researchers hope that one day, he might become an empathetic robot that helps people who live alone. “Androids that can emotionally communicate with us will be useful in a wide range of real-life situations, such as caring for older people, and can promote human wellbeing,” says the study’s lead author, Wataru Sato.
