“The Impossible Statue” is an impressive 5-foot, half-a-ton stainless steel figure now standing at the Tekniska Museet, the Swedish National Museum of Science and Technology in Stockholm. It’s a statue not made by humans, but by a generative artificial intelligence trained in the styles of five of the greatest sculptors of all time: Michelangelo, Auguste Rodin, Käthe Kollwitz, Takamura Kotaro, and Augusta Savage.

As its name suggests, making the statue was a complex process that required building new AI models, then manufacturing and assembling the hulking piece of steel. The project was led by Sandvik, the Swedish engineering company that made the statue to showcase its CNC manufacturing capabilities, together with Richard Luciani, a computer scientist at artificial intelligence consultancy The AI Framework who created the AI models and workflow.

In an email interview, Sofia Sirvell, Sandvik’s Group Chief Digital Officer, tells me that the statue was designed by training multiple AI models on the work of five of the world’s greatest and most renowned sculptors, balancing some of their best-known attributes: “We incorporated the dynamic off-balance poses of Michelangelo and the musculature and reflectiveness of Rodin. We also drew upon the expressionist feeling of Kollwitz, and Kotaro’s focus on momentum and mass. This collaboration was completed with influences from Savage, drawing on the defiance reflected in her figures.”

Making a statue that could represent each artist required a 3D model. But the very idea of using an AI to imagine a complex 3D object was a seemingly impossible challenge when Sandvik’s team started this project in August 2022. While some generative AIs available today can create crude 3D models, Sandvik’s team had to start with 2D generative AI tools like Stable Diffusion, DALL-E, and Midjourney, and then rely on additional AI tools to turn the 2D images into 3D models ready for manufacturing with computer-controlled machinery.

They started the process by training a Stable Diffusion (SD) model on a relatively small data set of photos of statues generated with a 3D program. This step, according to Sirvell, was to ensure SD could reliably output images that were consistent with each other.

However, this model didn’t have knowledge of the artists’ styles they wanted to infuse into the statue. It needed additional training, so they turned to Midjourney and DALL-E to synthesize additional images that showed poses and themes from the five masters. These images were then fed to the Stable Diffusion model. “Producing coherent and final results in the first versions of generative AI is a difficult task, especially when trying to balance adversarial art styles like realism and expressionism,” Sirvell says. “That’s why we used more than one tool: each has a different strength.”

While DALL-E has a deep grasp of semantic and syntactic content and understands language very well, Midjourney’s strength is in interpreting artistic style and more subjective attributes like ambiance and emotion. Meanwhile, Stable Diffusion excels at producing high-quality images, Sirvell says. Together, these tools could create an “impossible” statue.

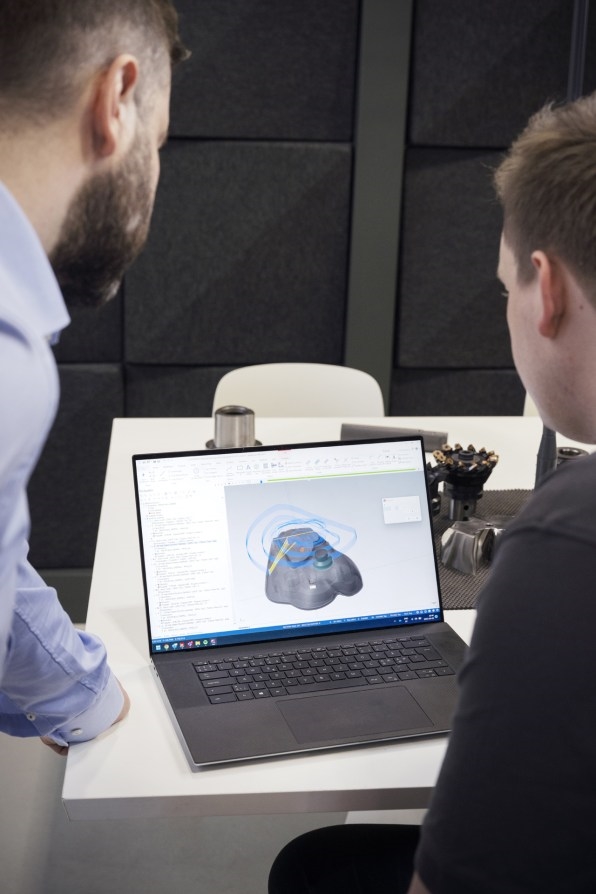

The team repeatedly generated 2D images until they found an output that adequately represented the artistic traits they were looking for. From there, the 2D image had to be translated into a 3D model—a point cloud and subsequent solid model that could be manufactured.

Sandvik customized AI tools like the MiDaS depth estimator, an AI model that estimates depth from a single flat (monocular) image, to get to the final 3D form. The initial 3D model of the body was then refined with human-pose estimators, which further interpreted the position of the flat human figure. Finally, to generate the fabric that flowed from the statue’s body, Sandvik applied AI algorithms typically used in video games to achieve the folds and wrinkles needed for it to look realistic.
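To give a rough sense of how a depth estimate becomes a point cloud, here is a minimal sketch in Python. It is not Sandvik's pipeline. It assumes a depth map that is already expressed in consistent distance units (depth estimators often output relative values that need rescaling first) and a simple pinhole camera; the function name `depth_to_point_cloud`, the focal length, and the toy data are all hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(depth: List[List[float]], focal_px: float) -> List[Point]:
    """Back-project a depth map into 3D points with a pinhole camera model.

    Assumptions (not from the article): depth values are distances along
    the camera axis, and the optical center is the middle of the image.
    """
    height = len(depth)
    width = len(depth[0])
    cx = (width - 1) / 2.0  # assumed principal point, x
    cy = (height - 1) / 2.0  # assumed principal point, y

    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # treat non-positive depth as "no surface here"
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


# Toy 3x3 depth map: a small bump in front of a flat cross-shaped surface.
toy_depth = [
    [0.0, 2.0, 0.0],
    [2.0, 1.5, 2.0],
    [0.0, 2.0, 0.0],
]
cloud = depth_to_point_cloud(toy_depth, focal_px=1.0)
```

Each valid pixel becomes one point, so a single image only yields the surface facing the camera. That is one reason further steps such as pose estimation were needed to arrive at a full figure.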

At the time “The Impossible Statue” was designed, the team had to cobble together the tools to make it happen. Not even a year later, several generators exist that are capable of doing this process on their own. “Many of these models have improved tremendously since the project started, but even today, creating an equivalent 3D mesh would require multiple custom models and is still nontrivial,” Sirvell explains.

With the final 3D point cloud model in hand, they needed to bring it into the real world. The company wanted to make the statue from stainless steel to showcase its manufacturing capabilities, which proved to be another challenge given the sculpture’s size and complexity. These features forced Sandvik to use multiple blocks of material, which would be later assembled into a single figure.

To create the final manufacturing model, the team used Mastercam computer-aided manufacturing software, which produced a model with more than nine million polygons and complex detailing. To give you an idea of how complex this is, a typical character in a well-produced video game is formed by anywhere from 30,000 to 60,000 polygons. In a movie, a generated figure can require a million or more polygons for highly detailed close-ups. For manufacturing, the required number of polygons skyrockets because a sculpture exists in the physical world and requires much more fine-grained detail.

Before starting the actual machining, the company used its own manufacturing simulation software to make a digital twin, a step designed to avoid mistakes in the real world that would force them to scrap any part. The program recommended building the statue from 17 separate pieces, machined with 40 million lines of G-code, the instructions that guide the machines as they turn, mill, and drill the steel blocks until the parts are ready to assemble seamlessly into their final form.
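For readers who have never seen G-code, the sketch below generates a tiny program for one back-and-forth milling pass over a flat rectangle. It is purely illustrative and not taken from Sandvik's toolpaths: the function name `raster_pass`, the dimensions, the spindle speed, and the feed rate are made-up values. The commands themselves (G21 for millimeters, G90 for absolute coordinates, G0 for rapid moves, G1 for cutting moves, M3/M5 to start and stop the spindle, M30 to end the program) are common conventions, though real machines vary by controller.

```python
from typing import List


def raster_pass(width_mm: float, depth_mm: float, stepover_mm: float,
                cut_z_mm: float, feed_mm_min: float) -> List[str]:
    """Return G-code lines for one zigzag pass over a rectangle.

    All parameter values are hypothetical; this is a teaching sketch,
    not a program that should be run on a real machine.
    """
    lines = [
        "G21",                # units: millimeters
        "G90",                # absolute positioning
        "G0 Z5.000",          # rapid move to a safe height
        "G0 X0.000 Y0.000",   # rapid move to the start corner
        "M3 S8000",           # spindle on (speed is an assumed value)
        f"G1 Z{cut_z_mm:.3f} F{feed_mm_min:.0f}",  # plunge to cutting depth
    ]
    y = 0.0
    going_right = True
    eps = 1e-9  # guard against floating-point drift in the loop bound
    while y <= depth_mm + eps:
        x_target = width_mm if going_right else 0.0
        lines.append(f"G1 X{x_target:.3f} Y{y:.3f}")  # cut across the row
        y += stepover_mm
        if y <= depth_mm + eps:
            lines.append(f"G1 Y{y:.3f}")  # step over to the next row
        going_right = not going_right
    lines += ["G0 Z5.000", "M5", "M30"]  # retract, spindle off, end program
    return lines


program = raster_pass(width_mm=20.0, depth_mm=10.0, stepover_mm=5.0,
                      cut_z_mm=-1.0, feed_mm_min=600.0)
print("\n".join(program))
```

Even this toy pass over a 20 by 10 millimeter rectangle takes 14 lines. Carving millions of polygons' worth of curved surface with fine stepovers is how a program count reaches the tens of millions.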

The company says that this entire process reduced the amount of steel needed by half. Sandvik also claims that the finished work, as measured by its laser metrology tools and software, deviates from the original 3D model by only 0.0012 inches, which “makes intersections between the different parts almost invisible to the naked eye.”
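To put that figure in metric terms, and to show the kind of comparison a metrology check boils down to, here is a small sketch. The unit conversion is exact; everything else is an assumption for illustration. The sample coordinates are invented, and real inspection software compares scanned points against the model's surface rather than against pre-paired points as done here.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

INCH_TO_MM = 25.4
tolerance_mm = 0.0012 * INCH_TO_MM  # the article's figure, about 0.03 mm


def max_deviation(nominal: List[Point], measured: List[Point]) -> float:
    """Largest distance between paired nominal and measured points (mm)."""
    return max(math.dist(p, q) for p, q in zip(nominal, measured))


# Hypothetical sample points, in millimeters.
nominal = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 5.0)]
measured = [(0.0, 0.0, 0.01), (10.02, 0.0, 0.0), (10.0, 10.0, 5.0)]

worst = max_deviation(nominal, measured)
within_tolerance = worst <= tolerance_mm
print(f"tolerance: {tolerance_mm:.5f} mm, within tolerance: {within_tolerance}")
```

A deviation of roughly 0.03 millimeters is thinner than a typical sheet of office paper, which helps explain why the seams are so hard to spot.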

It certainly sounds like an impressive manufacturing feat. But what really matters to me is how it all came together from conception to completion. The way an AI-based creation process can be guided by humans and work with machines at different levels to create something tangible, not just an image, is a brilliant glimpse of what these technologies may bring us, like NASA’s AI-generated, alien-looking spacecraft parts we wrote about some months ago. A bright future awaits, if we can manage to stay on the right course.
