The Next Big Tech Revolution Will Be In Your Ear

“I wish I could touch you,” Theodore says, lying in bed. He’s met with silence. Rejection. But then she speaks up, tentatively. “How would you touch me?”

It’s a famously poignant scene from the film Her, as the character Theodore is about to make vocal love to an artificial intelligence residing in his ear. But according to half a dozen experts I interviewed, ranging from industrial designer Gadi Amit to the usability guru Don Norman, in-ear assistants aren’t science fiction. They’re an imminent reality.

In fact, a striking number of discreet, wireless earbuds enabling just this idea are coming to market now. Sony recently released its first in-ear assistant, the Xperia Ear. Intel showed off a similar proof-of-concept last year. The talking, bio-monitoring Bragi Dash will be reaching early Kickstarter backers soon, while fellow startup Here has raised $17 million to compete in the smart earbud space. And then there’s Apple: Reportedly, the company is removing the headphone jack in future iPhones and replacing it with a pair of wireless Beats. “People don’t know how close we are to Her,” says Mark Stephen Meadows, founder of the conversational interface firm Botanic.io.

But to reach the husky “pherotones” of Scarlett Johansson, we have considerable cultural, ergonomic, and technological design problems to solve first.

Voice Control’s iPhone Moment

Thanks in part to Amazon’s success with voice control (the company released two new versions of Echo last week), talking to a computer in your home is finally feeling downright domestic. But while Amazon may own the mindshare now, according to a study by MindMeld, only 4% of all smartphone users have used Alexa. Meanwhile, 62% of this market has tried mobile-centric voice control AIs such as Siri, Google Now, and Cortana. That’s why Echo’s early success in this space could quickly be eclipsed by a new wave of personal devices from Sony, Apple, and a handful of startups, unless Amazon finds a way to sneak into your ear, too.

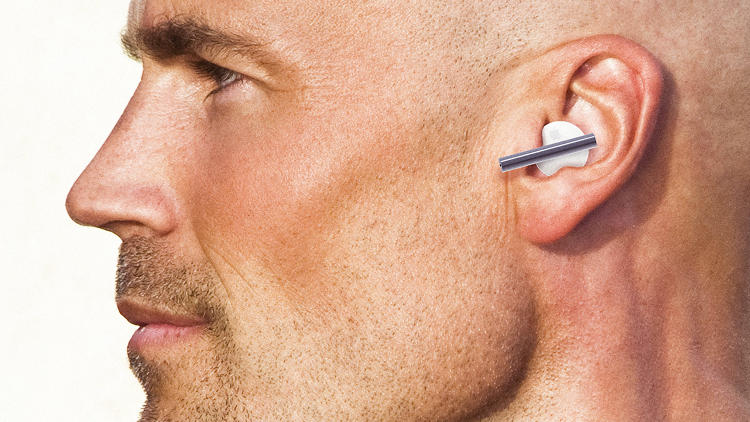

The product category is new: an in-ear assistant that can hear you and respond with an intimate whisper. It lets Siri or Alexa curl up next to your eardrum. It’s remarkable what experts from across the industry think this technology could do within just a few years. Imagine a personal assistant that takes notes about your conversations, a helpful researcher who automatically checks IMDb for that actress’s name you can’t remember, a companion who listens to your problems and even suggests psychiatric treatment, silently consulting the collective knowledge of experts in the field.

The form factor, a discreet, wireless speaker and microphone that lives in your ear, sounds sci-fi, but it’s hitting markets now. “I think the in-ear trend is the trend that could be the one for [voice] to have that ‘iPhone moment,’” says Jason Mars, assistant professor at the University of Michigan and codirector of Clarity Lab. “With [Amazon] Echo, there are some really interesting ideas in that you can speak to your house. Now, with in-ear technology, you can imagine the assistant is always connected to you.”

A New Level Of Intimacy . . . But With Whom?

The inherent intimacy of these devices will dictate how and where we use them. Anyone walking by can see what’s on your computer screen. Even our phones aren’t entirely private. But even if an AI doesn’t know your deepest secrets, it’s still in your ear, the equivalent of someone holding their lips just inches from your ear.

“With the Apple Watch, I’m addressing a computer. I’m talking to a thing on my wrist. While it’s got a Dick Tracy-like schtick to it, it’s still a very explicit invocation of a function,” says Mark Rolston, former CCO of Frog and founder of Argodesign. “Whereas talking to myself, and having a ghost, angel, or devil on my shoulder, is a much more, I don’t know, it has deeper psychological implications, the idea that there’s someone else in my head.”

Rolston suggests that the nature of this personal interface will change your relationship to AI. You’ll naturally rely on it for more secretive things: while you may not want your Apple Watch reminding you it’s time to take your birth control, a voice that only you can hear telling you the same information becomes perfectly acceptable. And slowly, any task that would be rude or embarrassing to Google in front of someone else on your phone becomes invisibly useful when handled by an AI in your ear.

“Imagine I’m listening to you with my right ear, and Siri is coaching me in my left,” he says. “And I’m conducting this kick-ass interview because I have a computer feeding me all kinds of parallel questions and ideas.”

At the same time, having a seemingly all-knowing voice whispering in your ear will make it very easy to set unrealistic expectations for that voice’s functional capabilities, and that poses a problem for designers. In everyday life, we constantly set realistic expectations for the people around us based on context. We don’t, for instance, ask our dry cleaner to calculate the 12.98% APR on our credit card, or our accountant to tell us a bedtime story. It can be harder to know what a reasonable expectation is for a technology as nascent as in-ear assistants, which means users may treat these platforms as omniscient gods that understand everything in all contexts rather than as single pieces of software, and be sorely disappointed.

That disparity, between what an AI assistant can do for us and what we expect it to do for us, is already a problem for existing AI technology such as Siri. “If you go too fast too soon, there will be too many corner cases that aren’t working,” says Dan Eisenhardt, GM of the Headworn Division for Intel’s New Devices Group. “Like Siri, I keep giving Siri a chance, but it’s the one or two times she doesn’t work a day that I get upset . . . so I don’t use Siri.”

At Intel, Eisenhardt is solving this problem by developing audio-based wearables keyed to more specific contexts. During CES, Intel debuted a collaboration with Oakley called Radar, a combination of glasses and earbuds that allows runners and cyclists to ask questions such as “How far have I run?” or “What’s my heart rate?” Because this system knows your limited context, it can be specialized to understand your likely topic of discussion. This raises overall accuracy, and it allows for some small moments of magic: if you ask about your cadence (or running speed) and then follow up later with “How about now?” the system will understand that you’re still thinking about cadence.
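To make the “How about now?” follow-up concrete, here is a minimal Python sketch of a context-limited assistant. It is an illustration only, not Intel’s or Oakley’s implementation: the `WorkoutAssistant` class, its `sensors` mapping, and the keyword matching are all hypothetical, and a real product would use speech recognition and far richer language understanding.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class WorkoutAssistant:
    """Toy dialogue handler restricted to a single context: a workout.

    Hypothetical example; the names here are assumptions for illustration.
    """
    # Maps a metric keyword to a function that reads its current value.
    sensors: Dict[str, Callable[[], str]]
    # The metric the user most recently asked about, if any.
    last_metric: Optional[str] = None

    def ask(self, utterance: str) -> str:
        text = utterance.lower()
        # First, look for an explicitly named metric.
        for metric, read in self.sensors.items():
            if metric in text:
                self.last_metric = metric
                return f"{metric}: {read()}"
        # No metric named: treat "how about now?" as a follow-up
        # about whatever metric was discussed last.
        if "now" in text and self.last_metric is not None:
            return f"{self.last_metric}: {self.sensors[self.last_metric]()}"
        # Anything else is outside this assistant's narrow context.
        return "Sorry, I can only answer questions about this workout."


if __name__ == "__main__":
    cadence_readings = iter(["172 steps/min", "180 steps/min"])
    assistant = WorkoutAssistant(sensors={
        "cadence": lambda: next(cadence_readings),
        "heart rate": lambda: "148 bpm",
    })
    print(assistant.ask("What's my cadence?"))  # cadence: 172 steps/min
    print(assistant.ask("How about now?"))      # cadence: 180 steps/min
```

Because the assistant only has to recognize a few workout metrics, a small vocabulary and one remembered topic are enough to make the follow-up feel natural, which is the trade-off Eisenhardt describes.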

Another unknown about these in-ear assistants? Whether it will be a single persona talking to us all the time, or whether individual companies will develop their own voice-based personalities. So far, third parties have been eager to adopt Amazon’s Alexa to control their apps or products. But expect this trend to change as these companies jostle to create their own voice identities.

“I can order Domino’s pizza with Alexa. I can order an Uber. But these are all brands that spend millions of dollars establishing their voice as a brand,” Rolston says. “Now we’re getting to a place where we can do rich things with hundreds to thousands of brands in the world, but those are going to be black boxed in Siri and Alexa. And they’re not good representatives of the Pizza Pizza pizza parlor down the street. Right now it’s Alexa. I want the stoned pizza guy.”

“The answer may be that the voice of each brand should quite literally be the voice of each brand,” Rolston continues. “So if I have an app inside Siri that’s a pizza company, maybe I don’t say, ‘Hey Siri.’ I say, ‘Hey Pizza Pizza.’ Because the pizza company, those guys don’t want to be Siri, they want to be them. We have to solve that.”

At Botanic, Mark Meadows has pioneered a possible solution. He calls them “avatars,” and they’re essentially a way to give the different chatbots you’re talking to distinct personalities. These personalities can be trained by experts. So, for example, psychologists could share their collective knowledge through a single virtual psychologist, or mechanics could contribute to their own collective mechanic. Meadows has actually patented a rating system for these avatars, too, because, as he explains, people are irrationally trusting of machines, and the intimacy quotient gives them incredible power.

Meadows points to a recent McDonald’s promotion that turned Happy Meal boxes into virtual reality headsets. He imagines that the fast food franchise could use such technology to create a Ronald McDonald avatar that talks directly to your child in a brand-to-consumer conversation that you, as a parent, may not even be able to monitor. “The [avatar] relationship gives brands this ability to engage so intimately and so affectively that Ronald McDonald is not just a strange clown you see on television,” he says, “but a very personal friend whispering advice into your child’s ear.”

Meadows thinks a rating system could serve as a counterbalance to that power, so he’s actually patented a “license plate” that would identify potential abusers of AI chatbots: essentially a cross between being verified on Twitter and star-rated on Amazon.

The Infrastructure Problem

For an iPhone user, Siri seems like nothing more than a software update. That’s because her real cost is invisible. It’s tucked away in North Carolina, where Apple built the world’s first $1 billion data center before deploying Siri’s AI technology. The cloud, as it turns out, is real. And it’s expensive. The hidden computing cost of these assistants helps to explain why Amazon, which runs one of the largest server networks in the world, is so dominant in voice intelligence. Even so, our current servers aren’t nearly enough to enable our Her future. In fact, they couldn’t scale even the relatively dumb Siri of today.

“If every person on the planet today wanted to interact continuously with Siri, or Cortana, all the time, we simply don’t have the scale of cycles in data centers to support that kind of load,” Mars says. “There’s a certain amount of scale that can’t be realized technologically. Much like we can’t have every cell phone continuously downloading videos in the world because cell signals can’t support it. Similarly, we don’t have the computational infrastructure in place to be constantly talking to these intelligent assistants at the scale of millions and billions of people.”

Remember when Siri launched. There were constant outages. Did Apple iron out some of the kinks? Surely. But are people using Siri less than when they first bought their new iPhones with Siri? Most likely. Mars suggests that Siri couldn’t scale today, and “with just a little bit better quality or more users, the cost goes through the roof.” The smarter AI gets, the more processing it requires, and the solution isn’t simply hooking up a few more big server farms, either. We require orders of magnitude more processing than we have now. It’s why, in his lab, Mars researches how to get 10x to 100x improvements out of the way we design servers. If someone’s phone can handle more of the load, for example, while servers deploy specialized hardware just to run singular pieces of software such as AIs, that could be possible.

So it’s unclear if it’s even possible to produce the computational firepower needed to make these assistants ubiquitous. If the infrastructure can only support a small subsection of users, how will companies choose who gets the tech first? And how much smarter will those people be than the rest of us? Mars believes these rapidly improving in-ear assistants will accelerate the server bottleneck. What happens next is anyone’s guess.

Designing In-Ear Hardware

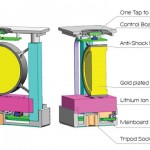

Of course, server farms are only one piece of the hardware problem. Just because smart earbuds are currently on sale doesn’t mean they’re polished for prime-time use. And Gadi Amit, the founder of Silicon Valley design firm NewDealDesign, doesn’t believe that the current in-ear hardware itself is quite as good as Sony and various startups might paint it.

For one, earbuds are notoriously difficult to fit when it comes to comfort. Consider, for example, how some people think Apple’s earbuds are fine, while others can’t stand to wear them for even a few seconds. Once designers remove the cords (which actually stabilize earbuds inside your ear with their own weight), technologies from Sony and Here offer no form of anchor to hold them in place other than your ear canal itself.

“One of the main concerns is that they fall out. They fall and will continue to fall any time you do physical activity,” Amit says. “There’s no solution to that. The solution is to get out of the ear, and wrap some kind of feature around your ear.” And once you do wrap the device around the ear, all the subtlety of the device is gone, plus you’re left with a second pain point around your cartilage.

“The comfort problem is there, and it’s going to be very much a personal preference. Some people will find it okay; some people will find it unacceptable,” he says. “It will never have 100% acceptability, especially when running. It’ll hover more around 30% or 50%.” He compares that to touch screens, which work almost all the time for almost 100% of the population.

Another issue Amit is quick to point out is audio quality. The consumer audio market already has people chasing higher quality, over-the-ear headphones. And he says that given the slow pace of change in the last 10 years, improvements in micro-audio aren’t likely in the near future. Similarly, there are limitations with microphones and voice recognition systems, which, while good, tend to hover near 90% accuracy in real-life use.

“That sounds like a lot, but it’s horrible. If you’re having a normal conversation, and 5% is meaningless, for you, it would be horrible to listen to,” Amit says. “It’s pretty good for some applications. But we’re not going anywhere with your ear that’s eliminating GUI in the next few years.”

Instead, Amit imagines the near future as a “tapestry” of interactions, of which an ear-computer or voice-control system would be only one part. While he believes that graphical user interfaces peaked in 2015, he’s doubtful that any one breakthrough, like the iPhone’s touch screen, will cannibalize all other UX from here on out. Now we have technologies that can read our hand gestures and facial emotions, we have VR headsets that can immerse us in video content, and we have haptics that can pass along physical feelings, too.

“We have five senses, and we have to use all of them to interact with smart technology,” Amit says. “And the real issue, when we’re designing these projects now, is to find the right mix, and allow people enough flexibility to adapt to their comfort level. Hybridization is the issue we have now. We have all of these technologies; how do you put the right mix together?”

As Meadows points out, when these technologies work together, they grow more syntactically accurate and emotionally aware. They can understand what we’re saying and what we’re feeling.

That may be why Apple recently made two acquisitions that you probably didn’t hear about: Emotient, mood-recognition software that can read emotion from a human’s face with split-second speed, and Faceshift, software that can record a human face and essentially use it to puppet an avatar’s. Together, these acquisitions indicate that Siri could be much more useful if she could not just hear you, but see you. And she could be that much more empathetic if you could see her, too.

The Missing Piece: Social Intuition

The biggest challenge with the rise of in-ear assistants, however (bigger than the data centers, the ergonomics, and even the potential corporate abuse of intimacy), will be all the tiny social considerations that an AI living in your ear must get right.

“Now you have assistants announcing that your favorite Italian restaurant opened, and it may very well be that you’re delighted,” says Don Norman, director of the Design Lab at UC San Diego and author of The Design of Everyday Things. “But it might be when driving or crossing a street, or when I’m finally having a deep, difficult discussion with a lover. The hardest parts to get right are the social niceties, the timing, knowing when it’s appropriate or not to provide information.”

In-ear assistants will have to juggle these intuitively social moments constantly, given that Norman believes some of their greatest potential benefits lie in their ability to make use of the 5-, 10-, or 30-second bursts in his day: those moments when he might be able to get ahead on email or check his text messages, which together could add up to a significant amount of time. But he’s also concerned about the potentially dangerous rudeness of a socially inept computer.

“I’m worried about safety. We already know people injure themselves reading their cell phone while walking. They bump into things, but at least the cell phone is under your control. You can stop whenever you want. You can force yourself,” he says. “I never read the cell phone when crossing the street. But when it’s an assistant, giving advice, recommending things to me, telling me things it might think are interesting, I don’t have control of when it happens, and it might be in a dangerous situation.”

In his lab, Norman is studying some of these subtle social boundaries through the lens of car automation. Specifically, how does a car with no driver navigate busy pedestrian intersections? “The cars have to be aggressive, or they’ll never get through a stream of pedestrians,” he says. So this requires that the cars actually be programmed for local car-pedestrian culture, which in California means the car inches forward slowly, and the humans will naturally make way. But in Asia, it means the car must push its way through a crowd far more forcefully, more or less ramming its way through. Each works, but if you were to swap these methods, the California car would sit at an intersection in Asia all day, while the Asian car would run Californians over.

So it’s difficult.

But for the doomsdayers who believe Her technology will lead us all to tune each other out, it’s worth noting that we already check our smartphones 150 times a day. If that hasn’t defeated humanity yet, it’s unlikely one new technology will bring society to its knees.

“I walk to my office, but it means I walk past lots of students, and I’m amazed that 90% of them are reading their phones as they walk across campus,” Norman says. “I try to understand what they’re doing, but mostly, they seem happy. They seem engaged. I don’t think they’re doing it because of the technology. I think it keeps them connected.”

Cover Photo: Mark Rolston and Hayes Urban, Argodesign
