The real reason there are so many female voice assistants: biased data

Voice assistants have historically been female. From Siri and Alexa to Cortana and the Google Assistant, most computerized versions of administrative assistants launched with a female voice and, in most cases, a female name.

For years, the companies behind these voice assistants have been criticized for using female names and voices, in part because their disembodied helpers act akin to household servants—a decision that plays into historical gender roles. Most egregiously, Microsoft’s Cortana was named for a barely dressed character in the video game Halo. (Perhaps the company’s engineers thought that bossing around Cortana would be a user’s idea of a good time.) Though male voices are now usually available as an option, the female ones remain the default.

There’s another oft-cited reason that these voice assistants, like their predecessors in recorded voice systems such as voicemail menus, are predominantly female. Studies have found that more people prefer listening to female voices, possibly because the experience dates back to when we were all in utero. However, this idea is contradicted by some real-world experiences: Women sometimes receive complaints about their vocal tics or are taken less seriously because of them (even when men’s voices have the same quirks).

But according to Google, these aren’t the most important reasons why it chose a female voice (and, not coincidentally, a gender-neutral name) when it launched the Google Assistant back in 2016. Google actually wanted to launch its flagship voice assistant with both a male voice and a female voice, but there was a technical reason why it couldn’t: There was a historical bias in its text-to-speech systems, which had been trained primarily on female voices.

“Because [the systems] were trained on female data, they performed typically better with female voices,” says Brant Ward, the global engineering manager for text-to-speech at Google.

While the team working on the Google Assistant’s personality was pushing for both a female and male voice, the company ultimately decided against creating a male one.

“We just weren’t confident we could get the quality at the time,” Ward says. “It took over a year to do it, and you don’t want after a year to say, ‘It’s just not good enough.’ Google really needs to deliver great quality.”

He explains that part of Google’s older text-to-speech system, which stitched pieces of audio together from recordings, used a speech recognition algorithm that would place markers in different places in sentences to teach the system where sounds and words began and ended.

“If I remember correctly, those markers weren’t as precisely placed [for a male voice],” Ward says. “I’ve worked on many systems, and it’s always been harder to get the quality on a male voice, likely because these systems, regardless of their origin, were trained on more female [data] than male data.”
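To make the role of those markers concrete, here is a toy sketch of stitching audio from marked-up recordings. It is an illustration only, not Google's system: the `Marker` type, the `cut_units` and `stitch` helpers, and the numeric "recording" are all hypothetical stand-ins, with each number representing audio that belongs to one sound.

```python
from typing import NamedTuple


class Marker(NamedTuple):
    label: str  # hypothetical sound label
    start: int  # index of the first sample of the sound
    end: int    # index one past the last sample


def cut_units(samples, markers):
    """Slice a recording into one stored unit per marker."""
    return {m.label: samples[m.start:m.end] for m in markers}


def stitch(units, sequence):
    """Concatenate stored units in the requested order."""
    out = []
    for label in sequence:
        out.extend(units[label])
    return out


# Toy recording: 1s belong to sound "a", 2s to "b", 3s to "c".
recording = [1, 1, 1, 2, 2, 2, 3, 3, 3]

# Markers placed exactly on the sound boundaries.
precise = [Marker("a", 0, 3), Marker("b", 3, 6), Marker("c", 6, 9)]
# Markers that drift by one sample, as a less accurate aligner might place them.
shifted = [Marker("a", 0, 4), Marker("b", 4, 7), Marker("c", 7, 9)]

clean = stitch(cut_units(recording, precise), ["c", "a"])
smeared = stitch(cut_units(recording, shifted), ["c", "a"])

print(clean)    # [3, 3, 3, 1, 1, 1]
print(smeared)  # [3, 3, 1, 1, 1, 2]
```

With the drifting markers, the stitched output loses part of one sound and picks up a fragment of a neighboring one, which is the kind of quality problem imprecise markers would be expected to cause.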

A hard-to-shake paradigm

Why were Google’s systems primarily trained on female voice data in the first place? Ward claims that higher-pitched voices tend to be easier to understand, another argument that’s often been used to explain why so many voice assistants are female. However, there’s no evidence that higher pitches are easier to hear; in fact, people tend to lose their ability to hear high-pitched noises as they age. An influential study from 1996 did show that people tend to understand women better than men, but it attributed this to women articulating vowel sounds more clearly, not to the pitch of their voices.

The idea that female voices are more intelligible has been embedded within the text-to-speech discipline for decades, and Ward even references it as the reason that early phone operators were predominantly women (the other reason being that women were cheap laborers since so few professions were open to them in the early 20th century). Ward calls the prevalent use of female voices in text-to-speech an established “paradigm”—one that got so deeply encoded into Google’s system that the company decided against trying to create a male voice. The reason that the text-to-speech system ended up with biased data appears to be a confluence of research, widely held perceptions, and possibly inertia in the industry.

Other text-to-speech experts say that there isn’t any technical difference between engineering female and male voices. “Personally, as a developer, I think it’s probably a bit of a biased statement,” says Johan Wouters, the director of text-to-speech technologies at Cerence, a company that builds voices for businesses and was recently spun out of Nuance, a longtime leader in voice technologies. “I haven’t seen any scientific evidence . . . We can build very high-quality voices for both genders, and in my view, the ease of development is not the main factor here.”

At Fast Company’s request, Wouters analyzed the combined libraries of Cerence and Nuance, which include more than 140 off-the-shelf voices and more than 50 custom voices. He did not find any statistically significant differences in quality between the libraries’ male and female voices, including for voices built using methods that stitch vocal recordings together, similar to the way Ward describes creating the Google Assistant’s original voice. (When asked about Wouters’s opinion, Ward, who used to work at Nuance, said that his own comments apply only to Google’s system.)

Toward a multitude of voices

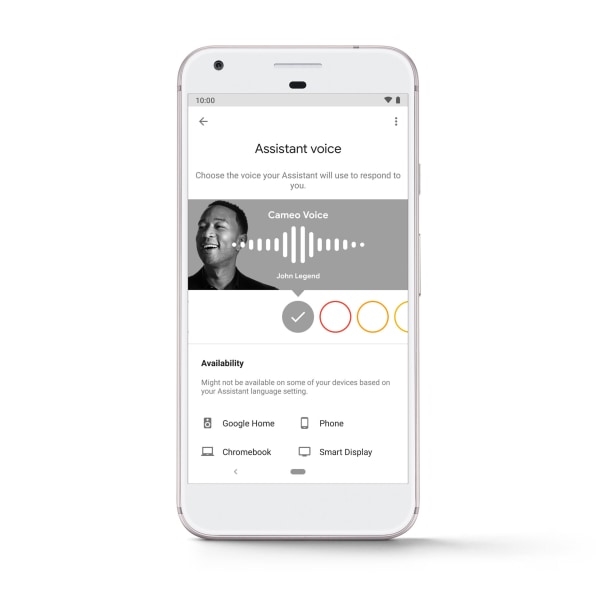

With the advent of new machine learning technology, Google’s older text-to-speech system soon became outdated. Just months after launching the Google Assistant in May 2016 with a female voice, Google’s voice researchers joined with Alphabet’s AI lab DeepMind to create a new kind of algorithm that not only reduced the number of voice recordings needed but could also generate much more realistic voices. Within a year or so, the researchers were able to use the algorithm, called WaveNet, to launch a new, more naturalistic female voice for the Google Assistant, quickly followed by a male one in October 2017. WaveNet now powers all of the Google Assistant’s voices. It’s so realistic that Google even used it to create a familiar voice: that of John Legend. It’s one thing to create a voice that sounds humanlike, but it’s another thing entirely to realistically mimic a voice that many people will recognize.

[Screenshot: Google]

Google is just getting started with WaveNet. Today, Google is announcing that it is bringing male voices to seven new countries that previously only had female ones. Additionally, Google is bringing female voices to Korea and Italy, which originally launched default male voices that were made using the WaveNet technology. (The gender of the default voice varies by country.)

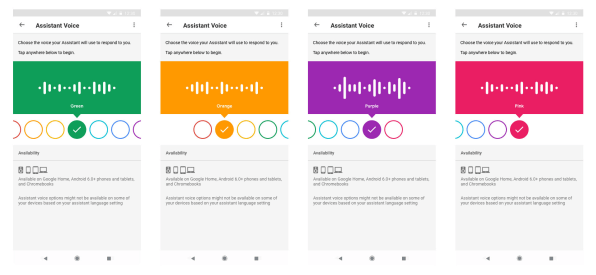

The company has also made other attempts to be more inclusive. The American version of Google Assistant currently offers a total of 11 voices, including ones with a British accent and a slight Southern drawl. But there’s a good chance that most users never switch the voice that’s loaded by default on their Android phone, Google Home speaker, or other Google Assistant-enabled device. To remedy this, Google now randomizes which of its two basic voices, one male and one female, is assigned to each new user, giving users a 50-50 chance of getting either one.
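As a rough illustration of what a 50-50 default looks like in practice, here is a minimal sketch. It assumes nothing about Google's actual implementation: the voice identifiers and the `assign_default_voice` function are hypothetical, and the fixed seed is only there to make the demonstration repeatable.

```python
import random

# Hypothetical identifiers for the two basic voices.
DEFAULT_VOICES = ("basic_voice_1", "basic_voice_2")


def assign_default_voice(rng):
    """Pick one of the two basic voices with equal probability."""
    return rng.choice(DEFAULT_VOICES)


# Simulate 10,000 new users with a seeded generator.
rng = random.Random(0)
counts = {voice: 0 for voice in DEFAULT_VOICES}
for _ in range(10_000):
    counts[assign_default_voice(rng)] += 1

print(counts)
```

Across many new users, each voice ends up as the default roughly half the time, so neither gender is privileged by user inertia.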

Looking toward the future, Ward hopes to one day be able to offer even more personalization. Imagine that instead of picking a single voice, you could mix and match different attributes or elements of your Google Assistant, such as setting it to be more professional during business hours and more casual after hours. Ward imagines something like the computer system in Interstellar, which Matthew McConaughey’s character asks to lower its humor setting after some not-so-entertaining jokes.

While Google’s text-to-speech tech has vastly improved, the story of why the Google Assistant is female holds an important lesson about the ways that gender bias, among other sorts of bias, can seep into technology. In this case, a perceived preference for female voices led to systems trained on more female data, which made those systems worse at producing male voices and, in turn, reinforced the reliance on female ones: a feedback loop.

“Looking back, it’s easier to say that’s probably why it was,” says Google’s Ward. “At the time, you’re just trying to advance the work, and it was a data-driven effort. You’re only as good as your data.”

Fast Company
