The Unreal, Bleeding-Edge Tech That’s Helping Doctors Make The Cut

I couldn’t quite decide whether it was his agonized expression or the detailed tattoos covering his arms that bewildered me the most, but the full-size dummy in a hospital gown wasn’t there to freak people out. He was there to help improve health care for U.S. veterans, part of the technology arsenal of the Veterans Health Administration’s high-tech SimLearn facility, housed in an impressive building on the outskirts of Orlando.

Medical institutions invest so heavily in anatomically correct dummies like these—not to mention elaborate dissections of actual cadavers—because being able to act out scenarios in a safe learning environment can drastically enhance learning and improve patient care. For surgeons, simulation is an essential part of training.

But the equipment is costly, and clunky, and only a few medical students can work with it at a time.

“Right now, the way they’re doing it is people have these devices in their trunks, you can only fit like one in and they drive around with hundreds of dollars in disposable, simulated bones to allow people to practice one procedure once,” Dr. Justin Barad, an orthopedic surgeon and entrepreneur, told an audience at Health 2.0 last year. That same year he cofounded Palo Alto-based Osso VR, one of a number of companies turning technologies typically thought of as vehicles for fantasy into tools that, their makers say, can teach surgery better, faster, and ultimately more cheaply.

“I’ve done surgeries where I just sat there reading the instruction manual like we were putting together Ikea furniture because people don’t have a training option that’s something like [virtual reality],” he said. The technology could “increase patient safety, decrease complications, and increase the learning curve for complex medical devices.”

As countries around the world struggle to find health care provision models that balance the needs of aging populations with shrinking budgets—and as startups jostle to get into operating rooms—the virtual world is already being used to impact the health care of real people.

“I could be in Cleveland and teach a group of students in, say, London with all of us able to see one another and the holograms simultaneously,” says Professor Mark Griswold, who leads Case Western Reserve University’s work with Microsoft’s HoloLens, augmented reality glasses currently available only as a $3,000 developer edition. “The professor can see how students are interacting with the hologram in real time, and respond immediately with additional explanations or encouragement as needed.”

Devices like the HoloLens and consumer VR helmets like Samsung’s Gear VR and Facebook’s Oculus have received the most attention as vehicles for escape, but their real-world applications are growing fastest in the workplace. IDC, which pegs current industry revenues at around $5.6 billion, says that over the next five years much of the growth in VR and AR headset shipments will come from industrial uses, which it expects to grow 80% a year, versus 50% a year for consumer uses like video games, films, porn, and other entertainment. Current HoloLens customers outside health care, for instance, include Lowe’s, Volvo, and ThyssenKrupp, whose technicians use the glasses to operate on ailing elevators.

In health care, revenues for VR and AR technologies reached nearly a billion dollars in 2016, according to Kalorama Research. Some estimate that by 2025, that number could reach over $5 billion, thanks to uses in areas like telemedicine, pain relief, robotic surgery, and, increasingly, medical simulations.

Medical instructors say a VR helmet, coupled with haptic-feedback “syringes,” can help a surgeon practice a complex operation in detail before carrying it out—or help a doctor with limited access to education locally get better medical training, improving patient experience and outcomes. A pair of augmented reality goggles can put an animated “patient” in front of students, making the expensive dummies obsolete altogether. And as medical operations become more sophisticated and high-tech, computer glasses could help get practitioners up to speed faster.

Health care experts have proposed new technologies like these as one of several possible solutions to what some have called a crisis in medicine: The United States could be facing a shortfall of between 48,000 and 100,000 physicians by 2030, according to the Association of American Medical Colleges. Since it takes between five and 10 years on average to train a new physician, medical industry experts say the U.S. urgently needs more people to enter that training pipeline now, particularly in highly specialized fields. The greatest shortfall, on a percentage basis, will be among surgeons, especially those who treat cancer and other diseases more common among older people.

Alleviating that shortfall and upgrading decades-old simulations with mixed reality could also bring cost savings that can’t come soon enough. In the U.S., the cost of health care continues to surge, and not only because of the price of drugs: Open-heart surgery costs 70% more than in the next most expensive country, and an appendectomy more than twice as much. A day in the hospital costs about five times more in the U.S. than in other developed countries.

Better Learning Through Virtuality?

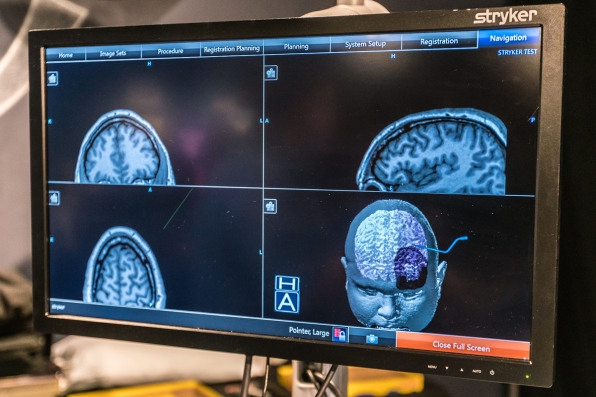

For years, surgeons have relied on 3D computer modeling to plan complex procedures down to the millimeter, so there are as few surprises as possible. The technology proved valuable for the team that separated conjoined twins Erika and Eva Sandoval at Stanford’s Lucile Packard Children’s Hospital in Palo Alto in 2013. In that case, surgeons donned 3D glasses to study digital renderings of the twins’ organs, allowing them to perform a heart valve replacement using an incision less than half the normal size. More recently, 3D modeling has merged with VR at Stanford Health Care, where an app called True 3D, developed with Mountain View-based EchoPixel, promises to improve a surgeon’s ability to visualize and plan complex procedures beforehand.

“Our biggest problem is cutting into an artery or vein that we did not expect,” says Eric Wickstrom, a professor of biochemistry and molecular biology at the medical school at Thomas Jefferson University in Philadelphia, who co-authored a 2013 study on the use of 3D models in surgery.

A clinical study published last year in the Journal of Neurosurgery looked at how surgeons rehearsed their operations using a VR-based brain modeling platform developed by the company Surgical Theater. The tool, in use at New York University, University Hospitals in Cleveland, and Mount Sinai, among others, appeared to help surgeons reduce the time it took to repair aneurysms, suggesting it may also have made the surgeries safer.

Making Virtual Bodies Feel Real And Real Bodies More Virtual

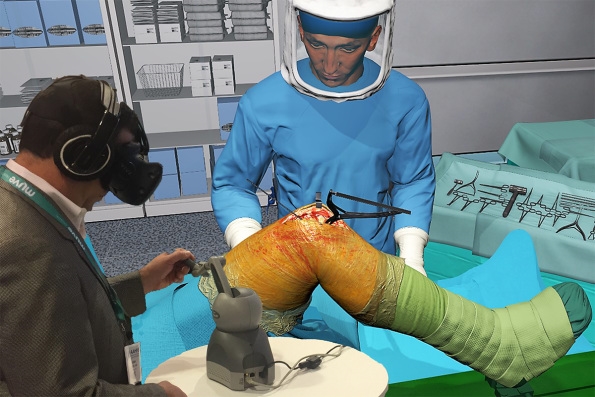

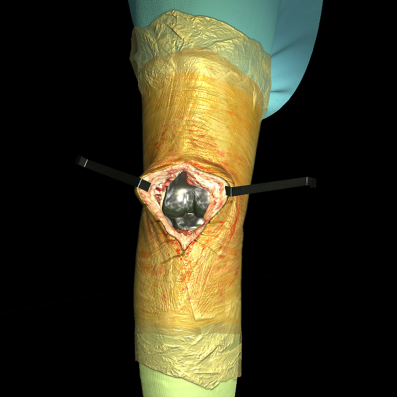

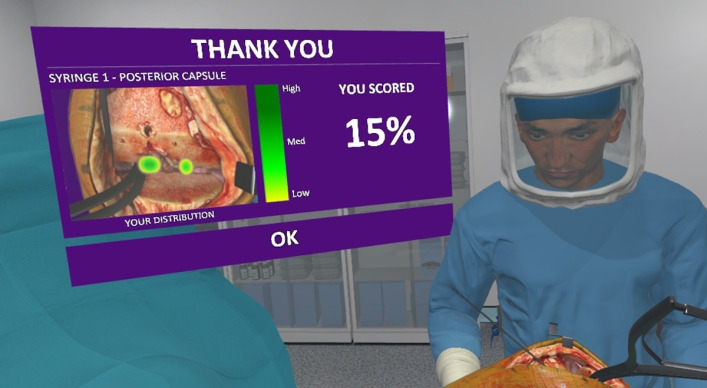

The next step will be to reproduce accurately not only the look but also the feel of a surgical procedure. HoloLens partner FundamentalVR is already working on adding haptic feedback to surgery simulations, says Richard Vincent, the company’s founder. The London-based startup is developing a tool called FeelRealVR, which it describes as a “flight simulator” for surgery. In its current version, students use a stylus in place of a syringe; the stylus provides realistic levels of pressure and resistance as they work on a hologram of, for instance, a patient’s open knee joint.

Vincent argues that tools like this can make for better learners. The greater cognitive involvement that comes from not only seeing but also interacting with the holograms triggers active “Involved Learning,” he says, a recognized teaching methodology in which students have been found to retain much more of the subject matter than with traditional approaches.

AR is also augmenting instruction during real-life surgeries. Hands-on, face-to-face lessons during actual operations are in short supply, often restricted to the few students who can fit inside an operating theater. Meanwhile, surgeons who can teach are in such high demand that experiential learning is becoming increasingly infrequent at medical schools, sometimes offered only once, to a small group.

“Surgery is very visual. You can read it in a book if you want but it’s not the same as watching it live,” says Dr. Nadine Hachach-Haram, an NHS registrar in plastic surgery at the Chelsea and Westminster Hospital. “Yet it’s physically difficult to get many medical students in the operating room at any time.”

Hachach-Haram is cofounder of a company called Proximie, which developed a way to use augmented reality to let a distant surgeon virtually place his or her “hands” or instruments onto a patient’s body. The idea is to let experienced practitioners guide operating teams on where each incision should be made and how to proceed. After being selected by the U.K. Lebanon Tech Hub Accelerator, Proximie is now rolling out a training pilot at The Royal Free Hospital, allowing 150 of its students to log in remotely and watch surgery through the application.

Mixed reality is also improving operating rooms in less obvious ways. ByDesign, a HoloLens app, helps surgeons, nurses, and technicians save precious time when configuring operating theaters. Whenever there’s a rotation, operating rooms need to be carefully reconfigured to meet each team’s very specific requirements, since even minute errors in the setup can have dangerous consequences for both patients and practitioners.

Traditionally that means multiple people moving around heavy, delicate, and expensive equipment to test various configurations. In an environment where most facilities already operate near capacity, this resource-intensive process translates into higher costs and slower delivery of care, says Andy Pierce, president of Global Endoscopy at Stryker.

With an AR headset, practitioners can visualize equipment in full-scale 3D and easily move the virtual objects around. Two surgeons hundreds of miles apart can each stand in their own room, looking at accurate holographic renditions of the same equipment and rearranging them until they’re satisfied they have found the optimal setup. That configuration can then be saved and relayed to the staff in charge of setting up the physical operating rooms.

Upgrading The Cadaver

A review of augmented reality in medical training, published last year in the journal Surgical Endoscopy, couldn’t say whether the technology would contribute to patient safety. But author Esther Barsom, a researcher in the department of surgery at the University of Amsterdam, noted that AR is “preeminently suitable” for improving training in situational awareness during operations, an area that is “lacking in medical curricula.” And, she wrote, “as training methods become more engaging and reliable, learning curves may be expected to become steeper and patients will ultimately benefit.”

Prof. Wickstrom of Thomas Jefferson University suggests that virtual simulations could also widen the recruiting pipeline: By making medical education more interactive and engaging, they could make the field more accessible and attractive to people who might previously have been put off by traditional medical learning.

The time-honored way of teaching anatomy, for instance, is to have students spend months dissecting cadavers. But like the realistic mannequins at SimLearn, cadaver dissection can cost tens of thousands of dollars. And each cadaver can be used by only a limited number of students and, naturally, only once.

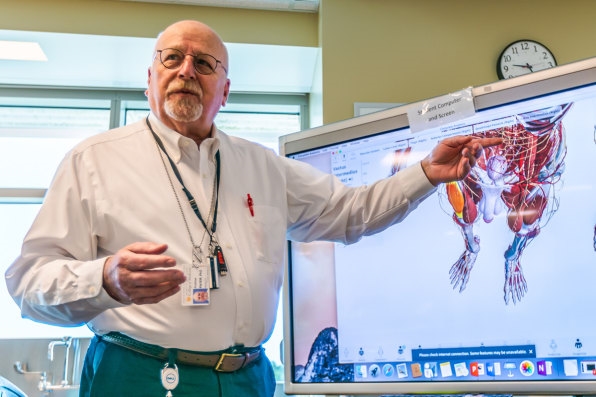

Under Prof. Griswold at Case Western Reserve, anatomy students use the HoloLens to interact with virtual patients and organs, allowing them to pull back the various layers of the human body, visualize the muscles on top of the skeleton, and understand exactly where things are located. AR means that medical students are not confined to learning the terrain of a single body, but can see accurate visualizations of particular conditions such as cancers, heart disease, or spinal injuries.

“In the fall we did a pilot test of HoloLens with medical students who already had studied the cardiothoracic region for several weeks in the cadaver lab,” Griswold says. “After one session viewing the same area of the body wearing the devices, 85% said they had learned something new.”

Just 15 minutes with the HoloLens could have saved them dozens of hours in the lab, students who participated in the pilot told Pamela Davis, dean of the school of medicine. Case Western is now in the process of developing a broader holographic anatomy curriculum. “The quicker our students learn facts like these, the more time they have to think with them,” Davis said at Microsoft’s Build conference last year. “We are teaching them to think like a doctor.”

Amid a looming shortage in surgeons and ever-complex procedures, doctors and startups say computer glasses can move simulation far beyond expensive dummies.

Case Western Reserve University [Photo: courtesy of Case Western Reserve University]

Otronicon HealthTech

Orlando VA Medical Center [Photos: Tom Atkinson at R3Digital]

Anatomy Lab UCF

Fast Company