These researchers found an easier way to teach robots housework

Unlike humans, computers have a hard time breaking a request such as “Make coffee” into multiple steps they can execute in succession. Today researchers working at MIT and the University of Toronto announced a system, called VirtualHome, which aims to teach home helper robots the components of such activities.

The researchers identified sub-tasks to describe thousands of duties in settings such as kitchens, dining rooms, and home offices. “The way we define it is by an action and an object that the action is directed to,” says MIT PhD student Xavier Puig, lead author on the research paper. “So for setting up a table, it would be: Go to cabinet, open up cabinet, grab plate, go to table, and put plate on table.”
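As a rough illustration of the action-plus-object idea Puig describes, the table-setting example could be written down as a short list of steps. This is only a sketch: the `Step` class, its field names, and the optional `destination` field are assumptions made here for illustration, not VirtualHome's actual program format.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Step:
    """One sub-task: an action and the object it is directed to (hypothetical format)."""
    action: str
    target: str
    destination: Optional[str] = None  # assumed extra field for "put X on Y"


# The "setting up a table" example from the article, one step per sub-task.
SET_TABLE: List[Step] = [
    Step("go to", "cabinet"),
    Step("open", "cabinet"),
    Step("grab", "plate"),
    Step("go to", "table"),
    Step("put", "plate", destination="table"),
]


def describe(program: List[Step]) -> List[str]:
    """Render each step back into a short plain-English instruction."""
    lines = []
    for step in program:
        text = f"{step.action} {step.target}"
        if step.destination is not None:
            text += f" on {step.destination}"
        lines.append(text)
    return lines


if __name__ == "__main__":
    for line in describe(SET_TABLE):
        print(line)
```

Writing a chore this way makes the order of steps explicit, which is the part a robot cannot infer from a request like "Make coffee" on its own.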

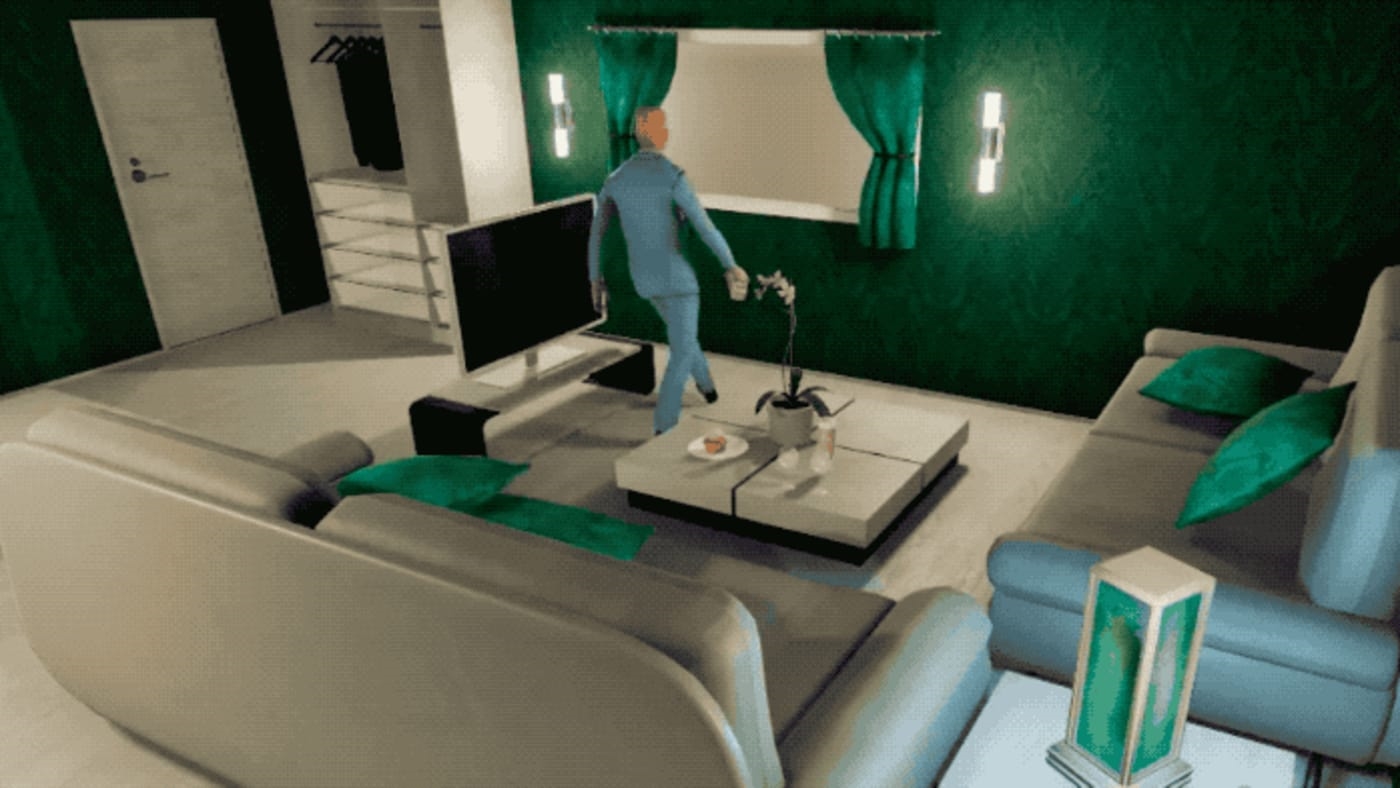

To teach robots, researchers used the Unity game engine to create well-defined instructional videos in a Sims-style environment. Danny Lange, head of AI at Unity—which was not involved in the research project—says that a meticulously labeled database of tasks and motions such as VirtualHome could provide “limitless” opportunities for training robots.

Lange adds that VirtualHome isn’t the first of its kind. A team at the Allen Institute for Artificial Intelligence is working on a similar system. It defines objects and capabilities, such as what a cup or microwave does, and ways a robot can interact with them, such as picking up or opening. Within these parameters, robots learn tasks by trial and error—in the safety of a virtual environment called Thor, also built in Unity.

Along with its other benefits, a system such as VirtualHome could ease interoperability across the robotics field, says Ross Mead, founder of robotics software company Semio, who was also not involved in the research. “These high-level scripted actions provide a level of abstraction for the actions so that they can be ported to different robot platforms with different sensing and actuation capabilities,” he says.
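To make Mead's point concrete, here is a minimal sketch of how one high-level script might be handed to two different robots, each translating the same steps into its own low-level commands. Everything in it is hypothetical: the `RobotPlatform` interface, the two robot classes, and the command strings are invented for illustration and do not come from VirtualHome or any real robot software.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

# A high-level script: (action, object) pairs, independent of any robot.
Script = List[Tuple[str, str]]


class RobotPlatform(ABC):
    """Hypothetical interface every robot would implement in its own way."""

    @abstractmethod
    def execute(self, action: str, target: str) -> str:
        """Translate one high-level step into a platform-specific command."""


class WheeledArmRobot(RobotPlatform):
    def execute(self, action: str, target: str) -> str:
        if action == "go to":
            return f"drive base to {target}"
        return f"arm: {action} {target}"


class HumanoidRobot(RobotPlatform):
    def execute(self, action: str, target: str) -> str:
        if action == "go to":
            return f"walk to {target}"
        return f"hand: {action} {target}"


def run(script: Script, robot: RobotPlatform) -> List[str]:
    """Run the same script on any platform and collect its low-level commands."""
    return [robot.execute(action, target) for action, target in script]


SCRIPT: Script = [("go to", "cabinet"), ("open", "cabinet"), ("grab", "plate")]

if __name__ == "__main__":
    for robot in (WheeledArmRobot(), HumanoidRobot()):
        print(type(robot).__name__, run(SCRIPT, robot))
```

The script itself never changes. Only the translation layer differs per robot, which is the kind of portability Mead is describing.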
