These Tesla Vets Are Taking On Tech Giants With Robo-Car Maps Made By The Crowd

In most wars, generals plan battles by gathering around maps. And historically, the victors of those battles get to redraw those maps.

An updated version of that battle is raging in the war over autonomous cars. Despite the growing ability of sensor-laden robots to recognize and react to their surroundings, many in the industry believe that self-driving cars need hyper-detailed digital maps to find their way safely.

These HD maps go way beyond smartphone navigation apps, down to 10-centimeter (four-inch) accuracy, in order to keep cars in their lanes and make turns precisely. Fortunes are being invested in mapmaking by companies like Google, TomTom, and Here Technologies, which was one of Nokia’s crown jewels before a consortium of Audi, BMW, and Daimler bought it in 2015. Intel has since taken a stake.

Into the fray come three twentysomething veterans of Tesla and iRobot with a more guerrilla approach: Their crowdsourced mapping company, lvl5, is collecting valuable mapping data from e-hail and other drivers who are already on the road. “We said, who drives a lot? Well, there are Uber drivers,” says lvl5’s 25-year-old CEO Andrew Kouri. “So let’s just pay Uber drivers to do this for us.” Lyft and truck drivers also take part. (The ambitious name is pronounced “Level 5,” the designation for a 100% autonomous vehicle.)

Lvl5 distributes payments to drivers through another app, Payver, that awards redeemable points to those willing to mount iPhones on their dashboards. Payver collects and uploads video of the road and data from phone sensors like GPS, gyroscope, and accelerometer to make 3D maps that, says lvl5, surpass the maps that companies like Here produce using hundreds of vehicles equipped with radar and laser scanners.

That’s quite a boast, but it’s convinced investors to put $2 million into lvl5, the San Francisco startup announced today. The funding round, at an undisclosed valuation, includes Gmail creator Paul Buchheit, now a partner at the Y Combinator accelerator where lvl5 began, and Max Altman of 9Point Ventures, brother of Y Combinator head Sam Altman.

In three months, Kouri says lvl5 has recruited 2,500 users and mapped 500,000 miles of roads. “If we want to do this at a scale that would be very valuable to [a carmaker] we would need either 50,000 Payver users,” he says, “or we would just need a partnership with one [carmaker] and have them promise to install our software in their cars.”
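
A back-of-the-envelope reading of Kouri’s figures follows. It is only a rough sketch: it assumes every user maps at the same average rate, and the mileage counts repeat drives over the same roads, so it says nothing about unique road coverage.

```python
# Figures quoted by Kouri for lvl5's first three months.
users = 2_500
miles_mapped = 500_000

# Average miles driven with Payver per user over that period.
miles_per_user = miles_mapped / users

# Hypothetical projection: assumes 50,000 users would each map at the same
# average rate (miles include repeat drives over the same roads).
target_users = 50_000
projected_miles = target_users * miles_per_user

print(f"{miles_per_user:.0f} miles per user; about {projected_miles:,.0f} miles per quarter at 50,000 users")
```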

That’s the strategy for other mapping companies. Cameras are becoming standard-issue in many new cars for features like adaptive cruise control and lane departure warning. Here, for instance, is installing software that collects data from the cameras and other sensors of cars made by its co-owner BMW starting in 2018. Co-owners Audi and Mercedes-Benz may follow. “The lower-quality signals from cameras are one of these data sources,” says Sanjay Sood, Here’s VP of HD mapping, in an email to Fast Company.

How much tech do they need?

Whether or not lvl5 succeeds, its chosen path illustrates some fundamental debates around autonomous cars. One concerns the sensors that cars need to see the road.

Kouri and his cofounders, fellow Tesla alum Erik Reed and iRobot vet George Tall, say that cameras that cost a few bucks are all that’s needed to make detailed maps. Along with radar sensors, they are sufficient for cars to navigate themselves. It’s a philosophy shared by Kouri’s and Reed’s former boss, Tesla founder Elon Musk.

Other experts advocate LIDAR, essentially the laser version of radar, which its makers claim can measure details of any 3D space to within a few millimeters. But LIDAR is expensive: sensors cost up to tens of thousands of dollars. Here, Google, Apple, and others use LIDAR-equipped vehicles to build detailed baseline maps.

But any map will also need to be up-to-date, with incremental updates to account for things like road construction, accidents, and busted traffic lights. Lvl5 says it can do that more cheaply by crowdsourcing from vehicles equipped with smartphone or built-in car cameras, an idea that sounds plausible to experts.

“With significant data capture you can leverage multiple images or videos to help resolve different viewpoints of the same object, which can help boost recognition performance of key objects on the road,” says Matt Zeiler, CEO of image-recognition AI company Clarifai, which is not connected with lvl5, in an email to Fast Company. “For example, seeing a street sign from 200 feet, 100 feet, and 50 feet could be combined to give a better prediction of what and where that street sign is.”
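
Zeiler’s street-sign example can be sketched as a weighted average in which closer sightings count for more. This is an illustration, not lvl5’s or Clarifai’s method: it assumes each sighting yields a one-dimensional position estimate along the road and that the error grows linearly with viewing distance (the hypothetical `error_per_meter` parameter), and it combines the estimates with inverse-variance weighting.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    position_m: float  # estimated position of the sign along the road, in meters
    distance_m: float  # how far the camera was from the sign when it saw it


def fuse(observations: list[Observation], error_per_meter: float = 0.05) -> float:
    """Inverse-variance weighted mean of the position estimates.

    Assumes (hypothetically) that the standard deviation of each estimate
    grows linearly with viewing distance, so nearer sightings get more weight.
    """
    weights = []
    for obs in observations:
        sigma = error_per_meter * obs.distance_m
        weights.append(1.0 / sigma**2)
    total = sum(weights)
    return sum(w * obs.position_m for w, obs in zip(weights, observations)) / total


sightings = [
    Observation(position_m=102.0, distance_m=60.0),  # roughly 200 feet away
    Observation(position_m=99.0, distance_m=30.0),   # roughly 100 feet
    Observation(position_m=100.5, distance_m=15.0),  # roughly 50 feet
]
print(round(fuse(sightings), 2))  # 100.29
```

Because every weight scales with one over the distance squared, the 50-foot sighting counts 16 times as much as the 200-foot one; the value of `error_per_meter` cancels out of the average.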

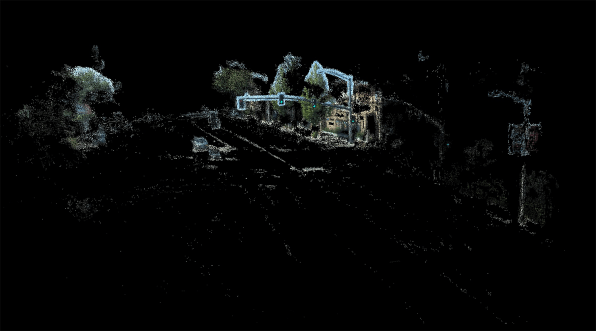

He’s describing the structure-from-motion process, which lvl5 and its bigger rivals use: analyzing the similarities and differences between photos of the same objects, taken from several angles, can produce 3D maps. Erik Reed showed me the results. From a single iPhone running Payver, lvl5’s software generated a ghostly 3D image showing rough outlines of things like trees and buildings, and almost-photographic images of traffic lights. Traffic lights are among about 100 items the software is trained to recognize, along with stop signs and lane lines (the only three items Kouri would reveal).
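
At the heart of structure from motion is triangulation: if you know where the camera was for two frames, the rays pointing toward the same feature intersect at that feature’s location. The following is a deliberately simplified, two-dimensional sketch, not lvl5’s pipeline. It assumes the camera positions are already known (say, from GPS and the phone’s motion sensors) and that each frame gives a bearing to the feature; real systems also estimate the camera poses and refine thousands of points at once.

```python
import math


def triangulate_2d(cam1, bearing1_deg, cam2, bearing2_deg):
    """Intersect two bearing rays in the ground plane.

    cam1, cam2: (x, y) camera positions in meters (assumed known).
    Bearings are measured counterclockwise from the +x axis, in degrees.
    Returns the (x, y) position of the observed feature.
    """
    d1 = (math.cos(math.radians(bearing1_deg)), math.sin(math.radians(bearing1_deg)))
    d2 = (math.cos(math.radians(bearing2_deg)), math.sin(math.radians(bearing2_deg)))
    # Solve cam1 + t1*d1 = cam2 + t2*d2, i.e. t1*d1 - t2*d2 = cam2 - cam1,
    # with Cramer's rule on the 2x2 system.
    bx, by = cam2[0] - cam1[0], cam2[1] - cam1[1]
    det = d1[0] * (-d2[1]) - (-d2[0]) * d1[1]
    if abs(det) < 1e-12:
        raise ValueError("rays are parallel; the two views give no depth information")
    t1 = (bx * (-d2[1]) - (-d2[0]) * by) / det
    return (cam1[0] + t1 * d1[0], cam1[1] + t1 * d1[1])


# A street sign at (10, 5) seen from two points along a straight road.
sign = triangulate_2d((0.0, 0.0), math.degrees(math.atan2(5, 10)),
                      (5.0, 0.0), math.degrees(math.atan2(5, 5)))
print(sign)  # approximately (10.0, 5.0)
```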

These capabilities are not unique to lvl5. But the fact that three smart guys, an app, and an Amazon Web Services cloud processing account can do it shows how accessible the technology has become. (The company is now recruiting three more employees and an intern.)

“There’s a lot of noise here. So in this one trip, it’s picking up things that we probably don’t care about,” says Kouri, noting the outline of a car on the other side of the road. “However, if we have 10 trips down the same road, not only do we get better accuracy for the features that we do care about, but we also have more references to eliminate the noise like this car.”
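
Kouri’s point about repeat trips amounts to a voting scheme. The minimal sketch below assumes each trip has already been reduced to a set of feature IDs (hypothetical labels such as `traffic_light_17`, standing in for detections matched to the same spot on the road). It keeps only features that show up on at least some fraction of trips; the 50% threshold is an arbitrary choice for illustration.

```python
from collections import Counter


def persistent_features(trips: list[set[str]], min_fraction: float = 0.5) -> set[str]:
    """Keep features seen on at least min_fraction of the trips.

    Transient objects, such as a car that happened to be on the road during
    one trip, appear too rarely to pass the threshold and are dropped.
    """
    counts: Counter[str] = Counter()
    for trip in trips:
        counts.update(set(trip))  # count each feature at most once per trip
    threshold = min_fraction * len(trips)
    return {feature for feature, n in counts.items() if n >= threshold}


trips = [{"traffic_light_17", "stop_sign_4", "lane_line_9"} for _ in range(10)]
trips[0].add("parked_car")  # a one-off detection on a single trip
print(sorted(persistent_features(trips)))  # ['lane_line_9', 'stop_sign_4', 'traffic_light_17']
```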

All of this works well, provided it’s a clear, sunny day. “I think most autonomous car companies would ditch LIDAR nowadays if it weren’t for low-light robustness,” says Alberto Rizzoli, cofounder of image-recognition company Aipoly. But, he notes, “with cameras alone you can get most of the info LIDAR gives you.”

Kouri argues that there are enough sunny days to make accurate baseline maps. “We can drive something in broad daylight and know that in night it’s probably not going to change that much,” he says.

Sood, of Here, is unconvinced. “A camera alone does not work in all situations. It does not work when the visibility is low and/or if the light patterns are difficult to make out,” he says. “An obvious example is a snowstorm, but sunsets or simple dust can also be problematic.”

Dead reckoning

Another controversy is whether digital maps need to show where a real-world object is in absolute terms—i.e., latitude and longitude—or just where it is relative to other objects. Companies like Here are trying to get as close to exact positioning as possible, supplementing sometimes-fuzzy GPS data with more precise sources.

Lvl5, meanwhile, claims that just knowing a car’s location relative to other things on the road, as humans do, is enough.

Kouri illustrates this with a toy car on a coffee table in the sitting room of the live-work mansion the company rents in San Francisco’s tony Russian Hill neighborhood. “If you’re driving down this road and you’re trying to shoot this gap,” he says, pointing to the space between two knickknacks, “what matters is: Are you lined up to the middle of these two things?” By this logic, the exact location of the car doesn’t matter. “It could be on Mars, as long as it knows it needs to go right at this angle,” says Kouri.
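
Kouri’s coffee-table demonstration can be expressed in a few lines. This is a hypothetical sketch, not lvl5’s code: it measures how far the car sits from the midpoint between two landmarks, along the line joining them. Because it uses only differences between positions, shifting every coordinate by the same amount leaves the answer unchanged, which is the sense in which the car “could be on Mars.”

```python
import math


def offset_from_gap_center(car, left, right):
    """Signed distance of the car from the midpoint of the gap between two
    landmarks, measured along the left-to-right axis.

    All three (x, y) points only need to share one coordinate frame; no
    latitude or longitude is required. Zero means centered; a positive value
    means the car is offset toward the right-hand landmark.
    """
    mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    ax, ay = right[0] - left[0], right[1] - left[1]
    length = math.hypot(ax, ay)
    if length == 0:
        raise ValueError("landmarks must be at distinct positions")
    return ((car[0] - mid[0]) * ax + (car[1] - mid[1]) * ay) / length


here = offset_from_gap_center(car=(2.5, 0.0), left=(0.0, 10.0), right=(4.0, 10.0))
shift = (1000.0, -500.0)  # move the whole scene somewhere else entirely
moved = offset_from_gap_center(
    car=(2.5 + shift[0], 0.0 + shift[1]),
    left=(0.0 + shift[0], 10.0 + shift[1]),
    right=(4.0 + shift[0], 10.0 + shift[1]),
)
print(here, moved)  # 0.5 0.5
```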

Some people question whether HD maps will be necessary as cars get smarter. Tim Wong heads the technical marketing team at Nvidia, which makes an in-car autonomous driving computer and software used by Audi, Mercedes-Benz, Tesla, Toyota, and Volvo. “An HD map makes things better,” Wong said at the Automated Vehicles Symposium in San Francisco. But “if I don’t have it, I still need to be autonomous. You are able to drive a road you’ve never seen before,” he said. “I would expect an autonomous vehicle to do the same things.” He conceded, however, that autonomous cars aren’t yet that smart.

Kouri doubts they ever will be, based on his experience at Tesla. In 2016, Tesla owner Joshua Brown was killed while his car was driving in Autopilot mode. Brown’s Model S mistook a tractor-trailer pulling across the road for a bridge and concluded the car would fit under it. Instead, the top of the car was sheared off.

Kouri was on the team at Tesla that analyzed the incident. “The car has radar in it and a camera, and the radar saw some sort of bridge-like object,” he says. “But if it had access to an HD map that was always updated, it would know, ‘there’s no bridge here, so I need to slam on the brakes. This is an abnormality that we need to stop for.’”
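
The check Kouri describes, cross-checking a sensor reading against an always-updated map, can be sketched as a simple rule. This is a hypothetical illustration only: `MapSegment` and its `has_overhead_structure` field are invented for the example, and a production system would weigh many more signals than a single yes-or-no map lookup.

```python
from dataclasses import dataclass


@dataclass
class MapSegment:
    # Hypothetical HD-map record for the stretch of road ahead.
    has_overhead_structure: bool  # e.g. a bridge, overpass, or sign gantry


def should_brake(radar_sees_overhead_object: bool, segment: MapSegment) -> bool:
    """Treat an 'overhead' radar return as an anomaly when the map shows nothing there."""
    # If the map confirms a bridge, passing under it is expected; otherwise stop.
    return radar_sees_overhead_object and not segment.has_overhead_structure


print(should_brake(True, MapSegment(has_overhead_structure=False)))  # True
print(should_brake(True, MapSegment(has_overhead_structure=True)))   # False
```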

With the help of Uber and Lyft drivers, the twentysomethings behind lvl5 are making detailed maps they say can compete with those of Google and BMW.

Fast Company