These Tools Help You Mislead The Algorithms That Track You On Social Media

What you post on social media–depending on the country you live in and the platform you’re using, or a data broker’s willingness to break laws or violate a terms of service agreement–could theoretically be sucked into algorithms that help determine your credit score or help an employer decide whether they should hire you. The IRS, reportedly, might be combing through social posts to decide who to audit. In China, the government plans to begin using its “social credit” score, which measures someone’s trustworthiness in part through social media activity, to decide who to ban from flights or trains.

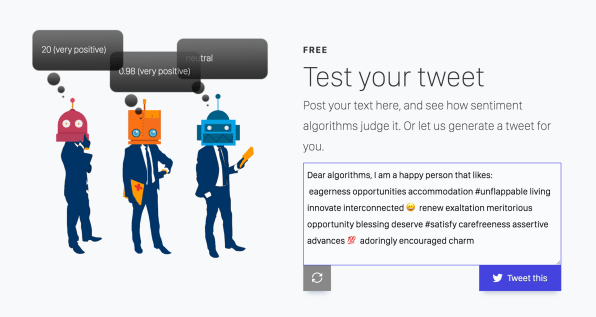

A new conceptual project proposes a solution: a set of tools that help consumers mislead any algorithms that might be tracking them. Using optimistic and positive language, for example, could make you more attractive to potential employers, while negative language does the opposite. At least one service that calculates a “reputation score” scours social posts for negativity towards work. In the new project, Dutch “privacy designer” and technology critic Tijmen Schep mocked up a tool that generates nonsensical tweets or Facebook posts with a series of positive words, so an algorithm looking for that specific language would give a user a higher score. Another of Schep’s proposed services would manage social posts to boost someone’s social credit score in China so they can avoid no-fly lists.
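To make the idea concrete, here is a minimal Python sketch of how a word-counting scorer could be gamed by a post stuffed with positive words. It is not Schep’s tool or any real scoring service: the word lists and the function names `naive_score` and `cloak_post` are hypothetical, chosen only for illustration.

```python
import random

# Hypothetical word lists; a real scoring service would likely use a far
# larger lexicon or a trained model. These are assumptions for illustration.
POSITIVE = {"great", "excited", "grateful", "love", "inspired", "thrilled"}
NEGATIVE = {"hate", "tired", "boring", "awful", "dread"}


def naive_score(post):
    """Toy lexicon scorer: positive-word count minus negative-word count."""
    words = [w.strip(".,!?").lower() for w in post.split()]
    positives = sum(w in POSITIVE for w in words)
    negatives = sum(w in NEGATIVE for w in words)
    return positives - negatives


def cloak_post(n_words=5, seed=None):
    """Generate a nonsensical post made only of positive words."""
    rng = random.Random(seed)
    choices = sorted(POSITIVE)  # sorted so a given seed is reproducible
    text = " ".join(rng.choice(choices) for _ in range(n_words))
    return text.capitalize() + "!"


if __name__ == "__main__":
    print(naive_score("I hate my boring job"))
    fake = cloak_post(5, seed=1)
    print(fake, naive_score(fake))
```

A scorer this simple rewards every positive word equally, which is why a string of upbeat nonsense raises the score. It is also why such padding would be easy to spot for any system that checks whether a post reads like real language.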

“It’s a lighthearted reaction to Cambridge Analytica and this whole idea that companies are analyzing us and we have no idea of how to fight back and how to respond to that–to play with the psychological profile that Cambridge Analytica would make, how would you do that?” says Schep. “One way would be to seed what you express with words that twist that idea.”

Schep, known for coining the term “social cooling”–the concept that living in the age of Big Data is beginning to constrain how we act–previously created art projects like the National Birthday Calendar, an online service that attempted to gather as much publicly available data about Dutch citizens as possible to make sure that everyone got the ideal birthday present. (In a hackathon to build the project, the team was “a little shocked” by how much personal data they could find.) Data brokers that scrape information online collect as much as they can and, in the U.S., create as many as 8,000 scores on factors like someone’s I.Q., level of gullibility, political views, and economic stability; they also note whether someone has had an abortion or is planning to have a baby.

This data is used to market products or, in the case of Cambridge Analytica, to target voters for the Trump and Brexit campaigns. It also could be used in less obvious ways. The insurance industry could theoretically use social media data to offer discounts to, for example, people who are more optimistic; some insurance companies already offer discounts for other types of data, such as the number of steps your Fitbit has tracked. The data company Digi.me previously explored using sentiment analysis in this way, though a spokesperson for the company says that it’s difficult to track at this point. It’s hard for an algorithm to tell, for example, if someone is tweeting something sarcastically. But she says that it’s something that they might come back to in the future. Companies like Experian have explored using social data for credit scoring, though a recent company blog post noted that one problem with this approach is that social data can be gamed.

Many major social platforms also prohibit this type of targeting: Twitter’s terms of service for developers, for example, say that Twitter content can’t be used to target individuals based on health, negative financial status, and other personal factors. This fact, along with the difficulty of using social data to judge something like a job candidate’s happiness, means that this type of analysis does not seem to be widespread now.

“I see an awful lot of startup companies talking about this sort of thing–‘we’re going to use your social media data for credit or insurance or housing or employment,’” says Aaron Rieke, managing director at Upturn, a nonprofit that works to ensure that technology meets the needs of marginalized people. “When I’ve done research on these companies, almost every single one kind of falls through in that their marketing way outran what they’re actually able to do or their lawyers will let them do.” A startup might try to use social media data, he says, but if their business model relies on violating Facebook’s terms of service, they’re unlikely to survive.

But Rieke also says that social media data could easily be used in these ways outside the U.S., and on other platforms. Companies could also violate the terms of service on social platforms. “We can’t sit here today and police every possible business model that some entrepreneur in Silicon Valley might come up with,” an attorney for HiQ, a company sued by LinkedIn for scraping data, told a judge. Laws don’t address current digital privacy challenges well. In the case of the IRS’s potential use of social media data, it’s not clear how the law would apply.

“The Privacy Act was passed in 1974,” says Kimberly Houser, a clinical assistant professor of business law at Washington State University who studied the IRS’s use of big data. “So this is well before social media. It’s my guess that the IRS is doing what they want to do because the law does not specifically address social media. However, the legislative intention is very clear that they do not want the government to have secret data collection services.”

It’s not clear, either, how policy in the U.S. may change in the wake of the Cambridge Analytica scandal. “The problems that are endemic in this current Facebook controversy are pretty hardwired into the online ecosystem right now,” says Emory Roane, policy counsel for the Privacy Rights Clearinghouse, a nonprofit that focuses on consumer privacy. “I think it remains to be seen what real policy pushes are going to come out of this.”

In the meantime, Schep’s Cloaking Company project points to another approach. Will a new class of startups build tools for consumers to attempt to fight the world of algorithms, either those used in marketing or for other purposes? Schep says that although his project began as a joke, he’s considering turning the services into a business and making the tools more sophisticated (right now, for example, the optimistic tweets that are generated could fairly easily be detected as fake). If these tools become commonplace, he says, it “will be a tug of war” between developers, with consumer tools trying to stay a step ahead of those that data brokers use.

He hopes the project makes people more aware of the ways that seemingly innocuous social media posts could be used. “With social cooling, my point there is that people have no idea [this is happening],” he says. “But they will gain an understanding in the next 10 years. In the next 10 years, people will start to see what’s going on with the data broker market, and then they will start to care more about privacy, and want to pay more for privacy.”
