This AI will slap a bikini on your naughty bits

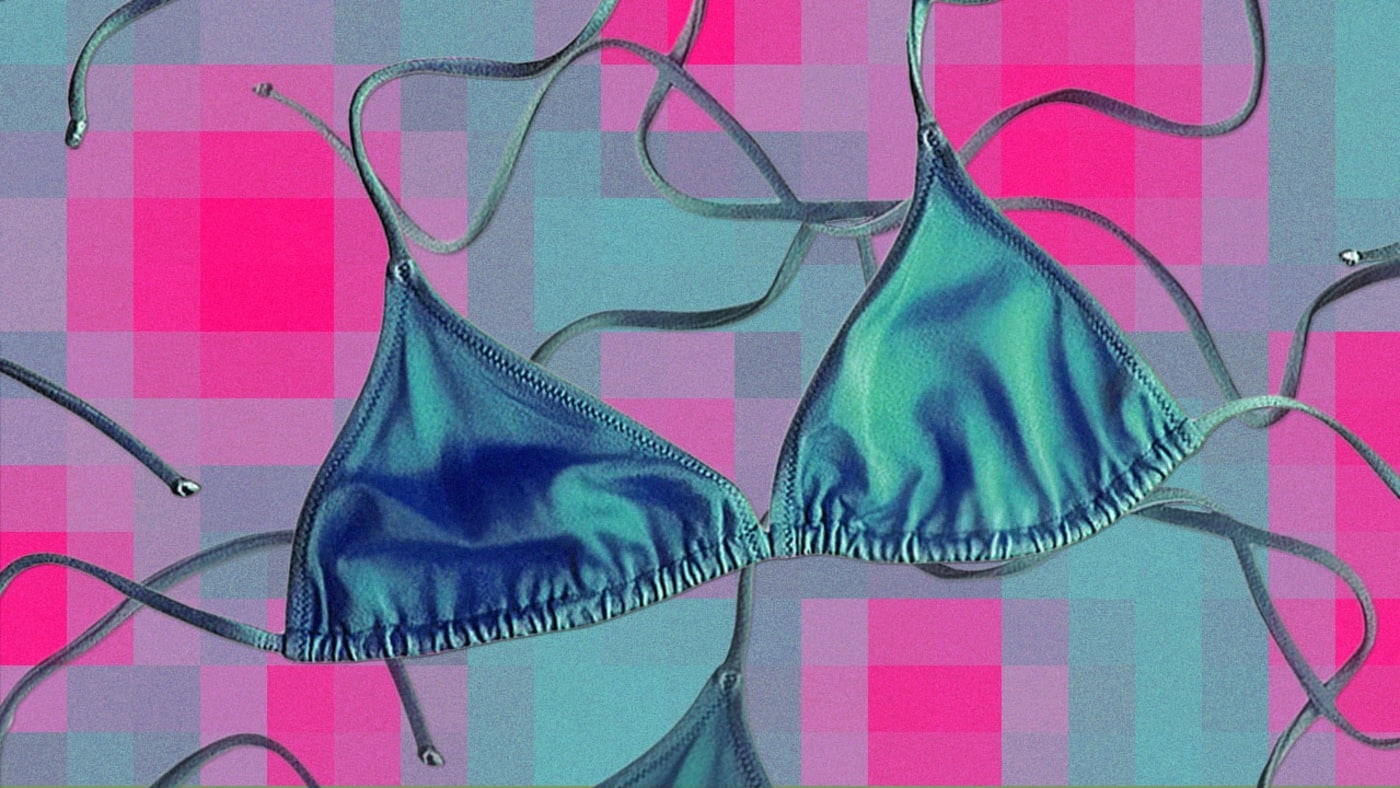

A new AI is designed to make NSFW images a little more SFW. A recent paper describes how researchers trained a type of AI algorithm known as a generative adversarial network to put bikinis on photos of nude women. After studying almost 2,000 pictures of women, both naked and bikini-clad, the AI can take an image of a lady in her birthday suit, figure out where to place a bikini, and create a new, more modest shot.

The team behind the work hails from Brazil’s Pontifical Catholic University of Rio Grande do Sul, a university run jointly by the local Catholic Archdiocese and the Society of Jesus, which may explain some of the inspiration behind the work. Describing their motivations, the researchers underscore the abundance of sexual content on the web, much of it easily accessible to children. They note that more than 90 percent of boys and almost two-thirds of girls have reported seeing online pornography before the age of 18. Their software, they write, could also be useful for countering revenge porn, or for protecting anyone who might just want a PG-rated internet experience.

Rather than simply blocking media that contains nudity, they propose, internet platforms could seamlessly cover up the naughty bits. Algorithms trained on hundreds of thousands of images have previously been designed to detect “adult content,” the researchers note, but “no work so far attempts at automatically censoring nude content.” Meanwhile, they argue, a simpler approach than bikinis, such as placing black boxes over genitals, would mean that “the censorship would still be perceived by the person consuming the content.”

“The motivation behind this task is to avoid ruining user experience while consuming content that may occasionally contain explicit content,” they write. Their study, “Seamless Nudity Censorship: an Image-to-Image Translation Approach based on Adversarial Training,” debuted last month at the IEEE International Joint Conference on Neural Networks in Rio de Janeiro.

The research joins a long history of censoring pictures of women deemed underdressed. Facebook and other online platforms use AI software and human moderators to regularly prohibit nudity, sometimes in ways that are considered excessive and inconsistent. Conservative communities around the world edit photos to add more clothes, from high school yearbooks to album art. There are far fewer examples of men being covered up, although they do exist. (The researchers, who downloaded their “data set” from torrent sites, say they didn’t use images of men because of time constraints but plan to add them in future iterations.)

There are some potential pitfalls. The technology could be tweaked and used in reverse, taking photos of bikini-clad ladies and putting anatomically correct body parts in. “We would like to emphasize that removing clothing was never our intended goal, it is merely a side-effect of the method, which is discarded anyway,” Rodrigo Barros, one of the authors of the paper, told The Register.

People could also end up using software like this to create a new kind of deepfake, a term that gained widespread attention after videos began circulating of unwitting victims, usually celebrities, inserted into pornographic films with the help of AI. But judging from the current limitations of this AI (the computer-drawn bikinis are sometimes lopsided, glitchy, or otherwise fake-looking), horny internet users might be better off finding real naked photos than trying to reverse-engineer bikini snapshots, at least for the time being.
