This camera captures 156.3 trillion frames per second

So much for Samsung phones’ 960 fps “Super Slow-mo.”

Scientists have created a blazing-fast scientific camera that shoots images at an encoding rate of 156.3 terahertz (THz) to individual pixels — equivalent to 156.3 trillion frames per second. Dubbed SCARF (swept-coded aperture real-time femtophotography), the research-grade camera could lead to breakthroughs in fields studying micro-events that come and go too quickly for today’s most expensive scientific sensors.
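To put that rate in perspective, here is a rough back-of-the-envelope conversion from frames per second to the time between frames. It uses only the two figures quoted above (156.3 THz and 960 fps); the function and variable names are made up for illustration. At 156.3 trillion frames per second, consecutive frames are about 6.4 femtoseconds apart.

```python
# Back-of-the-envelope conversion from frame rate to time between frames.
SCARF_RATE_HZ = 156.3e12   # 156.3 THz, as quoted in the article
PHONE_RATE_HZ = 960.0      # phone "Super Slow-mo" rate, as quoted in the article


def frame_interval_seconds(rate_hz: float) -> float:
    """Time between consecutive frames at the given frame rate."""
    return 1.0 / rate_hz


scarf_interval_fs = frame_interval_seconds(SCARF_RATE_HZ) * 1e15  # femtoseconds
phone_interval_ms = frame_interval_seconds(PHONE_RATE_HZ) * 1e3   # milliseconds
speedup = SCARF_RATE_HZ / PHONE_RATE_HZ                           # how many times faster

print(f"SCARF: {scarf_interval_fs:.2f} fs between frames")
print(f"Phone: {phone_interval_ms:.2f} ms between frames")
print(f"Ratio: {speedup:.3g}x")
```

By this arithmetic the research camera is on the order of a hundred billion times faster than the phone mode.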

SCARF has successfully captured ultrafast events like absorption in a semiconductor and the demagnetization of a metal alloy. The research could open new frontiers in areas as diverse as shock wave mechanics or developing more effective medicine.

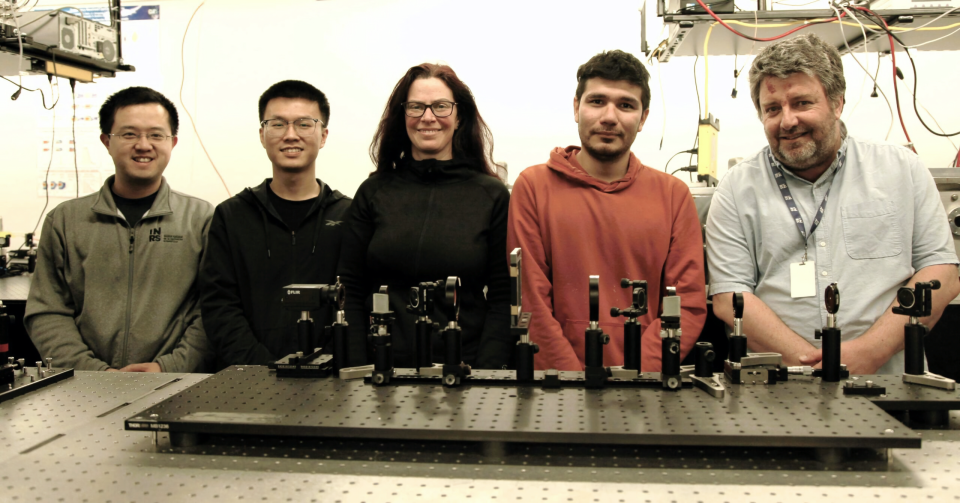

Leading the research team was Professor Jinyang Liang of Canada’s Institut national de la recherche scientifique (INRS). He’s a globally recognized pioneer in ultrafast photography who built on his breakthroughs from a separate study six years ago. The current research was published in Nature Communications, summarized in a press release from INRS and first reported on by ScienceDaily.

Professor Liang and company tailored their research as a fresh take on ultrafast cameras. Typically, these systems use a sequential approach: capture frames one at a time and piece them together to observe the objects in motion. But that approach has limitations. “For example, phenomena such as femtosecond laser ablation, shock-wave interaction with living cells, and optical chaos cannot be studied this way,” Liang said.

The new camera builds on Liang’s previous research to upend traditional ultrafast camera logic. “SCARF overcomes these challenges,” INRS communication officer Julie Robert wrote in a statement. “Its imaging modality enables ultrafast sweeping of a static coded aperture while not shearing the ultrafast phenomenon. This provides full-sequence encoding rates of up to 156.3 THz to individual pixels on a camera with a charge-coupled device (CCD). These results can be obtained in a single shot at tunable frame rates and spatial scales in both reflection and transmission modes.”

In extremely simplified terms, that means the camera uses a computational imaging modality to capture spatial information by letting light enter its sensor at slightly different times. Not having to process the spatial data at the moment of capture is part of what frees the camera to record those extremely quick “chirped” laser pulses at up to 156.3 trillion frames per second. The images’ raw data can then be processed by a computer algorithm that decodes the time-staggered inputs, transforming each of the trillions of frames into a complete picture.
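For readers who think in code, the toy sketch below illustrates the general idea of coded compression: each moment in time is stamped with a differently shifted copy of one fixed pattern, and the sensor adds everything into a single exposure. It is not the SCARF pipeline. The one-dimensional scene, the random binary pattern, the one-pixel-per-frame sweep and all function names are assumptions made for illustration, and the decoding step that the team's algorithm performs is not attempted here.

```python
import random


def sweep_mask(mask_row, shift):
    """Return the static code shifted right by `shift` pixels (wrapping around)."""
    n = len(mask_row)
    return [mask_row[(x - shift) % n] for x in range(n)]


def encode_snapshot(frames, mask_row):
    """Compress a list of 1-D frames into one 1-D snapshot.

    Frame t is multiplied by the code shifted by t pixels, then all coded
    frames are summed, the way a sensor integrates light over one exposure.
    """
    width = len(mask_row)
    snapshot = [0.0] * width
    for t, frame in enumerate(frames):
        code = sweep_mask(mask_row, t)
        for x in range(width):
            snapshot[x] += code[x] * frame[x]
    return snapshot


random.seed(0)
WIDTH, NUM_FRAMES = 8, 4
mask = [random.randint(0, 1) for _ in range(WIDTH)]  # hypothetical binary code

# Toy scene: a spot at pixel 3 that gets brighter in every frame.
scene = [[float(t + 1) if x == 3 else 0.0 for x in range(WIDTH)]
         for t in range(NUM_FRAMES)]

snapshot = encode_snapshot(scene, mask)
print("mask:    ", mask)
print("snapshot:", snapshot)
```

Because each frame sees a different shift of the pattern, the single snapshot carries a time-dependent fingerprint. A reconstruction algorithm that knows the pattern can use that fingerprint to separate the frames again, which is the part left out of this sketch.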

Remarkably, it did so “using off-the-shelf and passive optical components,” as the paper describes. The team describes SCARF as low-cost with low power consumption and high measurement quality compared to existing techniques.

Although SCARF is aimed more at researchers than consumers, the team is already working with two companies, Axis Photonique and Few-Cycle, to develop commercial versions, presumably for peers at other higher-education or scientific institutions.

This article originally appeared on Engadget.
