To understand artificial intelligence in 2019, watch this 1960 TV show

This article is part of Fast Company’s editorial series The New Rules of AI. More than 60 years into the era of artificial intelligence, the world’s largest technology companies are just beginning to crack open what’s possible with AI—and grapple with how it might change our future.

“If the computer is this important, why haven’t I heard more about it?”

“Well, the computer is a relatively new thing, and we’re just really getting an appreciation for the full range of its usefulness. Many people think that it’s going to spark a revolution that will change the face of the earth almost as much as the first industrial revolution did.”

The year is 1960. The skeptic posing the question is David Wayne, a crusty actor familiar to audiences of the time from movies such as Adam’s Rib and TV shows like The Twilight Zone. He’s talking to Jerome B. Wiesner, director of MIT’s Research Laboratory of Electronics and later the university’s president. The two men are cohosts of “The Thinking Machine,” a documentary about artificial intelligence aired as part of a CBS series called Tomorrow, which the network produced in conjunction with MIT. It debuted on the night of October 26, less than two weeks before John F. Kennedy defeated Richard Nixon in the U.S. presidential election.

Just in case you weren’t watching TV in 1960, you can catch up with “The Thinking Machine” on YouTube. It’s available there in its 53-minute entirety, in a crisp print that was digitized and uploaded by MIT. It’s racked up only 762 views as I write this, but deserves far more eyeballs than that.

If you watch this black-and-white program in 2019, your first impulse may be to dismiss it as a hokey period piece. As the hosts sit on a midcentury set that looks like office space at Mad Men’s Sterling Cooper, Wayne smokes cigarettes throughout while Wiesner puffs thoughtfully on a pipe. Wayne keeps dramatically pulling up film clips using a box that appears to be a prop, not an actual piece of technology. The whole thing feels stilted, and its leisurely pacing will likely try your patience.

But you know what? Back in 1960, this was an excellent introduction to a subject that mattered a lot—and which, as Wiesner explained, people were just beginning to understand. It includes still-fascinating demos and interviews with significant figures in the history of AI. Fifty-nine years after its first airing, its perspective on AI’s progress and possibilities remains unexpectedly relevant.

AI in its infancy

When “The Thinking Machine” first aired, the field of artificial intelligence was busy being born. The science had gotten its name only four years earlier, at a workshop at Dartmouth College where computer science’s brightest minds met to discuss “the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” Over the course of two months, attendees conducted research, shared works in progress, and explored the feasibility of teaching a machine to think like a human being.

Soon, these pioneers’ enthusiasm filtered out into the broader culture. Newspapers of the late 1950s and early 1960s are full of articles about AI, some of which got a little ahead of themselves in marveling at the current state of the science. “A new robot teaches complete science courses and sternly lectures erring students on the need for good study habits,” declared one 1959 story. “Electronic machines bake cakes, mine coal, inspect rockets, perform complicated calculations a million times faster than the human brain, operate motion picture theaters, and help care for hospital patients.”

Along with expressing giddy optimism, newspaper coverage of artificial intelligence worried about worst-case scenarios. Could smart machines render humans irrelevant? What would happen if the Soviet Union managed to win the AI war, which looked like it might be as strategically significant as the race to the moon?

By the time “The Thinking Machine” came along, there was a need for a clear, accurate look at AI aimed at laypeople—and the show, which was well-reviewed at the time, delivered. Among other AI applications, cohosts Wayne and Wiesner looked at work being done to teach computers to . . .

Recognize handwriting. A couple of scientists scrawl letters (“W” and “P”) on a screen using an electronic pen. A massive computer with banks of switches and whirring tape drives thinks for a few seconds, then guesses each letter. It doesn’t always get them right, but grows more confident as it goes along, proving the point that computers learn by analyzing data—the more, the better.

Play games. Not chess, Go, or Jeopardy, but checkers, which was a sufficient challenge to strain the intellect of a computer in 1960. The creator of the checkers program shown in “The Thinking Machine,” IBM’s Arthur Samuel, watches as a man takes on the machine using a real checkerboard. The human player tells the computer his moves by flipping switches, and is informed of the computer’s moves via flashing lights.

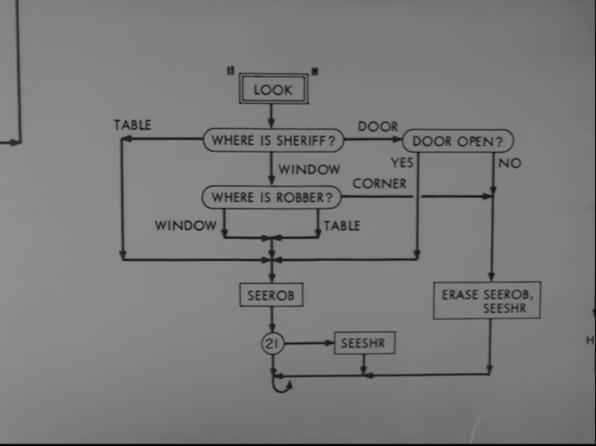

Tell stories. MIT’s Doug Ross and Harrison Morse taught the TX-0, the university’s famous computer, to write scripts for TV westerns—sans dialog—involving a shootout between a robber and a sheriff. It does so through a “Choose Your Own Adventure”-esque process involving variables such as how drunk the bandit gets before drawing his gun. The show presents dramatizations of three of TX-0’s scripts, ending with one in which a bug in the software results in an endless loop of the robber spinning his gun barrel and the sheriff gulping shots of liquor. (For the record, they’re played respectively by an uncredited Jack Gilford and Heywood Hale Broun.)

Same as it ever was

There’s plenty that’s endearingly archaic in these and other segments. (The very idea of wanting a computer to write a TV western is as 1960 a desire as you can get.) But I was more struck by the fact that the problems these AI experts were trying to solve in the early 1960s—and the way they went about solving them—have lots of parallels today. Computers have finally gotten good at reading handwriting: Samsung’s Galaxy Note 10 has built-in recognition that understands even my scrawls. But many companies are still tackling other pattern-recognition challenges. And like the handwriting-recognition research shown in “The Thinking Machine,” they involve feeding example after example to the computer until it begins to understand.

Arthur Samuel, the creator of the checkers program, pioneered the whole notion that teaching computers how to play games is an effective way to derive lessons that can be applied to other challenges—a philosophy that continues to drive innovation at companies such as Alphabet’s DeepMind. The year before “The Thinking Machine” aired, Samuel also coined the term “machine learning,” which isn’t just still in use—it’s among the buzziest of current tech-industry buzzwords. When the phrase comes up on the 1960 show, it’s an uncanny direct connection between past and present, almost as if the program had suddenly referenced Instagram influencers or 4chan trolls.

Meanwhile, Hollywood is currently smitten with the concept of using AI to write scripts, such as one for a 2018 Lexus commercial. Unlike MIT’s TX-0, which generated its westerns from scratch, the software responsible for the Lexus ad analyzed a vast repository of award-winning ads for inspiration. But in both cases, it seems to me, humans had a lot more to do with the results than the computer did. When Wayne is astonished by TX-0’s apparent creativity, Wiesner cautions: “It’s marvelous to do . . . on machines, but far from miraculous.” That admonishment applies equally to AI’s role in creating the Lexus spot.

As “The Thinking Machine” progresses, it segues from tech demos to sound bites addressing whether, as Wayne puts it, “one day, machines will really be able to think?” CBS lined up an impressive roster of experts to chime in: Two of them, Claude Shannon and Oliver Selfridge, had even participated in the seminal 1956 Dartmouth workshop.

For the most part, they’re upbeat about AI’s future. “Machines can’t write good poetry or produce deathless music yet,” acknowledges Selfridge, stating the obvious. “But I don’t see any stumbling block in a line of progress that will enable them to in the long run. I am convinced that machines can and will think in our lifetime.”

Forty-six years later, in an interview he granted two years before his death, Selfridge sounded more jaded: Speaking of software, he snarked that “the program doesn’t give a shit.” But neither he nor any of his colleagues on “The Thinking Machine” says anything that seems ridiculous in retrospect—just overly optimistic on timing.

And for every prognostication that’s a little off, there’s another that’s prescient. For instance, when Shannon explains that computers will come to incorporate “sense organs, akin to the human eye or ear, whereby the machine can take cognizance of events in its environment,” he’s anticipating the use of sensors that’s a defining aspect of modern smartphones.

Still, if there’s a key point about the future of AI that the documentary fails to capture, it’s the miniaturization that gave us the smartphone, and the laptop and desktop PC before it. Wiesner and other experts keep throwing around terms like “giant” to describe the computers running AI software, and there’s copious, drool-worthy mainframe porn depicting sprawling machines such as the TX-2, MIT’s more powerful successor to the TX-0. A 1960 TV viewer might have concluded that the even smarter computers of the future would be even more ginormous.

At the time, transistors were already changing the computer industry, but the arrival of the first commercially available microprocessor, Intel’s 4004, was still more than a decade in the future. The scientists who provide predictions in “The Thinking Machine”—none of whom are still with us—wouldn’t be astounded by the state of AI circa 2019, and might even have expected us to have made more progress by now. What they don’t seem to have anticipated is that we’d experience so much of it using machines we carry in our pockets.

But it’s not the technological details in the show that feel surprisingly contemporary; it’s the hopes and fears, and the reminder that predicting the future with any precision is ultimately impossible even for people who know what they’re talking about. Toward the end, Wayne—whose primary contribution has been to serve as a wet blanket—poses yet another question to Wiesner. It’s one that people are still asking today. And even if Wiesner’s answer isn’t entirely serious, I found it oddly reassuring. Here’s their exchange:

“If fabulous machines like this are being developed everywhere, what’s going to happen to us all tomorrow? Who’s going to be in charge? Machines or man?”

“Man, I hope. You know, you can always pull the plug.”
