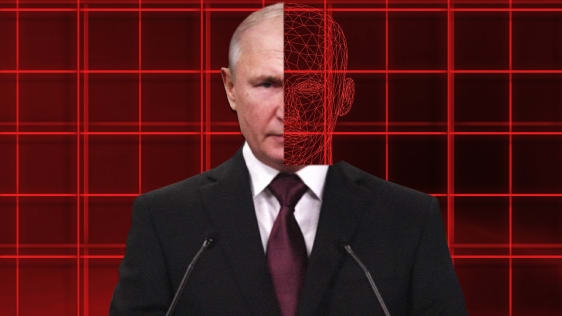

Watch chilling deepfakes of Putin and Kim Jong Un anticipating the end of U.S. democracy

“You blame me for interfering with your democracy, but I don’t have to,” Vladimir Putin says. “You are doing it to yourselves.”

While this Putin looks and sounds remarkably like the Russian president, the real Putin never spoke these words. That’s because this video is a deepfake, an algorithmically generated video that can make a subject realistically look like they’re saying something they never really said.

A nonpartisan nonprofit is using this fake video of Putin to convince people to vote. The group, called RepresentUs, recently released a pair of deepfake video ads featuring Putin and North Korean leader Kim Jong Un talking about how they need to do nothing at all to watch U.S. democracy disintegrate–that is, unless Americans exercise their right to vote. Forty percent of eligible voters did not vote in the 2016 election, according to Pew Research.

Deepfakes are made by training a machine-learning model on lots of video and audio of a person until the AI can approximate how the person might look and sound when saying new things. So far, we’ve mainly seen deepfake videos used as a malicious, high-tech way of spreading partisan disinformation. Among the best known is the deepfake of Barack Obama calling President Trump a “total and complete dipshit.”

Here, though, deepfakery is used for good. And the RepresentUs videos point out that they’re fake at the very end.

Still, the advertisements raise a thorny question for TV and social networks. Are deepfakes okay if they have warning labels? What if they identify themselves as fake, but only in some small print at the very end of the video?

Several TV networks apparently decided that even labeled deepfakes weren’t a good idea. RepresentUs says the videos were set to run as ads on Fox News, CNN, and MSNBC (in Washington, D.C.) just after the presidential debate Tuesday night, but that those networks declined to run the ads and gave no reason.

Facebook and YouTube, on the other hand, seem to have no problem with the videos, at least not yet—they’ve only been up for a few days. Facebook banned deepfakes in January, defining them as videos that have been “edited or synthesized, beyond adjustments for clarity or quality, in ways that are not apparent to an average person, and would likely mislead an average person to believe that a subject of the video said words that they did not say,” and that are “the product of artificial intelligence or machine learning, including deep learning techniques . . .”

Tech platforms such as Facebook are relying on computer vision AI to detect deepfakes, but it’s far from an exact science.

I asked Facebook if the RepresentUs “dictator” videos satisfy its community guidelines but didn’t immediately hear back. I’ll update this story if and when I do.

RepresentUs is using deepfakery to make a point about civic responsibility, not a political point about supporting a candidate or cause. These deepfakes are a bold creative choice that puts a harrowing message in the mouths of dictators, one that effectively communicates exactly what is at stake in the 2020 election.
