Welcome BERT: Google’s latest search algorithm to better understand natural language

BERT will impact 1 in 10 of all search queries. This is the biggest change in search since Google released RankBrain.

Google is making the largest change to its search system since the company introduced RankBrain, almost five years ago. The company said this will impact 1 in 10 queries in terms of changing the results that rank for those queries.

Rolling out. BERT started rolling out this week and will be fully live shortly. It is rolling out for English language queries now and will expand to other languages in the future.

Featured Snippets. This will also impact featured snippets. Google said BERT is being used globally, in all languages, on featured snippets.

What is BERT? It is Google’s neural network-based technique for natural language processing (NLP) pre-training. BERT stands for Bidirectional Encoder Representations from Transformers.

It was open-sourced last year and written about in more detail on the Google AI blog. In short, BERT can help computers understand language a bit more like humans do.

When is BERT used? Google said BERT helps it better understand the nuances and context of words in searches and better match those queries with more relevant results. It is also used for featured snippets, as described above.

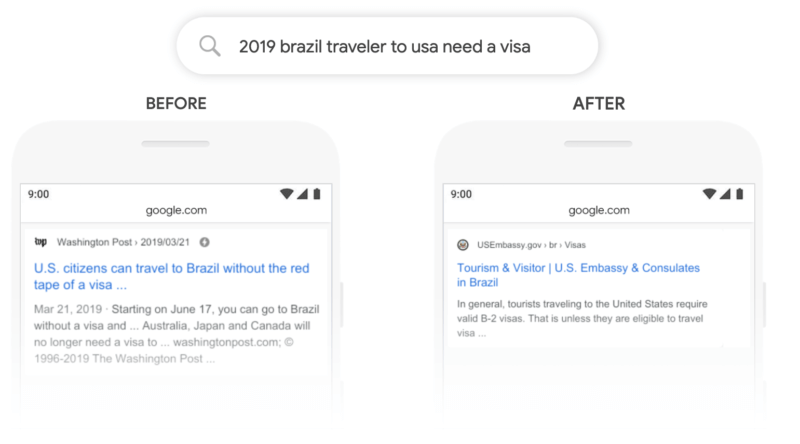

In one example, Google said, with a search for “2019 brazil traveler to usa need a visa,” the word “to” and its relationship to the other words in the query are important for understanding the meaning. Previously, Google wouldn’t understand the importance of this connection and would return results about U.S. citizens traveling to Brazil. “With BERT, Search is able to grasp this nuance and know that the very common word ‘to’ actually matters a lot here, and we can provide a much more relevant result for this query,” Google explained.
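To see why word order and small words like “to” matter, here is a toy Python sketch. It is not how Google Search or BERT works; it only assumes a naive matcher that treats a query as an unordered bag of words, and the shortened queries and function names are made up for illustration. Under that assumption, the two opposite travel directions look identical, and the difference only shows up once word order is kept.

```python
from collections import Counter


def bag_of_words(query: str) -> Counter:
    """Hypothetical naive matcher: count words and ignore their order."""
    return Counter(query.lower().split())


def ordered_tokens(query: str) -> list:
    """Keep the words in the order they were typed."""
    return query.lower().split()


# Shortened, illustrative versions of the travel query (not real search logs).
q1 = "brazil traveler to usa"
q2 = "usa traveler to brazil"

# Ignoring order, both queries contain exactly the same words.
same_bag = bag_of_words(q1) == bag_of_words(q2)

# Keeping order, the direction of travel around "to" is different.
same_order = ordered_tokens(q1) == ordered_tokens(q2)

print(same_bag)    # True
print(same_order)  # False
```

A model that reads the words on both sides of “to,” as BERT’s bidirectional approach is described as doing, has the information needed to tell these two queries apart.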

Note: The examples below are for illustrative purposes and may not work in the live search results.

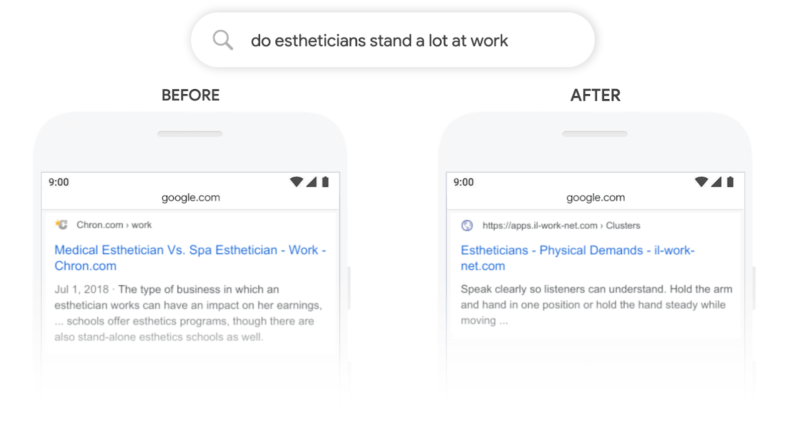

In another example, a search for “do estheticians stand a lot at work,” Google said it previously would have matched the term “stand-alone” with the word “stand” used in the query. Google’s BERT models can “understand that ‘stand’ is related to the concept of the physical demands of a job, and displays a more useful response,” Google said.

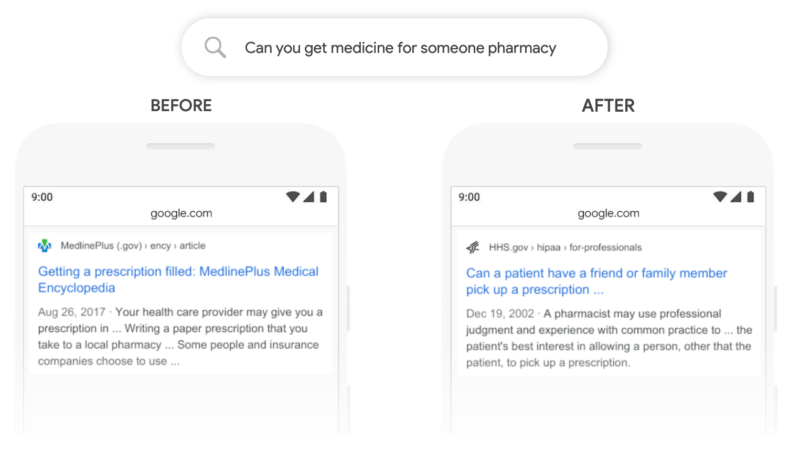

In the example below, Google can understand a query more like a human to show a more relevant result on a search for “Can you get medicine for someone pharmacy.”

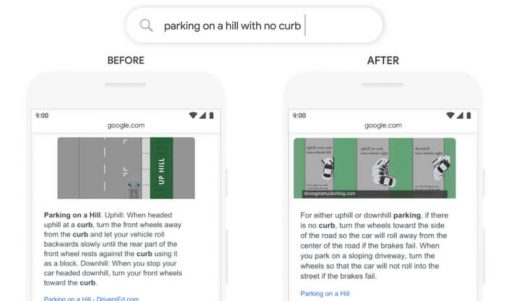

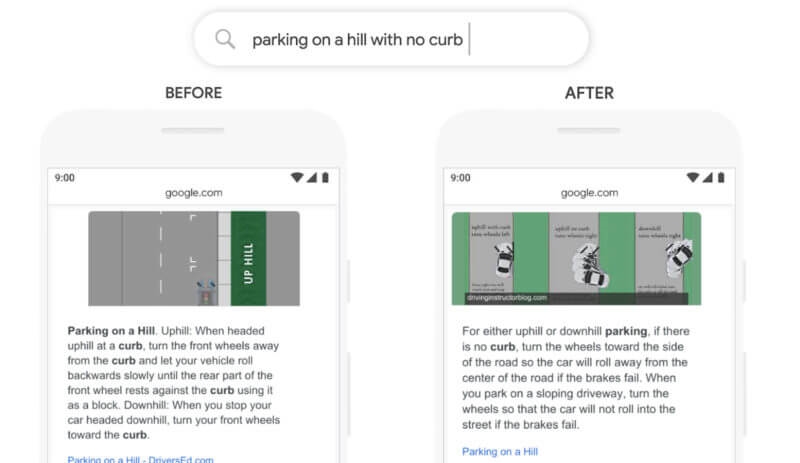

Featured snippet example. Here is an example of Google showing a more relevant featured snippet for the query “Parking on a hill with no curb.” In the past, a query like this would confuse Google’s systems. Google said, “We placed too much importance on the word ‘curb’ and ignored the word ‘no’, not understanding how critical that word was to appropriately responding to this query. So we’d return results for parking on a hill with a curb.”

RankBrain is not dead. RankBrain, introduced in 2015, was Google’s first artificial intelligence method for understanding queries. It looks at both queries and the content of web pages in Google’s index to better understand what the words mean. BERT does not replace RankBrain; it is an additional method for understanding content and queries. It’s additive to Google’s ranking system. RankBrain can and will still be used for some queries. But when Google thinks a query can be better understood with the help of BERT, Google will use that. In fact, a single query can use multiple methods, including BERT, for understanding the query.

How so? Google explained that there are a lot of ways that it can understand what the language in your query means and how it relates to content on the web. For example, if you misspell something, Google’s spelling systems can help find the right word to get you what you need. Or, if you use a word that’s a synonym for the actual word in relevant documents, Google can match those. BERT is another signal Google uses to understand language. Depending on what you search for, any one or a combination of these signals could be used to understand your query and provide a relevant result.

Can you optimize for BERT? It is unlikely. Google has told us SEOs can’t really optimize for RankBrain. But it does mean Google is getting better at understanding natural language. Just write content for users, like you always do. This is Google’s effort to better understand the searcher’s query and match it to more relevant results.

Why we care. We care not only because Google said this change is “representing the biggest leap forward in the past five years, and one of the biggest leaps forward in the history of Search,” but also because 10% of all queries have been impacted by this update. That is a big change.

We did see unconfirmed reports of algorithm updates mid-week and earlier this week, which may be related to this change.

We’d recommend you check your search traffic sometime next week to see how much your site was impacted by this change. If it was, drill deeper into which landing pages were impacted and for which queries. You may notice that those pages didn’t convert and the search traffic Google sent those pages didn’t end up actually being useful.

We will be watching this closely and you can expect more content from us on BERT in the future.

Marketing Land – Internet Marketing News, Strategies & Tips
