Why AirPods Are The Best Place For Siri, According To Apple Legend Bill Atkinson

In the heat of the controversy about removing the analog headphone jack on the iPhone, Apple released a line of wireless headphones called AirPods. They seemed to represent Apple’s vision for the way we’ll listen to mobile music in the future.

But the new AirPods are clearly meant to be far more than an audio device. With a powerful W1 chip inside and at least one microphone for listening, calling the AirPods a pair of headphones is like calling Amazon’s Echo a Bluetooth speaker.

You don’t get too far into Apple’s spiel on the AirPods without hearing about Siri’s role. “Talking to your favorite personal assistant is a cinch,” Apple says on the AirPods product page. “Just double-tap either AirPod to activate Siri, without taking your iPhone out of your pocket.”

AirPods (in their current form and in future revs) seem aimed at putting Siri’s gentle voice, and her growing personal assistant capabilities, in your ear. And your ear canal may be the very best part of the body to put such a thing.

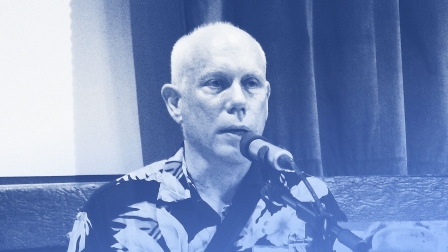

Veteran Apple engineer Bill Atkinson, known for being a key designer of early Apple UIs and the inventor of MacPaint, QuickDraw, and HyperCard, saw this coming a long time ago. He gave a presentation at Macworld Expo back in 2011 in which he explained exactly why the ear is the best place for Siri.

After Glassholes

Atkinson gave his presentation just before the arrival of Google Glass, which represented the first big effort to put assistive technology in a wearable consumer device. The vision was for the device to be always watching and listening, always ready to assist with some digital task, or to instantly recall some vital piece of information.

Well, we all know how that turned out. In my neighborhood people who wore Glass were labeled “Glassholes” or pitied for being hopelessly geeked.

There were other, more functional, problems. “Google Glass is in your way for one thing, and it’s ugly,” Atkinson told me. “It’s always going to be between you and the person you’re talking to.”

Google Glass and other augmented reality devices superimpose bits of helpful information over the world as viewed through a small camera lens. “I don’t think people want Post-it notes pasted all over their field of vision,” Atkinson says. “The world is cluttered up enough as it is!”

Since Glass showed up, the personal digital assistant has become even more important to us and has emerged in more compelling form factors than eyewear. Assistants exist as freestanding apps like Google Now, in a variety of messaging apps, inside freestanding home speaker devices like Amazon Echo, in desktop OSs like Windows 10 and macOS, and of course as a key part of mobile OSs like iOS.

The device on which we arguably use personal assistants most often, the smartphone, is far from ideal. “We’re used to using touch screens, but when you’re in a car, that’s not what you want to do, and you certainly don’t want to be looking at a display,” Atkinson reasons.

Foreshadowing

The ear-based personal assistant isn’t a completely new idea. “Apple’s AirPod device … is a significant step forward toward a new interface paradigm that has long been anticipated in science fiction,” says Ari Popper, founder of the futurist consulting and product design firm SciFutures. “The movie Her is an interesting articulation of this.”

The sentient assistant OS in Her is perhaps the best-developed imagining of the in-ear assistant, but the concept goes back much further than that.

Bill Atkinson points to Orson Scott Card’s Ender’s Game series from the 1980s, in which an artificial sentience called “Jane” lives in a crystal planted in the ear of the main character, Ender. Jane can do millions of computations per second and is aware and responsive on millions of levels. She’s hesitant to make herself known to humans because she’s painfully aware of the dangerous feelings of inferiority she may awaken in them. Pretty brilliant stuff.

Siri is headed for something like Jane, eventually, Atkinson says. “I think of this as Jane 0.1,” he says. “Within a few years it’s going to be able to do lots of things: It will hear everything you hear, it’s going to be able to whisper in your ear.”

The user can double-tap and quietly request some fact or figure, or some stranger’s name, or their birthday, or their children’s names. Reading email or texts in the car, or getting driving directions, would be easier and safer.

Atkinson says that as Siri gets more intelligent, it may be able to recognize certain important sounds in the environment. For example, if a user hears a siren while driving, the AirPods might immediately mute any messages or other audio.

In the ear, Siri is more discreet and polite as a notifications device. Sensors in the device will know if you are in conversation, and will break in only with the most important verbal notifications. “John, if you don’t leave now you will miss your meeting with IBM.” That’s far more discreet than getting buzzed on one’s wrist as a cue to look down at some update.

Siri Is No Jane, Yet

In fact, it’s Siri’s abilities that will hold back AirPods as an ear-based personal assistant. “Siri is a bit of a joke now; it doesn’t really understand the meaning of conversations,” Atkinson says. “That’s going to improve.”

For Siri to begin acting like Orson Scott Card’s Jane, her AI will have to advance far beyond what it is now. She will need a larger knowledge base, and the ability to understand the meaning and flow of conversation, not just store and regurgitate it.

“Your personal digital assistant needs to understand what you’re saying, and be able to piece together concepts even from your quiet mumblings,” Atkinson says. “It will understand the difference between a sequitur and a non sequitur; a simple transcribing technology wouldn’t understand that.”

“The assistant needs to understand when the user is talking about taboo subjects, or saying something that’s politically incorrect,” Atkinson says. “I think we will slowly get there.”

Those are challenges all AI must deal with in the coming years, not just Siri, and not just at Apple.

Adding The Camera

And, of course, the AirPods (at least for now) contain no camera. An effective personal assistant needs to see. The addition of a camera would open up the whole science of computer vision. Siri could recognize people, things, places, and motions, then connect them with data to give them meaning. In one simple use case, it could pair a face with a name in one’s contacts list.

Atkinson says the addition of a camera to AirPods may have to wait, for the same reasons people resented the camera on Google Glass. “A microphone is invasive also, but constant video is more threatening,” he says. “Adding a camera to the device might come 10 years later, when people realize that there really is no privacy.”

When Siri can see (and is smarter), she might start acting a lot more like Orson Scott Card’s Jane. “She’ll know everything about you,” Atkinson says. “We don’t have immediate recall, but Jane (Siri) will hear everything you hear and see everything you see.”

Change Agent

A device like that, of course, could radically reshape our personal computing universe. If the only thing our personal assistant device can’t do is show us images, we may not want a smartphone to fill that gap; we may want something larger. By then, our two “can’t-leave-home-without” devices might be our AI earpiece and a large transparent display that we keep folded up in our pocket.

And where the thing could go after that is something we’re only now envisioning.

“In the near future, as cloud computing, voice recognition, and AI algorithms improve, we envision ubiquitous human machine interactions initially expressed as voice and then as sub vocalization (currently deployed by the military) and finally as seamless mind machine interactions,” SciFutures’s Popper says. “The AirPod seems to be an early evolution of persistent AI assistants.”

Fast Company