Why It’s So Hard For Robots To Get A Grip

Berkeley robotics professor Ken Goldberg is turning an empty coffee mug around and around in his hands. “Oh, it’s so complicated for a robot to be able to make sense of that kind of data,” he says, eyeing his fingers grasping the cup with a look of wonder. Artificial intelligence is taking on complex cognitive tasks, such as assisting in legal and medical research, but a manual job like picking up laundry off the floor is still science fiction. It’s a long way from Roomba to Rosie the Robot. Universities like Berkeley and Cornell and companies like Amazon and Toyota are working to close the gap with mechanical hands that approach human dexterity.

Success would unleash a new robotics revolution with positive effects like reducing household drudgework, and fraught effects such as eliminating jobs in places like warehouses. Machines have been taking over manual labor for centuries, but they’ve been limited to specific, predictable tasks, as in factories. “Parts are all in a given place,” says Goldberg. “You’re just doing repetitive motions, and you can spend a lot of time getting the repetitions really, really precise.” The challenge is in “unstructured environments,” like a messy home or busy warehouse, where different things are in different locations all the time.

Get A Grip

“Grasping is something that’s very subtle and elusive,” Goldberg says. “Humans just don’t even think about it. If I want to pick something up, I just do it.” It’s an ostensibly simple task accomplished by a complex network of circuitry inside the mind. In lifting that mug, a person has to pick the best of many ways their fingers will fit around it, be it encircling the whole mug or grabbing some part of the handle. And which part, with which fingers, in which positions? Humans also have to factor in physics like friction and center of gravity.

The trick: Human brains have developed automated, easily customized routines. “When I see a new pen that I’ve never seen before, I know how to pick it up,” says Goldberg. “So what I do is, I map it into something I do have experience with and I apply that, adapt it on the fly.” He and his grad students at the Berkeley Autolab are applying the same principle to robotics. They’ve developed an online database of 3D virtual objects, called Dexterity Network (Dex-Net, for short), to pre-calculate how to grasp any kind of shape. Dex-Net contains about 10,000 virtual objects, with plans to grow to hundreds of thousands, perhaps millions.

During a visit last September, Goldberg empties about a dozen 3D-printed models on a table in front of me, and they are bizarre. I eye a red one about the size of a hearty baked potato. It’s lumpy, without anything that screams “handle.” Goldberg’s been telling me how human dexterity is superior to a robot’s, which seems like an invitation to demonstrate. Mimicking the vise-like grasp of a robotic claw, I close my thumb and forefinger over the closest thing to a handle and start to lift. It instantly slips from my grip, clattering on the table. “These we call adversarial shapes,” says Goldberg, who adds, “They’re sort of diabolical.” He believes that if Dex-Net can master enough of them, it should be able to handle any object.

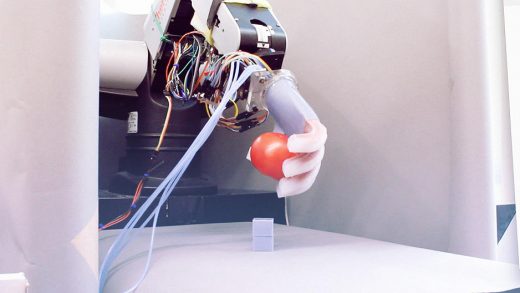

In my defense, this was my first attempt. Dex-Net employs an algorithm that makes 1,000 tries per virtual object, with 1,000 different variations. The goal is to build an inventory of “robust” grips a robot can draw on. I return to the lab in December, where grad student Jeff Mahler, who runs Dex-Net, has connected a popular two-arm industrial bot, called YuMi, to the network. “It’s very good at doing things like fixed motions,” Goldberg says. “But in this challenge…every situation is different, so it’s constantly adapting to what it is sensing. And that’s new.”
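To make the idea of a “robust” grip concrete, here is a minimal Python sketch. It is not Dex-Net’s actual code: it assumes a toy friction model in which a two-jaw pinch holds only if the jaws are lined up closely enough with the surface, and it scores each candidate grip by how often it survives 1,000 randomly jittered tries. Every name and number in it (`robustness`, the noise levels, the two candidate grips) is made up for illustration.

```python
import math
import random


def grasp_holds(misalignment_rad, friction_coeff):
    """Toy friction-cone test (an assumption, not Dex-Net's model):
    the pinch holds if the jaw axis is tilted away from the surface
    normal by no more than the friction angle atan(mu)."""
    return abs(misalignment_rad) <= math.atan(friction_coeff)


def robustness(nominal_misalignment_rad, trials=1000,
               pose_noise_rad=0.1, friction_mean=0.5,
               friction_noise=0.1, seed=0):
    """Fraction of jittered tries in which the grip still holds."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    successes = 0
    for _ in range(trials):
        # Each try perturbs the jaw pose and the (unknown) friction.
        angle = nominal_misalignment_rad + rng.gauss(0.0, pose_noise_rad)
        mu = max(0.0, rng.gauss(friction_mean, friction_noise))
        if grasp_holds(angle, mu):
            successes += 1
    return successes / trials


# Two hypothetical grips on one object: a well-aligned pinch on a
# flat side, and a badly aligned pinch on a lumpy bump.
candidates = {"flat side": 0.05, "lumpy bump": 0.45}
scores = {name: robustness(angle) for name, angle in candidates.items()}
best = max(scores, key=scores.get)
print(scores, "->", best)
```

A grip that works only when everything is lined up perfectly scores poorly here, which is roughly what happened with my pinch on the red object.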

Using Amazon’s Alexa Voice Service, Mahler asks the robot to pack some of those oddball 3D-printed objects into a box. It reaches for the red thingy the same way I had, with the same result. “Oh no! I dropped the object,” the robot exclaims. But even failures generate knowledge. “You could do this in parallel, so you have hundreds of robots doing experimentation,” Goldberg says. “Through a combination of accident and guided search they will figure out the strategies or policies that will work, and they can share them.”

Trying in another spot on the red object, YuMi finally gets a good grasp. The robot places it and two other items that Mahler requested into the box, adds packing material, and closes the box for shipping.

If you’re thinking, “Amazon might like this,” you’re right. In 2015, the e-commerce behemoth launched the Amazon Robotics Challenge, a contest to build robots that could replace people in its shipping centers. The University of Delft in the Netherlands won the 2016 competition, mastering the challenge to unload 12 products from a tote bag into a series of bins, then load another dozen objects from bins into a tote. Delft’s robot used a suction device for things with a flat surface, but it needed a claw for others. The process was precise, but agonizingly slow.

Berkeley hasn’t taken part in the Amazon challenge, but it will enter a longstanding contest this year for housekeeping robots. Part of the annual RoboCup competition (best known for its robot soccer games), RoboCup@Home challenges teams to perform household tasks like vacuuming, serving food, or cleaning up clutter. Each team will pick one of two Japanese models to program: Toyota’s Human Support Robot or SoftBank’s rather adorable Pepper.

How appealing would a robot housekeeper be? Very, Goldberg predicts. “If it cost even $2,000 but it would keep the floor clean, that’s worth it,” he says. “We’re constantly yelling at my kids to pick up after themselves.” The professor has two children with his wife, the filmmaker and Webby Awards founder Tiffany Shlain. Such bots wouldn’t just help with sloppy kids but also assist people limited by disabilities or old age, even extending beyond the home to pitching in on tasks like shopping.

A company called Seven Dreamers has taken a first step toward Rosie with a clothes-folding robot called Laundroid. A dozen years in development, the device is promised to go on sale in March. It’s extremely slow and does just that one chore.

Give It A Hand

In the original Westworld, Michael Crichton’s 1973 exploitation film, only the slightly misshapen hands give away who is a robot. “Supposedly you really can’t tell, except by looking at the hands. They haven’t perfected the hands yet,” a veteran guest to the android-populated Westworld park tells his friend, a first-time visitor.

Today’s robotics have a long way to go. The YuMi in Goldberg’s lab has essentially two rigid metal fingers (called jaws) that open and close. It might grab better with a full hand, or at least with fingers that could sense what they are grabbing. “Currently they don’t do this at all,” Goldberg says. “It tries to close the jaws, and if they close all the way, it says, ‘Oh, I missed it.’” Funded by industrial firm Siemens (as well as Google and Cisco), Goldberg’s team is developing technology to improve real robots already deployed in industry. But there are very different ways to build future robot hands.

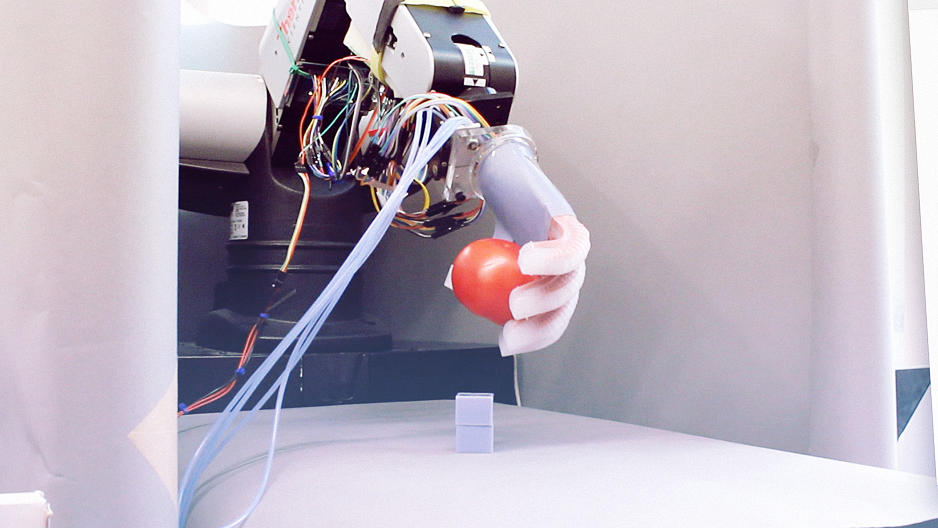

One developed by researchers at the Cornell Organic Robotics Lab looks freakishly human, with four fingers and a thumb roughly the color of light human skin. Instead of metal and motors, the fingers are made of rubbery silicone with air ducts. “Basically, each finger is a balloon,” says Cornell assistant professor Rob Shepherd. It’s driven by compressed air, like that from the CO2 tanks that power paintball guns. The underside of the finger doesn’t stretch much, but the top portion can as air is pumped in, causing the finger to curl inwards, rather like a human’s.

You can literally shake hands with Cornell’s device, and it’s not surprising that one proposed use of the tech is for a bionic hand. (The U.S. Air Force funds the research in part because it “is interested in human health and well-being,” says Cornell.)

Flexible fingers could make the grasping process a lot easier. “What is beneficial about our prosthetic hand is that it can conform to and deflect around objects,” says Shepherd, “which means it can pick up objects of different shapes without needing to come down exactly right on them.” Rubbery fingers don’t supersede Berkeley’s progress on dexterity algorithms, but they allow wiggle room (literally), increasing the chance that a grip will work.

Cornell’s would not be the first bionic hands. Companies such as Bebionic and Open Bionics make mechanical hands like something out of the latest sci-fi flick. They are capable of fine dexterity, but in part because a human brain is still operating them. The hands pick up electrical signals from the flexing of muscles in the amputee’s forearm. And these hands, with multiple segments, joints, and motors, are expensive. Open Bionics is aiming to get its hand down to around $3,000.

Shepherd reckons that rubbery hands, made by pouring silicone into molds, could be mass-produced for about $50 apiece (including incorporating some additional technologies we’ll mention in a bit). Flexible robotics aren’t new, either. A company called Soft Robotics Inc., for instance, makes mechanical arms with flexible grippers (though not resembling fingers) for delicate, preprogrammed tasks such as packing fruit without crushing it.

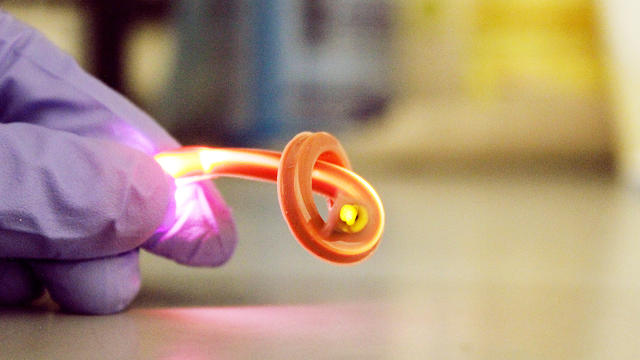

Cornell’s breakthrough is the development of sensors that are both precise and simple to build. Within each silicone finger are three clear polyurethane tubes, called light guides, that act like fiber-optic cables. Each has an LED on one end and a photodetector on the other. The amount of light passing through the tubes diminishes as they bend with the finger. Combining the photodetector readings from all three tubes allows the robot to calculate the position of each finger and even how hard it is pressing on something. Shepherd has posted a video of the hand running its fingers over three tomatoes and picking the ripe one by sensing how soft it is.

The fingers could even feel pain, in a sense, by detecting excessive pressure, say from gripping too hard or something falling on them.
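How three dimming light guides might add up to a sense of position, pressure, and “pain” can be sketched in a few lines of Python. This is a rough illustration, not Cornell’s method. It assumes that curling the finger dims all three guides by about the same amount, that pressing on something dims one guide more than the others, and that both effects scale linearly with the light lost. The calibration constants and the pain threshold are invented placeholders.

```python
import math


def power_loss_db(baseline, reading):
    """Light lost, in decibels, relative to the straight-finger baseline.
    Both values must be positive photodetector readings."""
    return 10.0 * math.log10(baseline / reading)


def estimate_finger_state(baselines, readings,
                          bend_deg_per_db=30.0,   # hypothetical calibration
                          force_n_per_db=2.0,     # hypothetical calibration
                          pain_threshold_n=5.0):  # hypothetical limit
    """Combine three light-guide readings into bend, force, and a pain flag."""
    losses = [power_loss_db(b, r) for b, r in zip(baselines, readings)]
    shared = min(losses)          # loss common to all guides: assumed bending
    extra = max(losses) - shared  # loss in one guide only: assumed pressing
    force_n = force_n_per_db * extra
    return {
        "bend_deg": bend_deg_per_db * shared,
        "force_n": force_n,
        "pain": force_n > pain_threshold_n,
    }


baselines = [1.0, 1.0, 1.0]  # readings with the finger straight and untouched
straight = estimate_finger_state(baselines, [1.0, 1.0, 1.0])
curled = estimate_finger_state(baselines, [0.5, 0.5, 0.5])
pressed = estimate_finger_state(baselines, [0.5, 0.5, 0.05])
print(straight, curled, pressed, sep="\n")
```

In this toy version, the tomato test would amount to watching how slowly `force_n` climbs as the finger presses into a softer fruit.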

Still Out Of Reach

Robots have to overcome a lot of mechanical and computational challenges before they can take on most human chores. Human hands are extremely detailed, building and growing from the cellular level. “What we’re doing is making a very low-resolution approximation of a human hand,” says Shepherd. Cornell’s fingers have three sensors and may get up to 100, but the nerves in a human finger are the equivalent of thousands of sensors.

Simply adding more sensors won’t close the dexterity gap, either. “We have a hard time dealing with the sensory data that we already have,” says Goldberg, about the robots Berkeley is using. “So it’s not just having the sensor. It’s the algorithms that go with it.” In short: Even a klutz among humans is a dexterity virtuoso among robots. Closing that gap will take time. “What I generally argue is that we’re far from that, very far,” says Goldberg. That could be a bummer for people who want to ditch their housework but a relief for those who want to keep their factory, warehouse, or agricultural work.

Fast Company
