Why This Diet App Is Using Computer Vision To Help You Lose Weight

One of America’s most popular diet apps is trying something new in its quest to help you keep off the pounds: computer vision. Lose It, a freemium weight-loss app with more than 3 million monthly active members, is rolling out “Snap It” this week, a beta feature that automatically identifies the foods users eat. The idea is that, eventually, customers can photograph their meals instead of entering them manually. It’s the latest innovation in a cutthroat marketplace, and one that even outflanks much larger rivals like Google.

Computer Vision And Weight Loss

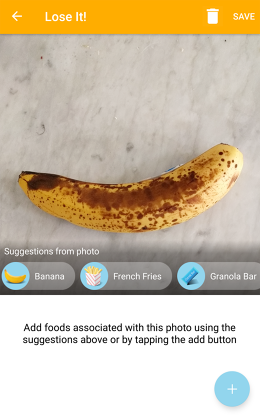

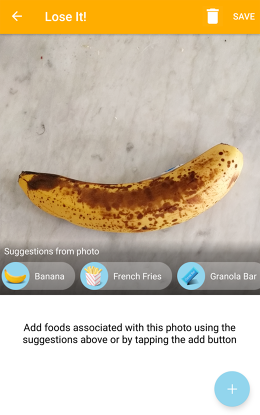

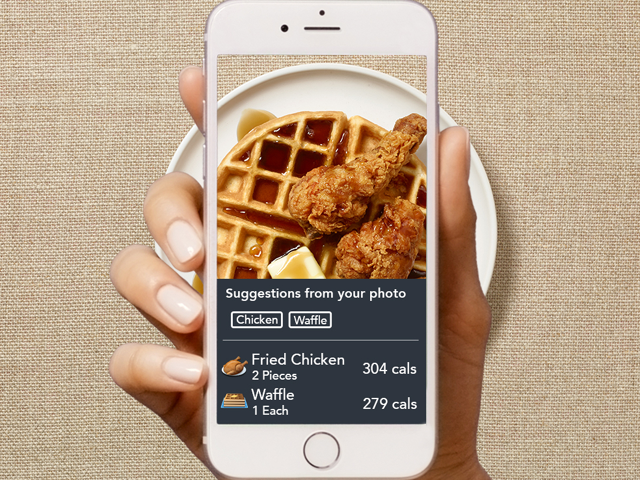

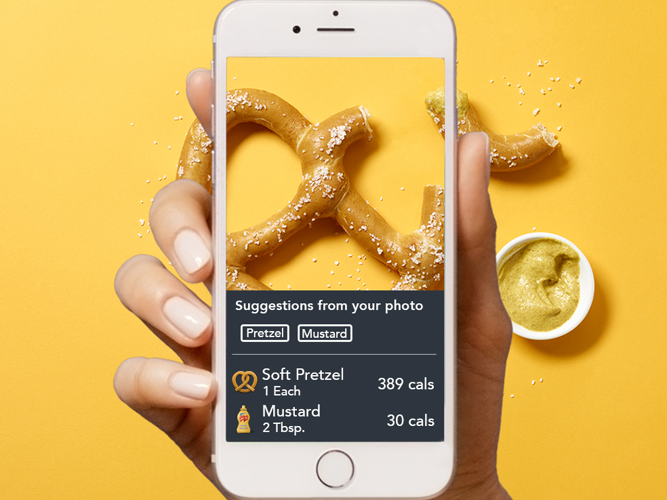

I tried out a beta version of the feature last week. You choose a meal inside Lose It’s app and take a picture. The app analyzes the photo and compares it against models from external databases as well as Lose It’s internal database, then offers what the company calls “food suggestions based on what it sees.” For instance, a photograph of sushi brings up sushi listings, and the user refines the entry to specify exactly what type of sushi it was. For Lose It, the idea is to let users log meals more quickly.

Lose It’s image recognition feature, which CEO Charles Teague told me in an interview is designed to “semi-automate tracking,” is a less sophisticated version of the holy grail of diet apps: a feature that estimates the calories in a meal when you point a phone at it. Teague repeatedly invoked Tesla’s Autopilot as a metaphor during our interview and emphasized how data would be used to improve the product over time.

Last year, Google began work on Im2Calories, an experimental app that estimates the calories in a meal from a photograph of it. SRI International, a nonprofit research institute, is working on a similar project called Ceres, which uses images of meals to provide nutritional information and portion estimates.

By comparison, Lose It’s feature identifies only the food itself, not its nutritional content. The challenge is that computer vision is still a young field and is hard to implement in commercial settings without the massive research budgets of tech giants like Google and Microsoft. That difficulty became clear when I started taking pictures of my food.

Lose It puts out a great product, with arguably the best user interface of any calorie-tracking app, but the limits of its image recognition are, well, an obstacle.

An Omelette Is Not An Almond

To take the image recognition for a test drive, I ran a highly unscientific breakfast test. I would photograph an easy target (a banana), a slightly harder one (coffee in a mug), and a difficult one (breakfast from the Whole Foods buffet counter), and see how the app performed.

Lose It identified the banana almost as soon as I snapped it. However, the coffee and the full breakfast presented challenges.

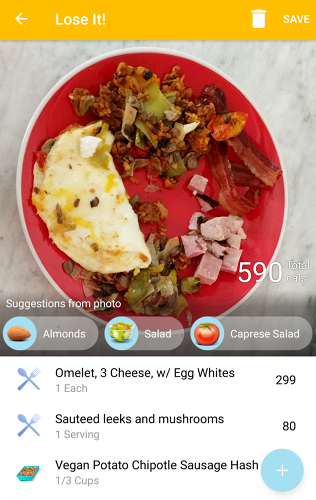

Coffee in a mug was misidentified right away as a soup or a milkshake. An admittedly complicated plate with an egg-white omelette, vegetable hash, bacon, ham, and sautéed vegetables registered as either almonds, a salad, or a Caprese salad.

This is one of the biggest problems with computer vision: It works well in controlled environments with easily identifiable items. In the real world, things get messier.

“Those complicated cases are hard,” Teague told Fast Company. “The biggest thing for us to emphasize, and why I use the Autopilot analogy, is that they need cars out there obtaining functioning data, and getting the info that helps you drive the car. That’s what we need as well. We’re actually beginning gathering those millions and millions of photos of correlated foods. I hope users don’t have an experience like what we described, but if they do, that’s what we think of as a beta.”

Lose It’s decision to roll out an experimental technology has a lot to do with the crowded market it competes in.

Diet Apps Are Big Business

The weight-loss app market is insanely crowded… and very profitable. A 2015 study by research firm Marketdata estimated the U.S. weight-loss market at $64 billion in 2014. Apps and software are a big part of that mix.

MyFitnessPal, an app with much of the same functionality as Lose It, was acquired by Under Armour for $475 million last year. Weight Watchers, meanwhile, has been doing more than those iconic Oprah Winfrey “I love bread!” advertisements; it has also shifted its business strategy to emphasize its app and online community groups alongside in-person meetings.

The challenge for Lose It is that customers have short attention spans. The app already has a barcode-scanning feature, but keeping users hooked (and, more importantly, converting them into paying subscribers) depends on making the app so easy to use that it becomes part of daily life.

The next step for Lose It and its competitors is refining this computer-vision technology so that it works equally well on your lunchtime grilled chicken salad and on an elaborate multi-course restaurant meal. Your phone might not recognize your dinner now, but it will very, very soon.

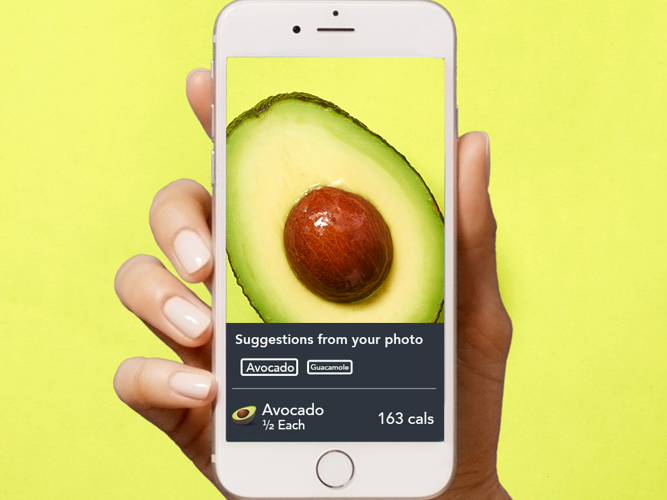

Lose It’s new feature uses computer vision to identify common food items without typing them in.

The goal of the new feature is to reduce time users spend entering items.

The feature works best on simpler items such as avocados.

However, the functionality stumbles when dealing with more complicated meals.

Fast Company
