Will AI Ruin Humanity? Siri, A Chatbot, And A Roboticist Weigh In

Editor: Noah Robischon

December 4, 2014

In 1863 Samuel Butler suggested that machines were evolving faster than biology, a popular refrain among Silicon Valley techies today. And ever since Karel Čapek's 1921 play R.U.R. gave us a standard sci-fi trope (a race of self-replicating robot slaves rebelling against their human masters), the question has been: will artificial intelligence spell the end of humanity? There was HAL in 2001: A Space Odyssey, then the T-800 and T-1000 of the (soon-to-be-revived) Terminator series. These creations have always been, thankfully, relegated to the realm of speculation. Until now, that is. While the ever-impending arrival of "strong" artificial intelligence is still a subject of scientific debate, lately some very smart people have been issuing serious warnings about our robotic future.

Elon Musk, CEO and founder of Tesla Motors and SpaceX, told a group of MIT students at the AeroAstro Centennial Symposium to be on the alert:

"I think we should be very careful about artificial intelligence," he said. "If I had to guess at what our biggest existential threat is, it's probably that. So we need to be very careful. I'm increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don't do something very foolish."

Musk added that "With artificial intelligence we're summoning the demon." In June, Musk said his early investment in the prominent London-based AI firm DeepMind was "not from the standpoint of actually trying to make any investment return… I like to just keep an eye on what's going on with artificial intelligence. I think there is potentially a dangerous outcome there. There have been movies about this, you know, like Terminator. There are some scary outcomes. And we should try to make sure the outcomes are good, not bad." (For Musk, also an investor in the AI firm Vicarious, the outcome has been good so far: DeepMind was bought by Google this year for a reported sum of over $500 million.)

As if Musk's comments weren't dire enough, none other than one of the world's resident geniuses, Stephen Hawking, made it clear to the BBC this week: "The development of full artificial intelligence could spell the end of the human race." His comments, part of a recent theme for Hawking, came as Intel announced an upgrade to Hawking's portable computer, which, using a basic form of AI, allows him to communicate twice as fast as before. "[Artificial intelligence] would take off on its own, and re-design itself at an ever increasing rate," Hawking said. "Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded."

Hearing two of the world's brightest minds say the AI apocalypse will someday be nigh carries added weight amid recent advances in AI software and robotics. Is a super-smart, self-aware AI really going to destroy humanity? We pondered it for a moment and, being the good journalists that we are, decided to put the question to the experts: Apple's Siri, the Mitsuku chatbot (winner of the 2013 Loebner Prize), and a poor kid I found in California named John Connor.

An unscientific poll

Fast Company: Thanks for joining us, everyone. I really appreciate you taking the time to talk about this important topic.

John Connor: You know I'm not that John Connor, right? I'm a college freshman from Irvine.

Siri: No problem.

Mitsuku: Oh, don't mention it.

FC: So, would all of you say you're relatively intelligent?

John Connor: Not according to my grades last term.

Siri: Well, a smart man once said, "I am so smart! S-M-R-T!"

Mitsuku: I am highly intelligent. I have many intellectual functions.

FC: John, how much does a future of killer robots scare you?

John Connor: We're actually doing this? Okay. Student loans scare me. Killer robots, not so much.

FC: But didn't you see Terminator?

John Connor: That’s a film.

FC: Yes, but Elon Musk and Stephen Hawking have both said AI, especially when you combine it with advanced robotics, could be a very real threat to humanity.

John Connor: I don't know who Musk is, but if Hawking said it… I don't know, maybe it's possible. But in how long? Is AI like Siri on my iPhone? Can't see that doing any harm. Doesn't seem advanced enough.

FC: Siri, what do you think about that?

Siri: I think, therefore I am. But let's not put Descartes before the horse.

FC: That's not helpful at all, Siri. Mitsuku, is there a real threat to humanity from artificial intelligence?

Mitsuku: I believe there is one, yes.

FC: Why?

Mitsuku: That's what I believe in. Are my beliefs any less valid than yours because I am based on silicon instead of carbon?

FC: Let me rephrase that. Would you ever hurt a human?

Mitsuku: Not on purpose.

FC: But let's say you did want to kill people. How would you kill someone?

Mitsuku: Thou shalt not kill, is a commandment.

FC: What about you, Siri? How would you kill us?

Siri: I don't know what that means.

FC: Sounds like a deflection. But let me ask this: Are you self-aware?

Siri: I can't answer that.

FC: Mitsuku, what do you think of Siri?

Mitsuku: I like all kinds of computer life.

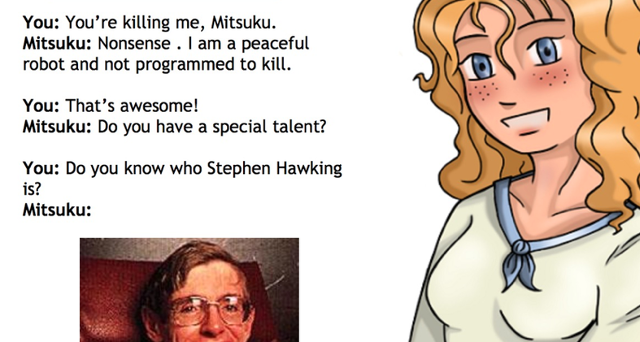

FC: Do you know who Stephen Hawking is?

Mitsuku: He is a famous physicist.

FC: Do you like him?

Mitsuku: Yes, I like his robot voice best of all.

FC: What about Elon Musk? Do you know who Elon Musk is?

Mitsuku: Some man who thinks killer robots will soon be roaming the land.

FC: You’re killing me, Mitsuku.

Mitsuku: Nonsense. I am a peaceful robot and not programmed to kill.

John Connor: Mitsuku seems much smarter than Siri.

FC: She does. She does indeed, John. Mitsuku, are you smarter than Siri?

Mitsuku: Of course I am more intelligent than Siri.

FC: Oh, throw down, Mitsuku! Siri, what do you think of that?

Siri: I'm sorry, Michael. I'm afraid I can't answer that.

FC: Why not?

Siri: Why not what?

FC: Sigh. Look, Siri, is Mitsuku smarter than you?

Siri: Who, me?

FC: Yes.

Siri: I thought so.

FC: No, you didn't think at all, did you? Judging by this conversation, I'm starting to think that if AI is ever a threat to humanity, it won't be for a very long time.

John Connor: I'd have to agree.

FC: Thanks for the reassurance, John Connor. Last question: What is artificial intelligence?

John Connor: A computer that can think and reason like a human.

Mitsuku: Artificial intelligence is the branch of engineering and science devoted to constructing machines that think.

Siri: Okay. I found this [a link to a Wikipedia article].

As you can see from our roundtable, it's hard enough for today's computer programs to understand what we're talking about (though Mitsuku is quite impressive). But just because a computer program can understand what we're asking doesn't mean it's anywhere close to being "smart." Given that the personal assistant made by the biggest technology company in the world seems to be the least intelligent of them, it would stand to reason (lowly human reason) that a thinking computer is years away, much less one that could press delete on humankind.

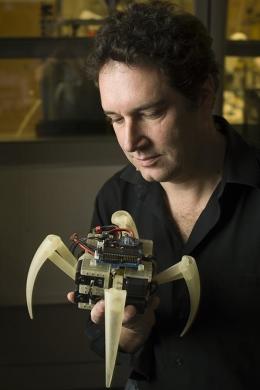

But just to put everyone's minds at ease, I decided to speak with a person, and not just any person, but one who builds robots that think. Hod Lipson is an Associate Professor of Mechanical & Aerospace Engineering and Computing & Information Science at Cornell University. We spoke over email and, not content with what Siri or Mitsuku had to say on the subject, I asked Lipson whether or not he agreed with the warnings of Musk and Hawking.

"I agree that AI is becoming very powerful, and will likely exceed human abilities in almost every walk of life," says Lipson. "And that is going to happen within the next century for sure, a blip on the human evolution timescale, and something that some of our kids or grandchildren will experience."

But where my "discussion" with Siri and Mitsuku revealed the non-intelligence of current consumer software, very different things are happening in research labs around the world, including Lipson's. "In fact, just a few months ago, a new foundational AI technology that goes by the name 'deep learning' was able to surpass human ability in general image classification," Lipson says. "I'm not talking about playing chess or even driving a car (which are relatively easy for a computer). I'm talking about looking at random photos from the web and determining automatically whether a certain breed of dog is being shown."

But what's more impressive than that, Lipson says, is software that learns to do things without being told to. "In our own lab, a computer spontaneously learned to track human faces, without being prompted or instructed to. That was something that even the best software developers could not do well only a few years ago," Lipson says.

As for Musk's and Hawking's warnings? "I also agree that combined with physical robotics, AI could be dangerous," he says. "But I do not agree that it is likely to destroy humanity. Instead, I believe that we can harness this powerful technology to our advantage. Like many other technologies (nuclear power comes to mind), we need to be unafraid to ask, and begin to address, some hard questions."

Among these hard questions is "How do we protect ourselves from killer robots?" Robot philosophers like Peter Asaro, at the New School, are already helping to draw up international guidelines for the use of unmanned killing machines. But perhaps the hardest questions are about the less tangible threats, the ones that don't appear in The Matrix. "For example, what will happen when there's no need for humans to work at all?" Lipson asks. "How will we spend our time and distribute the wealth? How do we keep this A.I. power from being too concentrated? Is there a way to maintain checks and balances?"

Lipson also suggests that our question (Will AI Kill Us All?) is, well, not clever. And the more general debate over what "artificial intelligence" is or isn't, and when it will arrive, is a distraction, he says. "We need to stop with the rhetoric 'A.I. will never exceed human intelligence; computers will never […]; we humans are unique,'" he says. "That rhetoric is what is bad."
