Your Smartphone Is Becoming An AI Supercomputer

iPhone owners will get an upgrade on September 13 that allows them to find a picture of nearly anyone or anything, anywhere and from any time. Neural network artificial intelligence in the new iOS 10 performs 11 billion calculations in a tenth of a second on each photo snapped to figure out who people are and even what mood they’re in.

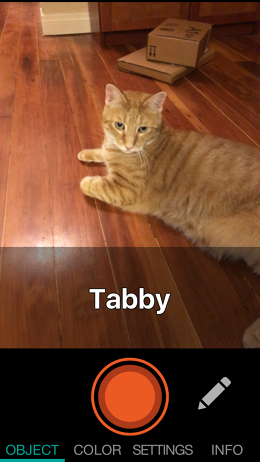

iOS 10’s new Photos app is only the latest example of a growing movement toward handheld artificial intelligence. Aipoly, an app released in January, recognizes objects and speaks their names aloud to blind people. Google Translate can replace text in one language with another language as soon as you point your camera at it. All this happens even if you can’t get cell reception.

Just as “the cloud” was becoming the answer to every “How does it work?” question, smartphones have started clawing back their independence, performing on their own tasks that once required a tether to a server farm. The result is a more natural AI experience, without the annoying or creepy lag of an internet connection to a data center. “If I said, ‘Hey Siri, what is this?’ it would take two seconds to send a picture to the cloud [and get a response back],” says Aipoly cofounder Alberto Rizzoli. “It kinda feels like talking to someone who just woke up.” Aipoly was not the first seeing app for the blind, but it was the first to identify objects instantly, by cutting its dependence on the cloud and running AI on the phone.

Such instantaneous AI will take augmented reality far beyond Pokémon Go by accurately mapping the surroundings in detail to insert rich 3D objects, characters, and animations into the video feed on a phone or tablet screen. Likewise, virtual reality will start looking more genuine with mobile AI, according to Gary Brotman, director of product management at mobile chip maker Qualcomm, where he heads the machine learning platform. “To do that right, everything has to be totally realtime,” he says. “So you have to be able to present the video, present the audio, and have the intelligence that drives the eye-tracking, the head tracking, gesture tracking, and spatial audio tracking so you can map the acoustics of the room to that virtual experience.”

AI will also drive convenience features. You might see virtual assistants that use the phone’s camera to recognize where you are, such as a specific street or the inside of a restaurant, and bring up relevant apps, says Rizzoli. And for once, such hyper-conveniences may not have the creep factor. If future AI doesn’t need the cloud, then the cloud doesn’t need your personal data.

“For privacy reasons, for latency reasons, for a variety of others, there’s no reason why the locus of control for analytics and intelligence shouldn’t be on the [phone],” says Brotman.

AI Inside

What’s brought the power of AI to handhelds? Video games.

“People want better mobile games on their phones and their iPads,” says Rizzoli. “So Apple has been particularly good, and so has Qualcomm and the other chip manufacturers, in making better performance.” That demand has pushed the development of more powerful mobile CPUs and graphics processing units (GPUs). While CPUs mostly work sequentially, GPUs work in parallel on the simple but very numerous tasks required for quickly rendering 3D graphics. AI, too, depends on performing many straightforward calculations in tandem.

Take what’s called a “convolutional neural network” (CNN), a staple of modern image recognition. Modeled on how the brain’s visual cortex works, CNNs divide the visual field into overlapping tiles and then, in tandem, pick out simple details such as edges from all of these tiles. That information goes to another layer of neurons (biological in humans or virtual in software), which might combine edges into lines; another layer might recognize primitive shapes. Each layer (there may be dozens) further refines the perception of the image. “You’re looking at a photo, and you’re identifying various elements of it at the same time,” says Rizzoli. “You’re identifying edges, and you’re identifying what shapes [might exist]. And all of this can be done in parallel.”
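To make the tile-and-filter idea concrete, here is a minimal, single-layer sketch in plain Python. It is an illustration under stated assumptions, not how any of the apps mentioned here are built: the kernel is a hand-picked, Sobel-style vertical-edge filter, whereas real CNNs learn their filter values from training data and stack many layers. The names `convolve2d`, `relu`, `layer`, and `VERTICAL_EDGE` are hypothetical.

```python
# A toy convolutional layer: slide a small filter (kernel) over every
# overlapping tile of a grayscale image and record how strongly each tile
# matches it. Illustrative only; real CNNs learn their kernels from data.
Image = list[list[float]]

# Hypothetical hand-picked kernel (Sobel-style): responds when brightness
# increases from left to right within a 3x3 tile, i.e. a vertical edge.
VERTICAL_EDGE: Image = [
    [-1.0, 0.0, 1.0],
    [-2.0, 0.0, 2.0],
    [-1.0, 0.0, 1.0],
]

def convolve2d(image: Image, kernel: Image) -> Image:
    """'Valid' (unpadded) cross-correlation, the operation CNN layers use."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Image = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Each output value depends only on its own tile, so all
            # (r, c) positions could be computed in parallel on a GPU.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out

def relu(feature_map: Image) -> Image:
    """Keep positive responses and zero out the rest (a common activation)."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def layer(image: Image, kernel: Image) -> Image:
    """One convolutional layer: filter every tile, then apply ReLU."""
    return relu(convolve2d(image, kernel))

if __name__ == "__main__":
    # 5x5 image, dark on the left and bright on the right: one vertical edge.
    img = [[0, 0, 1, 1, 1] for _ in range(5)]
    for row in layer(img, VERTICAL_EDGE):
        print(row)  # strong responses where the tiles straddle the edge
```

In a full network, the output of one such layer becomes the input image for the next, which is how later layers can combine edges into lines and lines into shapes.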

Smartphone chips have been up to the challenge for a few years. Even 2013’s iPhone 5s supports the new people, scene, and object recognition upgrade in iOS 10; and Aipoly is working on versions that will run on the iPhone 5 as well as Android phones going back several years. But programmers have only recently begun taking advantage of this power. Photo effects app Prisma, which launched in June, has been one of the early adopters.

Twenty-five-year-old Aleksey Moiseenkov created the app, which renders a smartphone photo in a choice of over 30 artistic styles, such as “The Scream,” “Mondrian,” and many with playful titles like “Illegal Beauty,” “Flame Thrower,” and “#GetUrban.” The effects are rendered nearly instantaneously, which belies their complexity. An Instagram filter simply tweaks basic parameters like color, contrast, brightness, or white balance in pre-set amounts. Prisma has to analyze the image, recognize elements like shapes, lines, colors, and shading, and redraw it from scratch like Edvard Munch or Piet Mondrian might. The results can be gorgeous, making even a banal picture intriguing.
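For contrast, here is a minimal sketch of what a preset filter of the kind described above amounts to: the same fixed arithmetic applied to every pixel, with no analysis of what the photo shows. It is an illustration, not Instagram’s actual code; the preset values and the names `preset_filter` and `apply_filter` are hypothetical.

```python
# A preset photo filter: fixed brightness, contrast, and color tweaks applied
# identically to every pixel. Illustrative only; the preset values are made up.
Pixel = tuple[int, int, int]  # (R, G, B), each in 0-255

def clamp(v: float) -> int:
    """Round and keep a channel value inside the valid 0-255 range."""
    return max(0, min(255, round(v)))

def preset_filter(pixel: Pixel, brightness: float = 20.0,
                  contrast: float = 1.2, warmth: float = 10.0) -> Pixel:
    """Hypothetical preset: stretch contrast around mid-gray, brighten, warm."""
    r, g, b = (contrast * (c - 128) + 128 + brightness for c in pixel)
    # "Warmth" (a white-balance shift) nudges red up and blue down.
    return clamp(r + warmth), clamp(g), clamp(b - warmth)

def apply_filter(image: list[list[Pixel]]) -> list[list[Pixel]]:
    """Apply the same preset to every pixel, independent of image content."""
    return [[preset_filter(p) for p in row] for row in image]

if __name__ == "__main__":
    print(apply_filter([[(128, 128, 128), (0, 0, 0), (255, 255, 255)]]))
```

Because each output pixel depends only on the matching input pixel, a filter like this never needs to know whether it is looking at a face or a street. A Prisma-style restyling, by contrast, has to recognize shapes, lines, and shading across the whole image with a neural network before it can redraw anything.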

Prisma initially did all this work in the cloud, but Moiseenkov says that could hurt the quality of the app. “We have a lot of users in Asia,” he says, “and we need to give the same experience no matter what the connection is, wherever the server or cloud processing is.” A new version of the iPhone app that came out in August runs entirely on the phone, and Moiseenkov is working on the same shift for the Android version.

An upgrade that applies the same artistic effects to video is coming, too, possibly in September, says Moiseenkov. “Video is much more complicated in terms of overloading the servers and all that stuff,” he says, so doing effects right on the phone is crucial.

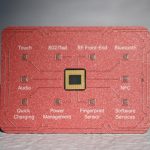

Moiseenkov and his team had to figure out from scratch how to get their AI software working on smartphones, but future programmers might have an easier time. In May, Qualcomm came out with a software development kit called the “Neural Processing Engine” for its Snapdragon 820 chip, which powers 2016’s high-end Android phones, like the Samsung Galaxy S7 and Note 7, Moto Z and Z Force, OnePlus 3, HTC 10, and LG G5. The software juggles tasks between the CPU, GPU, and other components of the chip for jobs like scene detection, text recognition, face recognition, and natural language processing (understanding conversational speech rather than just rigid commands).

Specialized AI chips are also arriving. A company called Movidius makes VPUs—vision processing units—optimized for computer vision neural networks. (This week, chip giant Intel agreed to acquire it.) Its latest Myriad 2 chip runs in DJI’s Phantom 4 drone, for instance, helping it spot and avoid objects, hover in place, and track moving subjects like cyclists or skiers.

Using only about one watt of power, the Myriad 2 would be thrifty enough to run in a phone, too. Movidius has made a few vague statements about future products. In June, it announced “a strategic partnership to provide advanced vision processing technology to a variety of VR-centric Lenovo products.” These could be VR headsets or phones, or both. Back in January, Movidius and Google announced a collaboration “to accelerate the adoption of deep learning within mobile devices.” I asked Movidius and Google about the deals, but they wouldn’t say anything more.

The iBrain

Apple has been characteristically coy about its AI plans, not saying much before it previewed iOS 10 in June.

The AI-powered Photos app is the biggest component. It uses neural networks for the deep learning process that recognizes scenes, objects, and faces in pictures to group them and make them searchable. Its Memories feature puts together collections of pictures and videos based on the people in them, the places, or what it judges to be a significant event, like a trip. Doing all this work on the phone keeps personal information private, says Apple.

Neural networks also power Apple’s predictive typing, which helps finish sentences, and AI arrived on the iPhone well before iOS 10. Apple switched Siri over to a neural network running on the phone back in July 2014 to improve its speech recognition.

Siri is how most app makers will plug into the iPhone’s AI, for now. Apple hasn’t released AI-programming tools for its A series chips the way Qualcomm has for Snapdragon, but a feature called SiriKit lets developers connect their apps so people can interact with the apps by chatting with Apple’s virtual assistant.

And Apple may not be far behind Qualcomm in helping third-party developers leverage AI. It recently spent a reported $200 million on a company called Turi that makes AI tools for programmers. Developers will have more power to work with, too. The new A10 Fusion chip in the iPhone 7 and 7 Plus has a CPU that runs 40% faster than the one in the previous-generation iPhones, and its graphics run 50% faster.

As artificial intelligence continues expanding across the tech world, it seems destined to grow on phones, too. Expectations are rising that gadgets will simply know what we want and what we mean. “I can say that the large percentage of mobile apps will become AI apps,” says Nardo Manaloto, an AI engineer and consultant focused on health care apps like virtual medical assistants.

Alberto Rizzoli expects to see a lot of new apps by CES in January. App creators “will follow…when more tools for deep-learning software become available, and the developers themselves become more aware of it,” he says. “It’s still considered to be a dark magic by a lot of computer science people…And it’s not.”

Fast Company