“All right, here we are in front of the elephants. The cool thing about these guys is that they’ve got really, really, really long, um, trunks. And that’s cool. And that’s pretty much all there is to say.”

“Me at the zoo,” a 19-second-long YouTube video, has been viewed more than 30 million times, a stat that starts to make sense once you learn that the fellow helpfully explaining elephants is YouTube cofounder Jawed Karim, starring in the very first YouTube video. He uploaded it as a test shortly before the service went live in 2005.

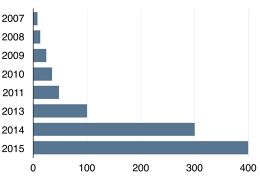

Fast forward 11 years, and YouTube is such a core element of the Internet as we know it that the numbers associated with it, though vast, no longer sound fantastic. Of course people upload 400-plus hours of video every minute—the equivalent of more than 75,000 copies of “Me at the zoo” and up 50-fold since 2007. Nor is it the least bit implausible that the service has more than a billion users who watch hundreds of millions of hours of video every day.
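The "75,000 copies" comparison is easy to check. The short calculation below uses only the two figures quoted above (400 hours uploaded per minute, and a 19-second clip); the variable names are just illustrative.

```python
# Figures taken from the article; names are illustrative only.
UPLOAD_HOURS_PER_MINUTE = 400   # "400-plus hours of video every minute"
CLIP_SECONDS = 19               # length of "Me at the zoo"

# Convert the uploaded hours to seconds, then count whole 19-second clips.
uploaded_seconds = UPLOAD_HOURS_PER_MINUTE * 3600
copies = uploaded_seconds // CLIP_SECONDS

print(copies)
```

Because the article says "400-plus" hours, the result is a lower bound, which is consistent with "more than 75,000."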

Simply making YouTube operate at that scale, and preparing it for continued growth—upload minutes tripled between 2013 and 2014, then jumped by another third in 2015—was a years-long quest. “Up until about 2012,” says technical lead for video infrastructure Anil Kokaram, the company’s engineering journey was “all about infrastructure and making sure pictures could be displayed. Then came the next step, which is how can we make that experience as smooth as possible and as seamless as possible?”

Kokaram, who joined the company in 2011 when it bought his Ireland-based startup, Green Parrot Pictures, was part of that next step, as YouTube beefed up its in-house team of computer scientists, who work in collaboration with colleagues at Google Research. In his pre-YouTube career, Kokaram invented technologies used for purposes such as restoring silent movies and contributed his expertise to blockbusters such as the Lord of the Rings and Spider-Man franchises. In 2007, he even won a science and engineering Oscar for his work.

It was an excellent background for someone charged with taking on the challenge of making YouTube video look better. And yet, there’s a basic fact about YouTube that is wildly removed from Kokaram’s history in film restoration and Hollywood postproduction: Everything the service does must accommodate its vast community of content creators and consumers. And that means that any technologies it applies to videos must work quickly, and require little or no involvement on the part of content creators.

VP of engineering Matthew Mengerink calls it the “billion-people problem.” As he explained to me, “We start off by saying, ‘Can we make it work at all?’ Then, ‘Can we commercialize it such that anybody with a mobile phone can do it?’ I think that’s where a lot of the magic is.”

From Lo-Fi to Hi-Fi

If you used YouTube in its early days, you remember when anything you uploaded ended up looking like it had been run through a Xerox machine a few times. Today, the service aims to make each video look as good as it can in a range of viewing scenarios—from a 4K TV to a desktop PC to a smartphone with an iffy cellular connection. It even wants the video you publish today to have a shot at looking better in the future.

That starts with preserving a video’s original quality. “If I were to send you a video on iMessage, it’s going to compress the dickens out of that thing and you’re going to get very few bits in it,” says Mengerink. “On YouTube, we’ll be storing all the bits, so that years later when there is an advancement, you could take advantage of that raw material.”

Even if you choose to store all the bits (and YouTube transcodes and stores multiple copies of each video, so it has versions optimized for varying streaming scenarios), you still need to decide what technologies to use to store and stream them. The service, which originally relied on Adobe’s Flash for video playback, now uses Google’s own VP9 technology, a modern open-source video codec that can deliver better image quality than Flash once did at half the bandwidth. That’s why even “Me at the zoo” both looks nicer and streams more efficiently than it did in 2005.

In YouTube’s early days, the elapsed time between a video upload and when it was live on the site could be frustratingly long. By working on multiple parts of each video simultaneously, the service has been able to grind down the wait time. “We break it up into little bits, process each bit independently, and then tape it back together,” says Kokaram.
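The "break it up, process each bit, tape it back together" idea can be sketched in a few lines. This is a toy illustration, not YouTube's pipeline: frames are plain integers, `encode_chunk` is a hypothetical stand-in for a real encoder, and the chunk size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(frames, chunk_size):
    """Cut the frame list into consecutive chunks of at most chunk_size."""
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]


def encode_chunk(chunk):
    """Hypothetical stand-in for an encoder: here it just doubles each value."""
    return [frame * 2 for frame in chunk]


def process_video(frames, chunk_size=4, workers=3):
    """Encode chunks independently in parallel, then join them in order."""
    chunks = split_into_chunks(frames, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so the "taping back
        # together" step is a simple concatenation.
        encoded = list(pool.map(encode_chunk, chunks))
    return [frame for chunk in encoded for frame in chunk]


video = list(range(10))          # ten pretend frames
result = process_video(video)
```

Each chunk is handled with no knowledge of its neighbors, which is what makes the work parallel and also why independent decisions can leave visible seams.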

That approach introduces technical challenges of its own, though. All of this processing happens at such a breakneck pace that the multiple instances of encoding proceed independently, making decisions about each chunk of video without a coordinated effort to impose visual consistency. In a worst-case scenario, that could leave a video with telltale seams between its chunks. Using machine learning technology based on analysis of 10,000 representative videos, YouTube has been able to optimize the process so that the encoders hit a target bit rate with a picture quality that doesn’t just look good, but looks good in an adequately consistent way.

The company has also begun monitoring the popularity of individual videos so that it can give some extra technological TLC to the most popular ones, such as applying a de-noising filter to improve image quality. “As soon as you get a few thousand views, you can say, ‘This deserves some attention. Let’s see if we can improve the quality,’” Kokaram says.

Quality is Subjective

Even if computer science tells YouTube that a particular encoding scheme results in high-quality results, that doesn’t mean that it’s the one that people would pick based on a taste test of varying technological approaches. So YouTube aims to achieve results that look good in a way that pleases the human brain.

“We engage in subjective assessment by actually sitting down and looking at loads of clips to make sure we’ve done the right thing,” Kokaram says. “It’s not something that people would think people at YouTube would do, because we have billions of files. We come up with ways to summarize billions of files with 50, and we sit down and look at 50.”

“There was a 15-minute argument about how should you process flames coming out of a rocket to get the best effect, and how do you take into consideration people’s preferences of color,” adds Mengerink. “Do you want highly saturated so that it’s bright and poppy, or do you want a natural rendition?” Tastes, he says, are not only subjective but different from region to region—viewers in Pakistan lean toward more highly saturated colors than those in the U.K.

Some of the image-quality problems that YouTube is solving are broadly applicable to Internet video of any sort, which is why the company shares engineering resources with corporate siblings such as Google Play Movies and TV, which streams big-time entertainment to phones, tablets, and other devices. Once again, however, YouTube’s billion-person problem is a factor. If the only content creators you’re dealing with are movie studios, it’s possible to convince them to hand over their material in a consistent, ideal file format. “In fact, if your file doesn’t comply to Google Play standards, they send it back,” says Kokaram.

Consumers, by contrast, are going to use whatever they happen to use, whether it’s a 4K video-capable smartphone or a creaky old camcorder. As a result, YouTube needs to be prepared to ingest video in almost any format into its system. It does its best to accommodate what’s out there, which also varies widely from country to country.

Since YouTube is capable of storing and streaming high-quality video, its message to creators, Kokaram says, is “please give us the best stuff that you have.” As more people own devices capable of shooting in high definition, the typical resolution of uploads is improving, but the service still sees lots of random odds and ends. “Suddenly now, 720p and 1080p are taking over,” Kokaram says. “We can also see that no matter what we do, 10% tends to be nonstandard. Netflix and all those other guys don’t have that problem, because their stuff is much more controlled. It’s something that I was quite shocked at when I first came here.”

Editing in the Cloud

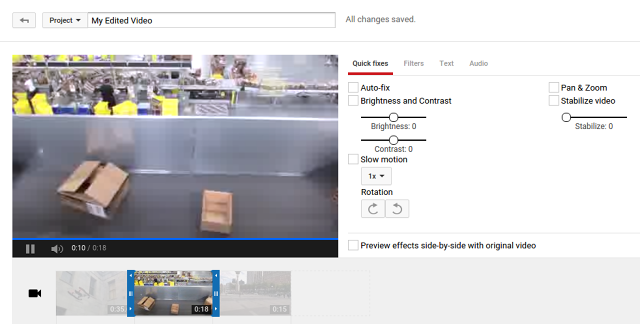

The effort that YouTube pours into image and audio quality is, in a way, invisible. It’s all done to produce better results without any intervention on the part of content creators and consumers, and you’re more likely to notice any glitches than you are the lack of them. But another key effort involves building tools that creators can use to polish up their videos in YouTube’s editor. Here again, scale is an inherent part of the challenge, since everything runs in the cloud on Google servers rather than as part of a local app along the lines of iMovie.

When Kokaram arrived at YouTube, he explains, “The editor had just launched, and they were looking for some high-end creator tools to attract people to the platform. We thought we’d just have a go with the most difficult thing we could possibly think of, which is slow motion, and managed to pull that off.”

YouTube slows down a video not by duplicating frames—which is a cakewalk to implement, but can cause a smearing effect—but by using an approach based on ambitious computer science. “You have 20 frames a second and you want to slow it down by a factor of two,” Kokaram says. “You need to put frames in between. We estimate motion between those two frames, and then try to build a motion field that is as smooth as the other frames.”
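To make the difference from frame duplication concrete, here is a deliberately tiny sketch of motion-compensated interpolation. It is not YouTube's algorithm: the "frames" are one-dimensional lists, motion is assumed to be a single whole-pixel shift for the entire frame, and the function names and the `max_shift` parameter are invented for the example. Real systems estimate a dense motion field per pixel or block.

```python
def estimate_shift(prev, nxt, max_shift):
    """Return the integer shift s that best maps prev[i] onto nxt[i + s].

    Tries every shift in [-max_shift, max_shift] and keeps the one with the
    lowest mean absolute difference. Assumes max_shift < len(prev).
    """
    n = len(prev)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(prev[i], nxt[i + s]) for i in range(n) if 0 <= i + s < n]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def interpolate_midframe(prev, nxt, max_shift=3):
    """Build the in-between frame by moving content halfway along the shift."""
    n = len(prev)
    s = estimate_shift(prev, nxt, max_shift)
    half = s // 2

    def clamp(i):
        # Repeat the edge pixel when the shifted index falls off the frame.
        return min(max(i, 0), n - 1)

    # Pull from prev (moved forward by half) and nxt (moved back by the rest),
    # then average the two motion-aligned samples.
    return [(prev[clamp(i - half)] + nxt[clamp(i + (s - half))]) / 2
            for i in range(n)]


frame_a = [0, 0, 9, 0, 0, 0, 0, 0]   # bright spot at index 2
frame_b = [0, 0, 0, 0, 9, 0, 0, 0]   # same spot, now at index 4
middle = interpolate_midframe(frame_a, frame_b)
```

In this example the bright spot lands at index 3 in the new frame, halfway along its path. Duplicating `frame_a` would have left it at index 2, and a plain average of the two frames would have produced two half-brightness ghosts.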

It works beautifully. But in order to make it available to YouTube’s huge army of creators, the company had to roll it out in a form that was carefully designed not to devour too many cycles of computing time. “The scale issue is a double-edged sword,” Kokaram says. “On the one hand, users believe you have all the computing power, and you can do everything. On the other hand, somebody has to pay for computing. In this case, we had to put a limit. We realize somebody could actually upload a file and ask for a thousand-time slow down.”

YouTube restricted how slow its slo-mo feature could go (the maximum is an 8X speed reduction), but that didn’t stop one intrepid user from slowing down a leaping corgi even further by running a video through the tool repeatedly. Kokaram says the trick is a reminder that the technologies his team builds must be designed on the assumption that real people will use them in unexpected ways.

The service also took the billion-people problem into consideration when it recently launched a custom blurring tool, building on a face-blurring option, which has been available since 2012. With the new feature, you can draw a box around something—a license plate, a logo on a product, an entire person—and the editor will track that item and blur it in subsequent video frames.

The feature doesn’t try to neatly blur the item’s outline. On YouTube, “it’s difficult to do that for 100,000 people every minute,” Kokaram says. “We make some compromises. In postproduction, you wouldn’t use a box. You would use the exact shape of the object. But here we just want to achieve this blur, and we use a simpler shape.”
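The box compromise Kokaram describes keeps the per-frame work simple. The sketch below shows only the blur step on a single frame, assuming some tracker (not shown) has already supplied the box for that frame. The frame is a small grid of grayscale numbers, and `blur_box` and its parameters are hypothetical names for illustration, not YouTube's tool.

```python
def blur_box(frame, top, left, height, width, radius=1):
    """Return a copy of frame with a mean blur applied inside the box only.

    frame is a list of rows of grayscale values. Each pixel inside the box is
    replaced by the average of its neighbors within `radius`, with the
    neighborhood trimmed at the frame edges. Pixels outside the box are
    left untouched.
    """
    rows, cols = len(frame), len(frame[0])
    out = [row[:] for row in frame]   # copy so the input stays unmodified
    for y in range(max(top, 0), min(top + height, rows)):
        for x in range(max(left, 0), min(left + width, cols)):
            window = [frame[ny][nx]
                      for ny in range(max(y - radius, 0), min(y + radius + 1, rows))
                      for nx in range(max(x - radius, 0), min(x + radius + 1, cols))]
            out[y][x] = sum(window) / len(window)
    return out


frame = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 7],
]
# Blur the 3x3 box in the top-left corner; the bottom row lies outside it.
blurred = blur_box(frame, top=0, left=0, height=3, width=3)
```

A rectangle needs only four numbers per frame and a pair of nested loops. Following an object's exact outline, as a postproduction tool would, means computing and storing a per-pixel mask for every frame, which is the cost the quote alludes to.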

Even though YouTube had to reduce the custom blurring feature’s ambition to make it manageable, the computer science behind it is a big deal. And once you’ve engineered techniques for identifying a specific element in a video as it moves around, there is an array of potential applications.

“If you look at it from an infrastructure perspective, these things help within the VR context,” Mengerink says. “They help within 3D video. You can start doing virtual green screening, because you can map foreground and background. You can delete objects out of videos. You can change scales—there are some crazy demos that people have done where they turn somebody who’s walking through a video into a giant.” It’s a sign of just how far YouTube has come since the era of “Me at the zoo” that it’s in a position to explore all of these possibilities—and whatever else a billion-plus people expect from it in the years to come.
